Description

The Voice-to-Text feature recognizes voice messages that you have successfully sent or received and converts them into text.

Note:

Voice-to-Text is a value-added paid feature, currently in beta. You can contact us through the Telegram Technical Support Group to enable a full feature experience.

This feature is supported only by the Enhanced SDK v7.4 or later.

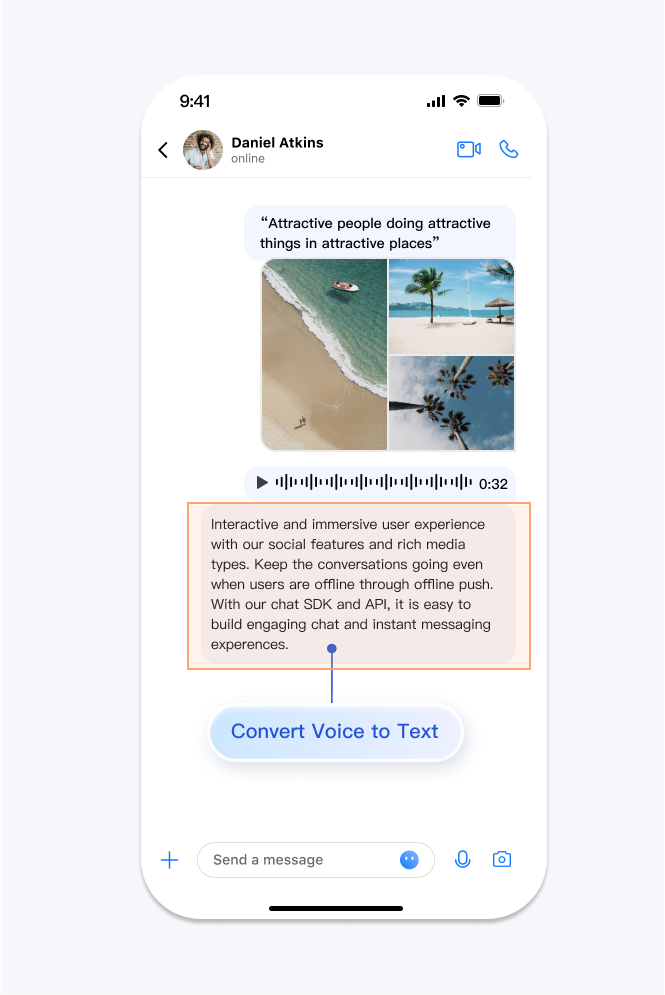

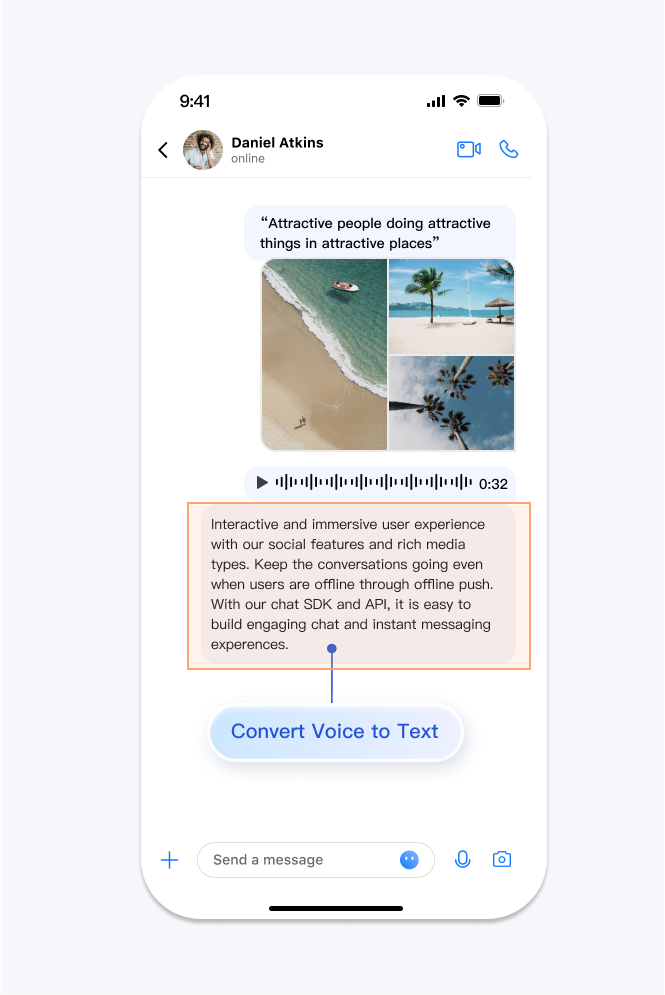

Display Effect

You can use this feature to achieve the text conversion effect shown below:

API Description

Speech-to-Text

You can call the convertVoiceToText interface (Android / iOS and macOS / Windows) to convert a voice message into text. The interface parameters are described below:

| Input Parameter | Meaning | Description |
| --- | --- | --- |
| language | Target language for recognition | 1. If most of your users speak Chinese and English, pass an empty string. The Chinese-English model is then used for recognition by default. 2. To recognize a specific language, set this parameter to the corresponding value. For the currently supported languages, see Language Support. |
| callback | Recognition result callback | The result is the recognized text. |

Warning:

The voice to be recognized must use a 16 kHz sampling rate; otherwise, recognition may fail.
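As a rough illustration of checking the sampling rate before requesting a conversion, the sketch below reads the sample rate field of a canonical RIFF/WAVE header. It assumes the recording is available as uncompressed WAV data, which may not match the format your app actually records in. The class and method names (`SampleRateCheck`, `readWavSampleRate`, `buildWavHeader`) are hypothetical and are not part of the Chat SDK.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the SDK: inspects a WAV header only.
final class SampleRateCheck {
    // Sampling rate required for recognition, in Hz.
    static final int REQUIRED_SAMPLE_RATE = 16000;

    // Returns the sample rate stored in a canonical RIFF/WAVE header,
    // or -1 if the bytes do not start with such a header.
    static int readWavSampleRate(byte[] header) {
        if (header == null || header.length < 28) {
            return -1;
        }
        if (!hasTag(header, 0, "RIFF") || !hasTag(header, 8, "WAVE") || !hasTag(header, 12, "fmt ")) {
            return -1;
        }
        // In the canonical layout the sample rate is a little-endian int at byte offset 24.
        return ByteBuffer.wrap(header, 24, 4).order(ByteOrder.LITTLE_ENDIAN).getInt();
    }

    static boolean hasRequiredSampleRate(byte[] header) {
        return readWavSampleRate(header) == REQUIRED_SAMPLE_RATE;
    }

    private static boolean hasTag(byte[] data, int offset, String tag) {
        return new String(data, offset, 4, StandardCharsets.US_ASCII).equals(tag);
    }

    // Builds a minimal 16-bit mono PCM header with no audio data (demo only).
    static byte[] buildWavHeader(int sampleRate) {
        ByteBuffer buf = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        buf.put("RIFF".getBytes(StandardCharsets.US_ASCII)).putInt(36);
        buf.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        buf.put("fmt ".getBytes(StandardCharsets.US_ASCII)).putInt(16);
        buf.putShort((short) 1).putShort((short) 1);    // PCM format, one channel
        buf.putInt(sampleRate).putInt(sampleRate * 2);  // sample rate, byte rate
        buf.putShort((short) 2).putShort((short) 16);   // block align, bits per sample
        buf.put("data".getBytes(StandardCharsets.US_ASCII)).putInt(0);
        return buf.array();
    }

    public static void main(String[] args) {
        System.out.println(hasRequiredSampleRate(buildWavHeader(16000)));
        System.out.println(hasRequiredSampleRate(buildWavHeader(44100)));
    }
}
```

For compressed formats the sampling rate would have to be read from the container or codec metadata instead, so treat this only as a starting point.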

Below is the sample code for calling convertVoiceToText on each platform.

Android (Java):

```java
// Get the V2TIMMessage object from VMS
V2TIMMessage msg = messageList.get(0);
if (msg.getElemType() == V2TIMMessage.V2TIM_ELEM_TYPE_SOUND) {
    // Retrieve the soundElem from V2TIMMessage
    V2TIMSoundElem soundElem = msg.getSoundElem();
    // Invoke speech-to-text conversion, using the Chinese-English recognition model by default
    soundElem.convertVoiceToText("", new V2TIMValueCallback<String>() {
        @Override
        public void onError(int code, String desc) {
            // TUIChatUtils, ToastUtil, callBack, and TAG are assumed to come from the surrounding TUIKit code
            TUIChatUtils.callbackOnError(callBack, TAG, code, desc);
            String str = "convertVoiceToText failed, code: " + code + " desc: " + desc;
            ToastUtil.show(str, true, 1);
        }

        @Override
        public void onSuccess(String result) {
            // If recognition is successful, 'result' will be the recognition result
            String str = "convertVoiceToText succeeded, result: " + result;
            ToastUtil.show(str, true, 1);
        }
    });
}
```

iOS and macOS (Objective-C):

```objectivec
// Get the V2TIMMessage object from VMS
V2TIMMessage *msg = messageList[0];
if (msg.elemType == V2TIM_ELEM_TYPE_SOUND) {
    // Retrieve the soundElem from V2TIMMessage
    V2TIMSoundElem *soundElem = msg.soundElem;
    // Invoke speech-to-text conversion, using the Chinese-English recognition model by default
    [soundElem convertVoiceToText:@"" completion:^(int code, NSString *desc, NSString *result) {
        // If recognition is successful, 'result' will be the recognition result
        NSLog(@"convertVoiceToText, code: %d, desc: %@, result: %@", code, desc, result);
    }];
}
```

Windows (C++):

```cpp
#include <functional>
#include <utility>

// Adapter that forwards the SDK callback to std::function handlers
template <class T>
class ValueCallback final : public V2TIMValueCallback<T> {
public:
    using SuccessCallback = std::function<void(const T&)>;
    using ErrorCallback = std::function<void(int, const V2TIMString&)>;

    ValueCallback() = default;
    ~ValueCallback() override = default;

    void SetCallback(SuccessCallback success_callback, ErrorCallback error_callback) {
        success_callback_ = std::move(success_callback);
        error_callback_ = std::move(error_callback);
    }

    void OnSuccess(const T& value) override {
        if (success_callback_) {
            success_callback_(value);
        }
    }

    void OnError(int error_code, const V2TIMString& error_message) override {
        if (error_callback_) {
            error_callback_(error_code, error_message);
        }
    }

private:
    SuccessCallback success_callback_;
    ErrorCallback error_callback_;
};

// Get the V2TIMMessage object from VMS
auto &message = messageList[0];
// Retrieve the first element from V2TIMMessage
V2TIMElem *elem = message.elemList[0];
if (elem->elemType == V2TIM_ELEM_TYPE_SOUND) {
    V2TIMSoundElem *sound_elem = static_cast<V2TIMSoundElem *>(elem);

    // Create the callback only when a conversion is requested; it deletes itself once invoked
    auto callback = new ValueCallback<V2TIMString>{};
    callback->SetCallback(
        [=](const V2TIMString& result) {
            // Speech-to-text conversion succeeded; 'result' is the conversion result
            delete callback;
        },
        [=](int error_code, const V2TIMString& error_message) {
            // Speech-to-text conversion failed
            delete callback;
        });

    // Invoke speech-to-text conversion, using the Chinese-English recognition model by default
    sound_elem->ConvertVoiceToText("", callback);
}
```
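If nested callbacks become awkward, the conversion call can be wrapped in a `CompletableFuture`. The sketch below is only an illustration of that adapter pattern: `ValueCallback` and `VoiceConverter` are hypothetical stand-ins that mirror the shape of `V2TIMValueCallback<String>` and of an object exposing `convertVoiceToText(language, callback)`, so the block runs without the SDK. On Android, `CompletableFuture` requires API level 24 or later.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical adapter, not part of the SDK.
final class VoiceToTextFuture {
    // Stand-in with the same shape as the SDK's V2TIMValueCallback<T>.
    interface ValueCallback<T> {
        void onSuccess(T result);
        void onError(int code, String desc);
    }

    // Stand-in for an object that exposes convertVoiceToText(language, callback).
    interface VoiceConverter {
        void convertVoiceToText(String language, ValueCallback<String> callback);
    }

    // Carries the error code and description reported by the callback.
    static final class ConversionException extends RuntimeException {
        final int code;

        ConversionException(int code, String desc) {
            super("convertVoiceToText failed, code: " + code + " desc: " + desc);
            this.code = code;
        }
    }

    // Starts a conversion and completes the returned future from the callback.
    static CompletableFuture<String> convert(VoiceConverter converter, String language) {
        CompletableFuture<String> future = new CompletableFuture<>();
        converter.convertVoiceToText(language, new ValueCallback<String>() {
            @Override
            public void onSuccess(String result) {
                future.complete(result);
            }

            @Override
            public void onError(int code, String desc) {
                future.completeExceptionally(new ConversionException(code, desc));
            }
        });
        return future;
    }

    public static void main(String[] args) {
        // A fake converter that succeeds immediately, used only for this demo.
        VoiceConverter fake = (language, callback) -> callback.onSuccess("hello");
        System.out.println(convert(fake, "").join()); // prints hello
    }
}
```

With the real SDK, the body of `convert` would call `soundElem.convertVoiceToText` with a `V2TIMValueCallback<String>` in place of the stand-in types.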

Language Support

The currently supported target languages for recognition are as follows:

| Supported Language | Input Parameter Setting |
| --- | --- |
| Mandarin Chinese | "zh (cmn-Hans-CN)" |
| Cantonese Chinese | "yue-Hant-HK" |
| English | "en-US" |
| Japanese (Japan) | "ja-JP" |
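One way to choose the `language` argument is sketched below: a value from the table is passed through unchanged, and anything else falls back to the empty string, which selects the default Chinese-English model. The class name `RecognitionLanguage` and the fallback policy are assumptions made for illustration. Your app may prefer to reject unsupported languages instead.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Hypothetical helper, not part of the SDK.
final class RecognitionLanguage {
    // An empty string selects the default Chinese-English model.
    static final String DEFAULT_CHINESE_ENGLISH = "";

    // Parameter values copied from the Language Support table.
    private static final Set<String> SUPPORTED = Collections.unmodifiableSet(
            new HashSet<>(Arrays.asList("zh (cmn-Hans-CN)", "yue-Hant-HK", "en-US", "ja-JP")));

    // Returns the requested value if it is listed as supported,
    // otherwise the default Chinese-English setting.
    static String resolve(String requested) {
        if (requested != null && SUPPORTED.contains(requested)) {
            return requested;
        }
        return DEFAULT_CHINESE_ENGLISH;
    }

    public static void main(String[] args) {
        System.out.println("[" + resolve("ja-JP") + "]"); // prints [ja-JP]
        System.out.println("[" + resolve("fr-FR") + "]"); // prints []
    }
}
```

The resolved value can then be passed as the first argument of `convertVoiceToText`.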
