Low-Latency Live Streaming Based on Upgraded WebRTC (CSS)

1. Live Event Broadcasting (LEB) Overview

The rapid growth of the live streaming industry has given rise to many low-latency live streaming scenarios, notably live shopping and online education. These use cases require real-time audio/video interaction, which traditional HLS- and FLV/RTMP-based live streaming cannot support well because their latency is several seconds. Live Event Broadcasting (LEB) therefore adopts WebRTC to deliver live streaming with millisecond-level latency. Besides live shopping and online education, LEB also suits other scenarios that need low-latency real-time interaction, such as sports and game live streaming.

2. WebRTC-Based LEB Scheme

After years of development, live streaming has settled into a standard pipeline. Streamers capture and encode audio/video on a PC or mobile phone and push the stream over RTMP to a cloud live streaming platform. The audio/video data is then transcoded and delivered to viewers' devices over the CDN via FLV or HLS. Along this pipeline, most of the latency comes from RTMP stream push, CDN delivery, buffering on the device, and decoding before playback. Traditional RTMP/FLV/HLS delivery runs over TCP, so data tends to pile up when the network is poor. Moreover, to ride out TCP network fluctuations, players usually buffer one to two GOPs to keep playback smooth.

WebRTC is built on RTP/RTCP and uses effective congestion control to keep latency low and performance high under poor network conditions, which is why it is widely used for real-time audio/video. Building on WebRTC, LEB rebuilds the stream-pull side of LVB to deliver low-latency live streaming that is highly compatible, cost-effective, and able to handle large audiences. The system reuses the cloud media processing capabilities of the existing live streaming architecture and makes both the live streaming access side and the CDN edge WebRTC-capable: the access side can receive WebRTC streams, and the CDN edge supports WebRTC negotiation and remuxed distribution in addition to its existing FLV/HLS distribution. As a result, LEB remains compatible with LVB's cloud media processing features, such as stream push, transcoding, recording, screenshot capture, and porn detection, while inheriting the strong edge distribution capability of the traditional CDN, which can support millions of concurrent online users. You can therefore migrate your business smoothly from the existing LVB platform to LEB to build low-latency live streaming applications.

LEB also uses WebRTC to achieve low latency across platforms. Most mainstream browsers, including Chrome and Safari, support WebRTC, so standard WebRTC playback is available directly in the browser. In addition, the mature open-source WebRTC SDK is easy to optimize and customize, which made it possible to build a customized SDK with enhanced low-latency streaming features.

3. WebRTC Upgrade and Extension

The audio/video codecs supported by standard WebRTC do not meet the needs of the live streaming industry. Standard WebRTC supports VP8/VP9 and H.264 for video and Opus for audio, whereas live streams are usually pushed as H.264/H.265 video with AAC audio. In addition, to keep communication latency low, standard WebRTC does not support B-frames, even though B-frame encoding is widely used in live streaming because it improves the compression ratio and saves bandwidth. Connecting standard WebRTC to an existing live streaming system therefore requires transcoding, which adds latency and cost. To avoid this, WebRTC must be extended to support AAC audio, H.265 video, and B-frames. The following sections describe these upgrades and extensions in LEB.

3.1 AAC-LC/AAC-HE/AAC-HEv2

WebRTC negotiates sessions using SDP. To support new features while remaining compatible with standard WebRTC, LEB defines extensions to the WebRTC SDP and its negotiation. To support AAC, the client SDK must add AAC encoding information to its SDP offer and implement an AAC decoder, while the backend must implement AAC negotiation as well as RTP packetization and delivery. For more information, see RFC 6416 and ISO/IEC 14496-3.

3.1.1 SDP offer

"MP4A-LATM" or "MP4A-ADTS" can be declared in an SDP offer to indicate support for pulling AAC streams. The following sample SDP offer declares AAC on two payload types: MP4A-LATM at a 48 kHz sample rate with two channels, and MP4A-LATM at a 44.1 kHz sample rate with two channels. The sample rate is given explicitly as the actual sample rate after extension. When an "rtpmap" line declares AAC, the backend gives priority to delivering the original AAC audio of the stream.

    ...
    a=rtpmap:111 opus/48000/2
    a=rtcp-fb:111 transport-cc
    a=fmtp:111 minptime=10;useinbandfec=1
    a=rtpmap:120 MP4A-LATM/48000/2
    a=rtcp-fb:120 transport-cc
    a=rtpmap:121 MP4A-LATM/44100/2
    a=rtcp-fb:121 transport-cc
    ...
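To show how a backend might pick out the AAC declarations from such an offer, here is a minimal sketch that parses "a=rtpmap" lines and matches the real stream's sample rate and channel count against them. The names `RtpMap`, `aac_payload_types`, and `match_aac_payload` are hypothetical illustrations, not part of LEB.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Encoding names this sketch treats as AAC (taken from the text above).
AAC_ENCODINGS = {"MP4A-LATM", "MP4A-ADTS"}


@dataclass(frozen=True)
class RtpMap:
    payload_type: int
    encoding: str
    clock_rate: int
    channels: int


def parse_rtpmaps(sdp: str) -> list[RtpMap]:
    """Collect every a=rtpmap line, in the order it appears in the SDP."""
    maps = []
    for line in sdp.splitlines():
        line = line.strip()
        if not line.startswith("a=rtpmap:"):
            continue
        pt, _, desc = line[len("a=rtpmap:"):].partition(" ")
        parts = desc.split("/")
        channels = int(parts[2]) if len(parts) > 2 else 1
        maps.append(RtpMap(int(pt), parts[0], int(parts[1]), channels))
    return maps


def aac_payload_types(sdp: str) -> list[RtpMap]:
    """Return only the rtpmap entries that declare AAC."""
    return [m for m in parse_rtpmaps(sdp) if m.encoding.upper() in AAC_ENCODINGS]


def match_aac_payload(sdp: str, sample_rate: int, channels: int) -> Optional[int]:
    """Pick the offered AAC payload type whose rate/channels match the real stream."""
    for m in aac_payload_types(sdp):
        if m.clock_rate == sample_rate and m.channels == channels:
            return m.payload_type
    return None  # no AAC entry fits this stream
```

With the sample offer above, `aac_payload_types` returns payload types 120 and 121, and a 44.1 kHz stereo source matches payload type 121.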

3.1.2 SDP answer

The backend can negotiate the SDP answer in one of two ways.

In async origin-pull mode, the SDP answer is sent before the stream is pulled from the origin. Because the actual audio format has not been parsed yet, the answer usually copies the audio format information from the SDP offer back to the client. In practice, audio RTP packets are then delivered using the actual encoding format of the pushed stream and its negotiated payload type. In this case, each AAC frame must carry the "AudioSpecificConfig" header information, which is called in-band transfer.

In sync origin-pull mode, the SDP answer is sent after the bitstream has been parsed. The actual AAC sample rate is parsed to select the matching "rtpmap", and an "fmtp" line is added to describe the AAC format in detail. Below is a sample for AAC-HE at 44.1 kHz with two channels:

    ...
    a=rtpmap:121 MP4A-LATM/44100/2
    a=rtcp-fb:121 nack
    a=rtcp-fb:121 transport-cc
    a=fmtp:121 SBR-enabled=1;config=40005724101fe0;cpresent=0;object=2;profile-level-id=1
    ...

Here, "SBR-enabled=1" indicates that spectral band replication (SBR) is enabled, that is, the stream is AAC-HE, while "SBR-enabled=0" means it is AAC-LC. "config" carries the hex-encoded "StreamMuxConfig", from which the detailed AAC format can be parsed (see ISO/IEC 14496-3). "cpresent=0" indicates out-of-band transfer: the AAC header information appears only in the SDP, not in the RTP stream. "cpresent=1" indicates in-band transfer: the header information is not in the SDP, and the "AudioSpecificConfig" header must be included with each AAC frame. Because AAC-HEv2 is AAC-HE plus parametric stereo (PS), it is signaled with both "SBR-enabled=1" and "PS-enabled=1". Finally, if you need AAC in ADTS format, replace "MP4A-LATM" with "MP4A-ADTS"; with in-band transfer, each frame then carries an ADTS header instead of the AudioSpecificConfig (ASC) header.
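To make the "config" field concrete, the sketch below decodes the leading fields of a StreamMuxConfig as laid out in ISO/IEC 14496-3. It assumes audioMuxVersion 0, a single program and layer, and no escaped object types (31); `parse_stream_mux_config` is a hypothetical helper, not LEB's parser. Decoding the sample value `40005724101fe0` yields explicitly signaled HE-AAC (object type 5 followed by core object type 2) with a 22.05 kHz core rate, a 44.1 kHz output rate, and two channels, which is consistent with the AAC-HE/44.1 kHz/dual-channel answer above.

```python
from __future__ import annotations

# samplingFrequencyIndex table from ISO/IEC 14496-3 (indices 0-12)
SAMPLE_RATES = [96000, 88200, 64000, 48000, 44100, 32000, 24000, 22050,
                16000, 12000, 11025, 8000, 7350]


class BitReader:
    """Read big-endian bit fields from a byte string."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def read(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos // 8]
            value = (value << 1) | ((byte >> (7 - self.pos % 8)) & 1)
            self.pos += 1
        return value


def read_sample_rate(r: BitReader) -> int:
    index = r.read(4)
    if index == 0xF:  # escape: explicit 24-bit frequency follows
        return r.read(24)
    return SAMPLE_RATES[index]


def parse_stream_mux_config(config_hex: str) -> dict:
    """Decode the AudioSpecificConfig embedded in an LATM StreamMuxConfig."""
    r = BitReader(bytes.fromhex(config_hex))
    if r.read(1) != 0:
        raise ValueError("only audioMuxVersion 0 is handled in this sketch")
    r.read(1)  # allStreamsSameTimeFraming
    r.read(6)  # numSubFrames
    r.read(4)  # numProgram (assumed 0, i.e. one program)
    r.read(3)  # numLayer (assumed 0, i.e. one layer)
    aot = r.read(5)  # audioObjectType
    core_rate = read_sample_rate(r)
    info = {"object_type": aot, "core_sample_rate": core_rate,
            "output_sample_rate": core_rate, "channels": r.read(4),
            "sbr": False, "ps": False}
    if aot in (5, 29):  # explicit SBR (HE-AAC) or SBR+PS (HE-AACv2) signaling
        info["sbr"] = True
        info["ps"] = aot == 29
        info["output_sample_rate"] = read_sample_rate(r)  # extension rate
        info["object_type"] = r.read(5)  # underlying core object type
    return info
```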

3.2 H.265

To support H.265, the SDK must add H.265 information to its SDP offer and implement handling of fragmented H.265 RTP packets, parsing, frame assembly, and decoding, while the backend must implement H.265 negotiation as well as RTP packetization and delivery. During negotiation, if the SDK's SDP offer lists both H.264 and H.265, the backend delivers the pushed video stream in its original encoding. If the player supports only H.264 but the pushed stream is H.265, the backend must transcode the video to H.264. Below is a sample SDP offer:

    ...
    a=rtpmap:98 H264/90000
    a=rtcp-fb:98 goog-remb
    a=rtcp-fb:98 nack
    a=rtcp-fb:98 nack pli
    a=rtcp-fb:98 transport-cc
    a=fmtp:98 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42e01f
    a=rtpmap:100 H265/90000
    a=rtcp-fb:100 goog-remb
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 nack pli
    a=rtcp-fb:100 transport-cc
    ...
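The negotiation rule above can be sketched as a small decision function. This is a minimal illustration under stated assumptions: codec names are read from "a=rtpmap" lines with a 90 kHz clock, auxiliary formats such as rtx/red/ulpfec are skipped, and `offered_video_codecs` and `choose_video_delivery` are hypothetical names, not LEB's API.

```python
from __future__ import annotations

# Auxiliary RTP formats that are not real video codecs.
AUXILIARY = {"RTX", "RED", "ULPFEC", "FLEXFEC-03"}


def offered_video_codecs(sdp: str) -> list[str]:
    """Return codec names from a=rtpmap lines that use the 90 kHz video clock."""
    codecs = []
    for line in sdp.splitlines():
        line = line.strip()
        if not line.startswith("a=rtpmap:"):
            continue
        _, _, desc = line.partition(" ")
        name, _, rest = desc.partition("/")
        name = name.upper()
        if rest.startswith("90000") and name not in AUXILIARY and name not in codecs:
            codecs.append(name)
    return codecs


def choose_video_delivery(offered: list[str], pushed: str) -> tuple[str, bool]:
    """Return (codec to deliver, whether the backend must transcode)."""
    pushed = pushed.upper()
    if pushed in offered:
        return pushed, False  # player can decode the original stream
    if "H264" in offered:
        return "H264", True   # e.g. H.265 push, H.264-only player
    raise ValueError(f"no usable video codec for pushed {pushed}")
```

For the sample offer, an H.265 push is delivered as-is, while a player that offers only H.264 gets a transcoded H.264 stream.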

3.3 H.264/H.265 B-frame

The client SDK must add B-frame information to its SDP offer and handle timestamps that do not increase monotonically, while the backend must implement timestamp encapsulation for B-frames.

3.3.1 SDP B-frame negotiation

The "bframe-enabled=1" parameter in the SDP "fmtp" line indicates B-frame support, in which case the backend delivers the video data of the original stream. With "bframe-enabled=0", the backend transcodes the stream to remove B-frames. Below is a sample SDP offer:

    ...
    a=fmtp:98 bframe-enabled=1;level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42e01f
    ...
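A minimal sketch of how the backend might read this flag from the "fmtp" line. It assumes that a missing "bframe-enabled" parameter is treated like "bframe-enabled=0", and that a pushed stream without B-frames never needs transcoding; both are assumptions, and `parse_fmtp` and `bframe_delivery` are hypothetical names.

```python
from __future__ import annotations


def parse_fmtp(sdp: str) -> dict[int, dict[str, str]]:
    """Map each payload type to the key/value parameters of its a=fmtp line."""
    result: dict[int, dict[str, str]] = {}
    for line in sdp.splitlines():
        line = line.strip()
        if not line.startswith("a=fmtp:"):
            continue
        pt, _, params = line[len("a=fmtp:"):].partition(" ")
        pairs = {}
        for item in params.split(";"):
            key, sep, value = item.strip().partition("=")
            if key:
                pairs[key] = value if sep else ""
        result[int(pt)] = pairs
    return result


def bframe_delivery(sdp: str, payload_type: int, stream_has_bframes: bool) -> str:
    """Return "original" or "transcode" for the negotiated video payload type."""
    if not stream_has_bframes:
        return "original"  # assumption: nothing to remove
    params = parse_fmtp(sdp).get(payload_type, {})
    # Assumption: an absent flag behaves like bframe-enabled=0.
    if params.get("bframe-enabled") == "1":
        return "original"
    return "transcode"
```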

3.3.2 B-frame's timestamp

RTP encapsulation is the same for I-frames, P-frames, and B-frames, but with B-frames the PTS no longer increases monotonically, so timestamps need separate handling. In the original stream, PTS = DTS + CTS. LEB supports two ways of carrying PTS and DTS. In the first, the RTP timestamp carries the PTS, and the client simply decodes frames in the order they arrive. In the second, the RTP timestamp carries the DTS, the CTS is sent to the client in an RTP header extension, and the client computes the PTS after parsing it. Below is a sample SDP extmap:

    ...
    a=extmap:7 rtp-hrdext:video:CompistionTime
    ...

The two ways are compatible: when the SDP offer contains the "extmap rtp-hrdext" field, the second way is used; otherwise, the first way is used.
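The two timestamp modes can be sketched as follows. Frames are listed in decode order with 90 kHz timestamps; the I P B B group at 25 fps (3600 ticks per frame) is an invented example. `uses_cts_extension` applies the rule stated above, and since the text does not describe the extension's wire format, the CTS is simply returned as an integer. All names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

RTP_TS_MASK = 0xFFFFFFFF  # RTP timestamps are 32-bit and wrap around


@dataclass(frozen=True)
class Frame:
    kind: str  # "I", "P" or "B"
    dts: int   # decode timestamp, 90 kHz ticks
    cts: int   # composition time offset, so PTS = DTS + CTS

    @property
    def pts(self) -> int:
        return self.dts + self.cts


def uses_cts_extension(sdp: str) -> bool:
    """Second way if an extmap line mentions rtp-hrdext, else the first way."""
    return any(line.strip().startswith("a=extmap:") and "rtp-hrdext" in line
               for line in sdp.splitlines())


def rtp_timestamps(frames: list[Frame], cts_ext: bool) -> list[tuple[int, Optional[int]]]:
    """Sender side: (RTP timestamp, CTS for the header extension or None)."""
    if cts_ext:
        return [(f.dts & RTP_TS_MASK, f.cts) for f in frames]
    return [(f.pts & RTP_TS_MASK, None) for f in frames]


def client_pts(rtp_ts: int, cts: Optional[int]) -> int:
    """Receiver side: recover the presentation timestamp."""
    if cts is None:
        return rtp_ts  # first way: the RTP timestamp already is the PTS
    return (rtp_ts + cts) & RTP_TS_MASK  # second way: PTS = DTS + CTS


# Decode order I, P, B, B; display order I, B, B, P.
gop = [Frame("I", 0, 3600), Frame("P", 3600, 10800),
       Frame("B", 7200, 0), Frame("B", 10800, 0)]
```

In the first way, the RTP timestamps of `gop` are 3600, 14400, 7200, 10800, which is not monotonic. In the second way they are the monotonic DTS values 0, 3600, 7200, 10800, and the client recovers the same PTS values by adding the CTS.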

3.4 Unencrypted transfer

Standard WebRTC was designed for audio/video communication, so encryption is mandatory. In live streaming, however, many streams are public and do not need encryption. Without encryption, the latency of the DTLS handshake at stream start is eliminated, and the encryption/decryption cost on both the backend and the client is saved.

3.4.1 Encrypted SDP offer

    ...
    m=audio 9 UDP/TLS/RTP/SAVPF 111 120 121 110
    ...
    m=video 9 UDP/TLS/RTP/SAVPF 96 97 98 99 100
    ...

Here, "UDP/TLS/RTP/SAVPF" specifies the audio/video transport: RTP packets are carried over UDP and encryption is set up with TLS over UDP, that is, DTLS. "SAVPF" denotes the secure audio/video profile with RTCP-based feedback, so both RTP and the RTCP feedback are encrypted (SRTP/SRTCP).

3.4.2 Unencrypted SDP offer

    ...
    m=audio 9 RTP/AVPF 111 120 121 110
    ...
    m=video 9 RTP/AVPF 96 97 98 99 100
    ...

Here, "RTP/AVPF" indicates that RTP and RTCP are sent unencrypted. The SDK has a dedicated encryption switch API for generating either encrypted or unencrypted SDP, and the backend supports both encrypted and unencrypted delivery.
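The difference between the two offers is confined to the protocol field of each "m=" line, which the sketch below switches. The text does not name the SDK's actual encryption switch API, so `set_media_encryption` is a hypothetical stand-in. It deliberately leaves DTLS-related attributes such as "a=fingerprint" and "a=setup" untouched; a real unencrypted offer may need to handle them. Note also that standard browser WebRTC stacks require DTLS-SRTP, so an unencrypted offer like this only makes sense with a customized SDK.

```python
from __future__ import annotations

ENCRYPTED = "UDP/TLS/RTP/SAVPF"
PLAIN = "RTP/AVPF"


def set_media_encryption(sdp: str, encrypted: bool) -> str:
    """Rewrite the <proto> field of every m= line to the requested profile."""
    src, dst = (PLAIN, ENCRYPTED) if encrypted else (ENCRYPTED, PLAIN)
    lines = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...
            parts = line.split(" ")
            if len(parts) > 2 and parts[2] == src:
                parts[2] = dst
                line = " ".join(parts)
        lines.append(line)
    return "\r\n".join(lines) + "\r\n"  # SDP lines end with CRLF
```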

3.5 Private data passthrough

3.5.1 SEI passthrough

In many scenarios, data other than audio and video must be synchronized with the media, such as whiteboard content in online education or comments and interactions in live shopping. Timestamped SEI NAL units are a good fit for this. The backend passes the SEI data through unchanged, and the SDK parses it and delivers it to the application layer through a callback.
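The text does not say which SEI payload type LEB uses, so the following is only a sketch that assumes H.264 "user_data_unregistered" SEI (payloadType 5: a 16-byte UUID followed by application data), a common choice for custom data. It splits an Annex B byte stream, removes emulation-prevention bytes, and extracts the payloads an SDK could hand to an application callback. H.265 SEI uses a different NAL header and is not handled; all function names are hypothetical.

```python
from __future__ import annotations


def split_annexb(stream: bytes) -> list[bytes]:
    """Split an H.264 Annex B byte stream into NAL units (start codes removed)."""
    starts = []
    i = stream.find(b"\x00\x00\x01")
    while i >= 0:
        starts.append(i + 3)
        i = stream.find(b"\x00\x00\x01", i + 3)
    nals = []
    for k, start in enumerate(starts):
        end = starts[k + 1] - 3 if k + 1 < len(starts) else len(stream)
        # A 4-byte start code (00 00 00 01) leaves a trailing zero behind.
        nals.append(stream[start:end].rstrip(b"\x00"))
    return nals


def unescape_rbsp(data: bytes) -> bytes:
    """Drop emulation-prevention bytes (the 03 in 00 00 03)."""
    out = bytearray()
    zeros = 0
    for b in data:
        if zeros >= 2 and b == 0x03:
            zeros = 0
            continue
        out.append(b)
        zeros = zeros + 1 if b == 0 else 0
    return bytes(out)


def read_sei_value(rbsp: bytes, pos: int) -> tuple[int, int]:
    """Read an SEI payloadType/payloadSize coded as 0xFF runs plus a final byte."""
    value = 0
    while rbsp[pos] == 0xFF:
        value += 255
        pos += 1
    return value + rbsp[pos], pos + 1


def sei_user_data(nal: bytes) -> list[tuple[bytes, bytes]]:
    """Return (uuid, payload) for each user_data_unregistered message in an SEI NAL."""
    if not nal or nal[0] & 0x1F != 6:  # nal_unit_type 6 = SEI
        return []
    rbsp = unescape_rbsp(nal[1:])
    messages = []
    pos = 0
    while pos + 1 < len(rbsp):  # the last byte holds rbsp_trailing_bits (0x80)
        ptype, pos = read_sei_value(rbsp, pos)
        size, pos = read_sei_value(rbsp, pos)
        body = rbsp[pos:pos + size]
        pos += size
        if ptype == 5 and len(body) >= 16:
            messages.append((body[:16], body[16:]))
    return messages
```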

3.5.2 Metadata passthrough

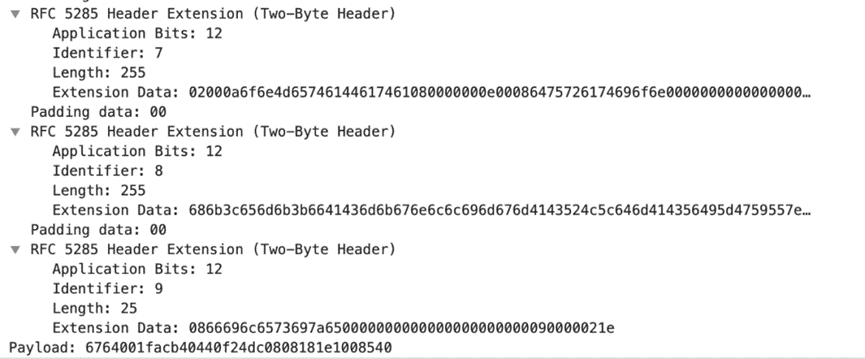

The backend uses RTP header extensions to pass through private media metadata from the container layer, making it easy to support custom scenarios that need data passthrough. The SDK parses the metadata and delivers it to the application layer through a callback. Below is a sample SDP offer:

    ...
    a=extmap:7 http://www.webrtc.org/experiments/rtp-hdrext/meta-data-01
    a=extmap:8 http://www.webrtc.org/experiments/rtp-hdrext/meta-data-02
    a=extmap:9 http://www.webrtc.org/experiments/rtp-hdrext/meta-data-03
    ...

The RTP header is extended as follows:
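The text does not spell out the extension's byte layout, so the sketch below assumes the standard RFC 8285 one-byte header form (profile 0xBEDE, IDs 1 to 14, 1 to 16 bytes per element) and reuses IDs 7 to 9 from the sample offer. It maps extmap IDs to URIs and packs and parses metadata elements; the metadata contents and all function names are hypothetical. Metadata longer than 16 bytes would need the two-byte header form, which is not shown.

```python
from __future__ import annotations

import struct


def parse_extmap(sdp: str) -> dict[int, str]:
    """Map extension IDs to URIs from a=extmap lines."""
    out = {}
    for line in sdp.splitlines():
        line = line.strip()
        if line.startswith("a=extmap:"):
            ident, _, uri = line[len("a=extmap:"):].partition(" ")
            out[int(ident.split("/")[0])] = uri.split(" ")[0]
    return out


def build_one_byte_extensions(elements: dict[int, bytes]) -> bytes:
    """Pack elements into an RFC 8285 one-byte-header extension block."""
    body = bytearray()
    for ext_id, value in elements.items():
        if not 1 <= ext_id <= 14 or not 1 <= len(value) <= 16:
            raise ValueError("one-byte form allows IDs 1-14 and 1-16 byte values")
        body.append((ext_id << 4) | (len(value) - 1))  # ID (4 bits), length-1 (4 bits)
        body += value
    while len(body) % 4:
        body.append(0)  # pad to a 32-bit boundary
    return struct.pack("!HH", 0xBEDE, len(body) // 4) + bytes(body)


def parse_one_byte_extensions(block: bytes) -> dict[int, bytes]:
    """Parse an RFC 8285 one-byte-header extension block back into elements."""
    profile, length_words = struct.unpack("!HH", block[:4])
    if profile != 0xBEDE:
        raise ValueError("not a one-byte-header extension block")
    data = block[4:4 + 4 * length_words]
    elements = {}
    pos = 0
    while pos < len(data):
        header = data[pos]
        if header == 0:  # padding byte
            pos += 1
            continue
        ext_id = header >> 4
        if ext_id == 15:  # reserved ID: stop parsing
            break
        size = (header & 0x0F) + 1
        elements[ext_id] = data[pos + 1:pos + 1 + size]
        pos += 1 + size
    return elements
```

A receiver would parse the block from each RTP packet and use the `parse_extmap` result to tell which "meta-data-0N" URI each element belongs to before passing it to the application.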

4. LEB SDK and Demo

The LEB SDK is a customized extension of the native WebRTC. Beyond standard WebRTC, it supports: a) AAC decoding and playback, including AAC-LC, AAC-HE, and AAC-HEv2; b) H.265 decoding and playback, with both software and hardware decoding; c) B-frame decoding for H.264 and H.265; d) SEI callbacks; e) disabling encryption; f) screenshot capture, rotation, and zooming. The LEB SDK also optimizes native WebRTC performance, including first-frame latency, frame synchronization, audio/video synchronization, the jitter buffer, and NACK policies. Modules unrelated to stream pull and playback are removed, so the packaged SDK is about 5 MB, and it supports both ARM64 and ARM32. To ease integration, a complete SDK and demo are provided. The web demo shows how to pull streams with standard WebRTC in the browser, and the Android and iOS packages include the stream pull and playback SDK, a demo, and integration documentation.

4.1 Demo for web

http://webrtc-demo.tcdnlive.com/httpDemo.html

Scan the QR code to open the demo for web.

4.2 SDK and demo for Android

https://github.com/tencentyun/leb-android-sdk

Scan the QR code to open the SDK and demo for Android.

4.3 SDK and demo for iOS

https://github.com/tencentyun/leb-ios-sdk/

Scan the QR code to open the SDK and demo for iOS.

5. Summary and Outlook

LEB integrates the stream push capability of LVB and makes CDN edge nodes WebRTC-capable, bringing millisecond-level latency to live streaming. It also upgrades and extends standard WebRTC to work with the mainstream audio/video formats used for live stream push in the Chinese mainland. LEB is already used within Tencent for online education and Tencent Penguin eSports, and leading live streaming, education, and e-commerce companies in the Chinese mainland are also adopting it. Going forward, LEB will adapt further to customer needs and use WebRTC to improve upstream push quality. The aim is to provide more stable live streaming at lower latency with richer real-time interaction, contributing to a new era for live streaming.