Customer challenges:

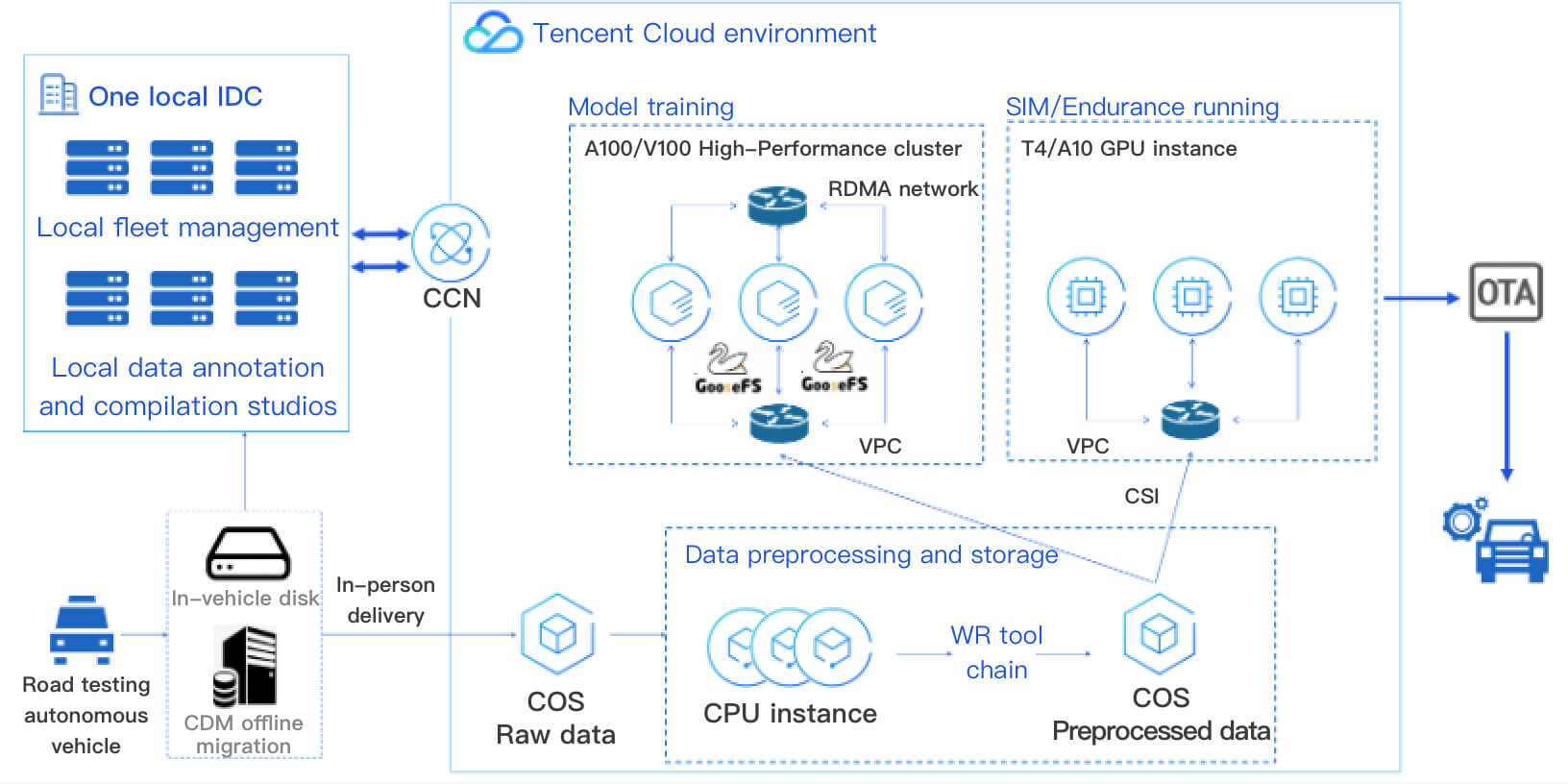

To deliver a highly immersive and vivid experience, virtual worlds rely on powerful computing for rendering and other demanding workloads. However, most mobile phones and other end-user devices lack the hardware performance to sustain intensive rendering. In addition, software packages that bundle the required rendering engines and other assets often reach gigabytes in size, taking up a large share of the storage on the user's device.

Solution:

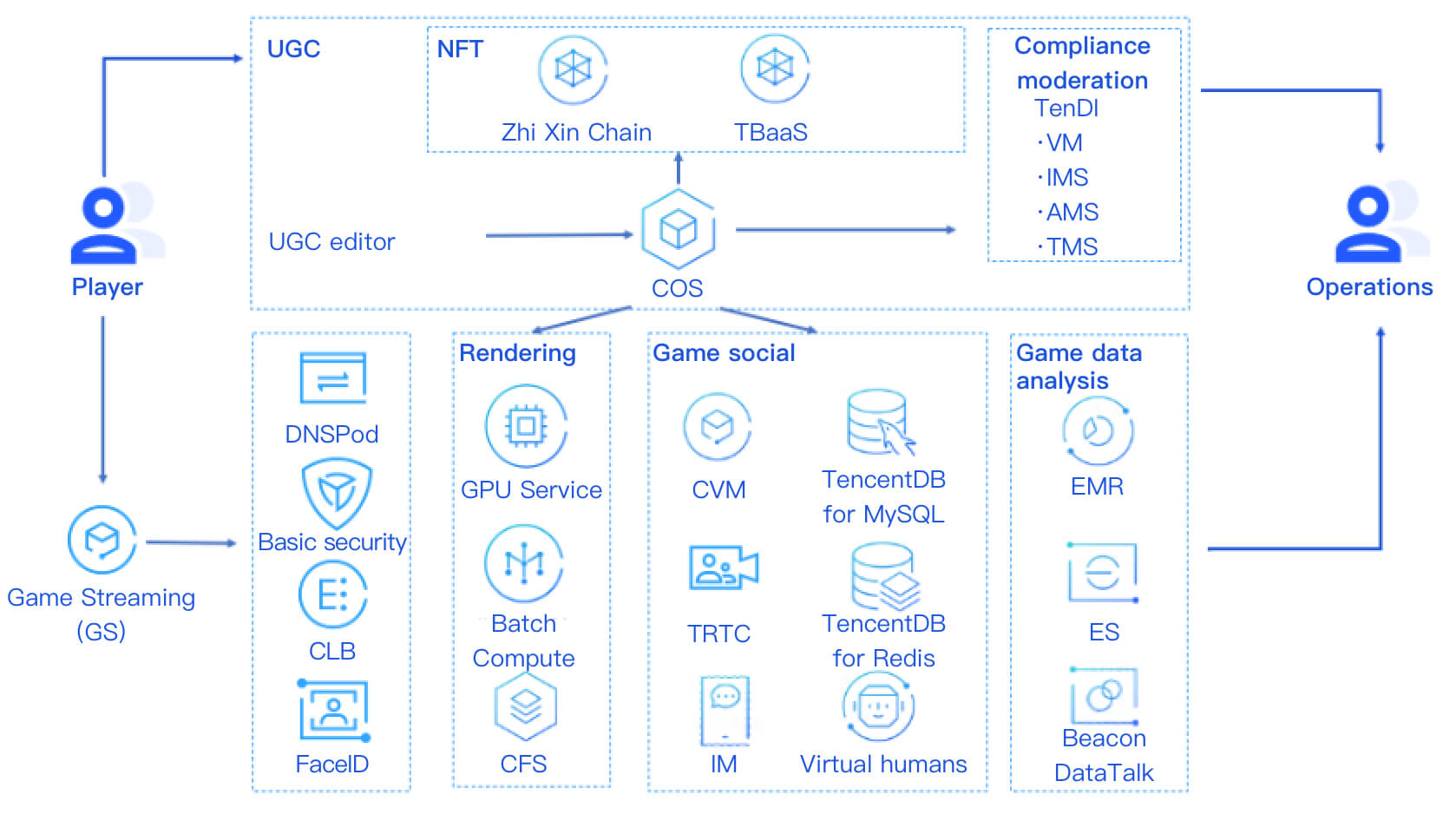

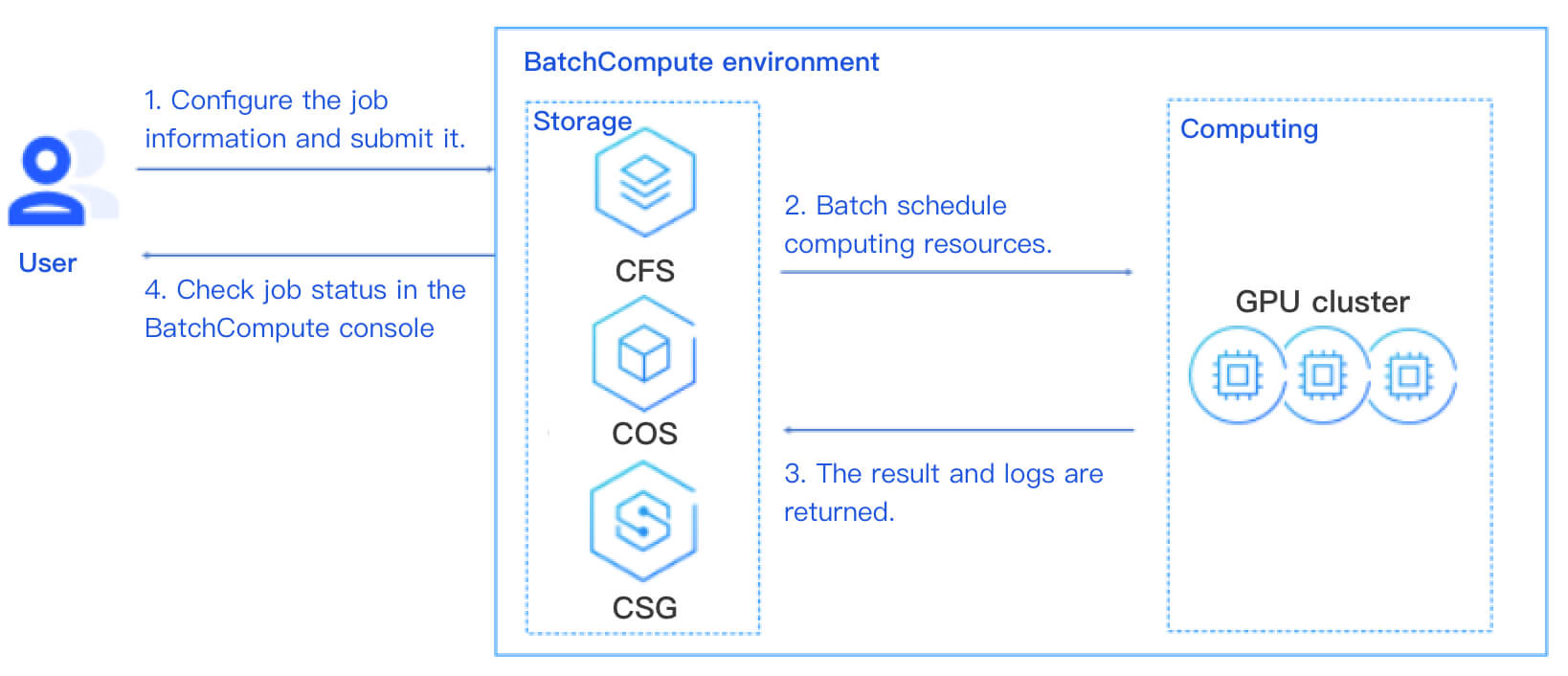

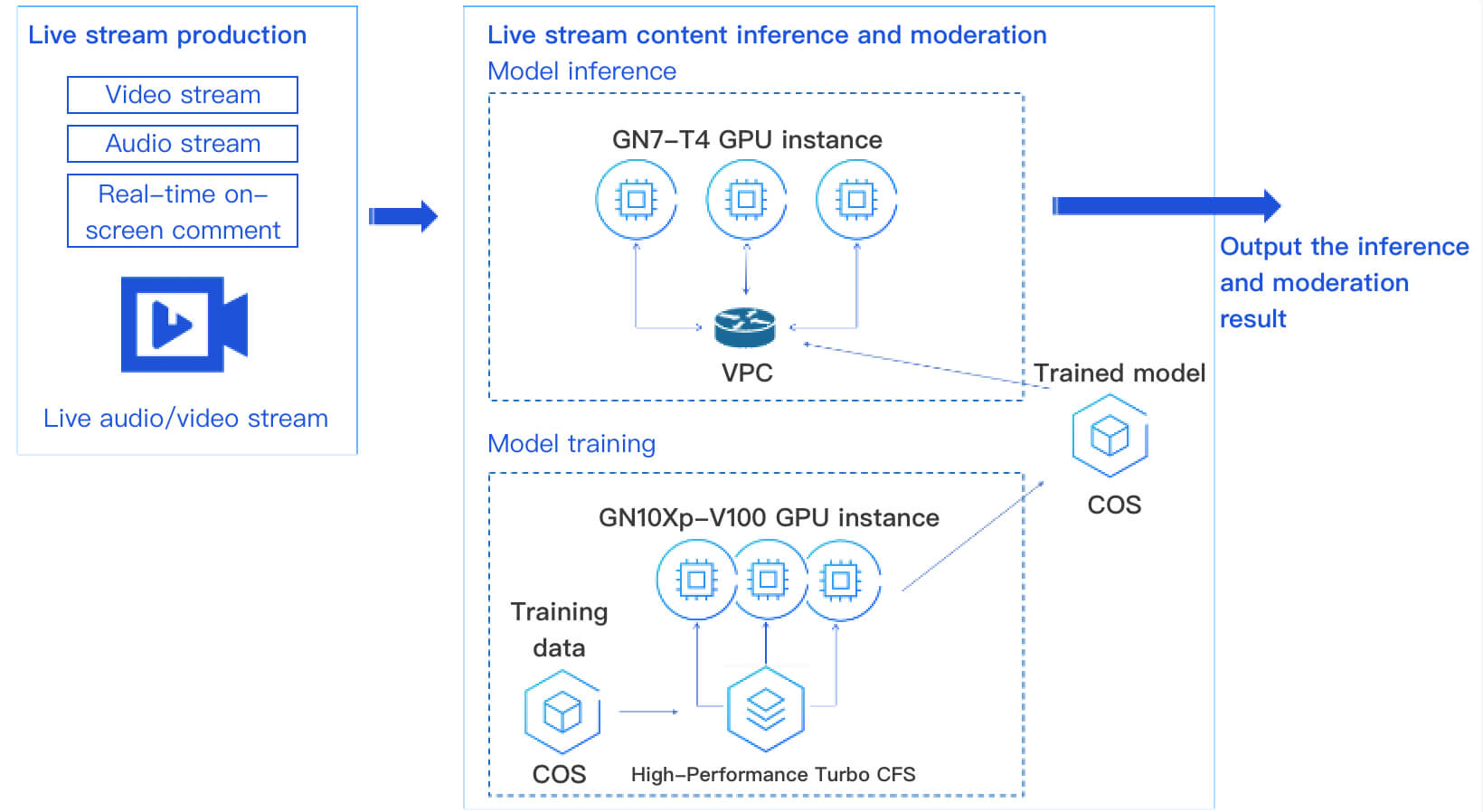

Built on Tencent Cloud's GPU computing power, this solution integrates enterprise-grade rendering, qGPU-based container-level resource splitting and virtualization, video encoding and decoding, and cloud streaming. Rendering runs in the cloud, so end users only need a network connection to access high-performance rendering. This frees up compute and storage on end-user devices and delivers a smooth, integrated cloud-edge experience.

Benefits of cloud deployment:

The cloud-native architecture supports canary releases and rapid business launches. Elastic scaling lets you schedule large amounts of resources, so your business can easily scale up for peak hours and back down for off-peak hours. Combined with qGPU sharing technology, which isolates both GPU computing power and VRAM between containers, the solution can significantly increase GPU utilization and reduce enterprise costs.
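To make the utilization claim concrete, here is a back-of-the-envelope sketch comparing one dedicated GPU per rendering session with packing several isolated sessions onto each GPU. All figures (30% compute and 6 GiB VRAM per session, 24 GiB GPUs, 40 concurrent sessions) are hypothetical, and the helper names are illustrative only. The model assumes compute can be sliced in whole percentage points and ignores encoding overhead and scheduling effects; it does not describe qGPU's actual allocation granularity or API.

```python
import math


def sessions_per_gpu(compute_pct: int, vram_gib: int, gpu_vram_gib: int) -> int:
    """Hypothetical model: isolated sessions that fit on one GPU.

    The limit is whichever resource runs out first: compute share or VRAM.
    """
    by_compute = 100 // compute_pct
    by_vram = gpu_vram_gib // vram_gib
    return min(by_compute, by_vram)


def gpus_needed(sessions: int, compute_pct: int, vram_gib: int,
                gpu_vram_gib: int, shared: bool) -> int:
    """GPUs required with sharing (packed) or without it (one GPU per session)."""
    per_gpu = sessions_per_gpu(compute_pct, vram_gib, gpu_vram_gib) if shared else 1
    return math.ceil(sessions / per_gpu)


def compute_utilization(sessions: int, compute_pct: int, gpus: int) -> float:
    """Fraction of total GPU compute actually used by the sessions."""
    return sessions * compute_pct / (100 * gpus)


if __name__ == "__main__":
    # Hypothetical workload, for illustration only.
    sessions, compute_pct, vram_gib, gpu_vram_gib = 40, 30, 6, 24
    for shared in (False, True):
        gpus = gpus_needed(sessions, compute_pct, vram_gib, gpu_vram_gib, shared)
        util = compute_utilization(sessions, compute_pct, gpus)
        label = "shared" if shared else "dedicated"
        print(f"{label}: {gpus} GPUs, {util:.0%} compute utilization")
```

Under these assumed numbers, compute becomes the binding limit at three sessions per GPU, so the same 40 sessions need 14 shared GPUs instead of 40 dedicated ones. Real savings depend on the actual workload profile and on how the isolation layer allocates resources.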

The new-generation Cloud GPU Service provides high-density encoding capacity and higher network performance. Built in partnership with NVIDIA, it is China's first one-stop CloudXR solution and delivers a smooth, lightweight user experience for VR applications.