Overview

Registered nodes (third-party nodes) are an upgraded node type of Tencent Kubernetes Engine (TKE) for hybrid cloud deployment. They allow users to host non-Tencent Cloud servers in a TKE cluster: users provide the computing resources, while TKE is responsible for the lifecycle management of the cluster.

Note:

Registered nodes are available in two versions: the Direct Connect (DC) version, which connects through DC and Cloud Connect Network (CCN), and the public network version, which connects through the Internet. Users can choose the version that suits their scenario.

Use Cases

Resource Reuse

An enterprise that needs to migrate to the cloud may already have invested in local data centers and own server resources (CPU and GPU) in its Internet data center (IDC). With registered nodes, users can add these IDC resources to a TKE cluster so that the existing servers remain in effective use during the migration.

Cluster Hosting and OPS

Tencent Cloud handles the OPS and control of the Kubernetes cluster in a unified way, which removes the cost of deploying and operating Kubernetes locally. Users only need to maintain their local servers.

Hybrid Deployment Scheduling

Both registered nodes and Tencent Cloud Cloud Virtual Machine (CVM) nodes can be simultaneously scheduled within a single cluster, facilitating the expansion of IDC services to CVM without the need to introduce multi-cluster management.

Seamless Integration with Cloud Services

Registered nodes seamlessly integrate with cloud-native services of Tencent Cloud, covering cloud-native capabilities such as logs, monitoring, auditing, storage, and container security.

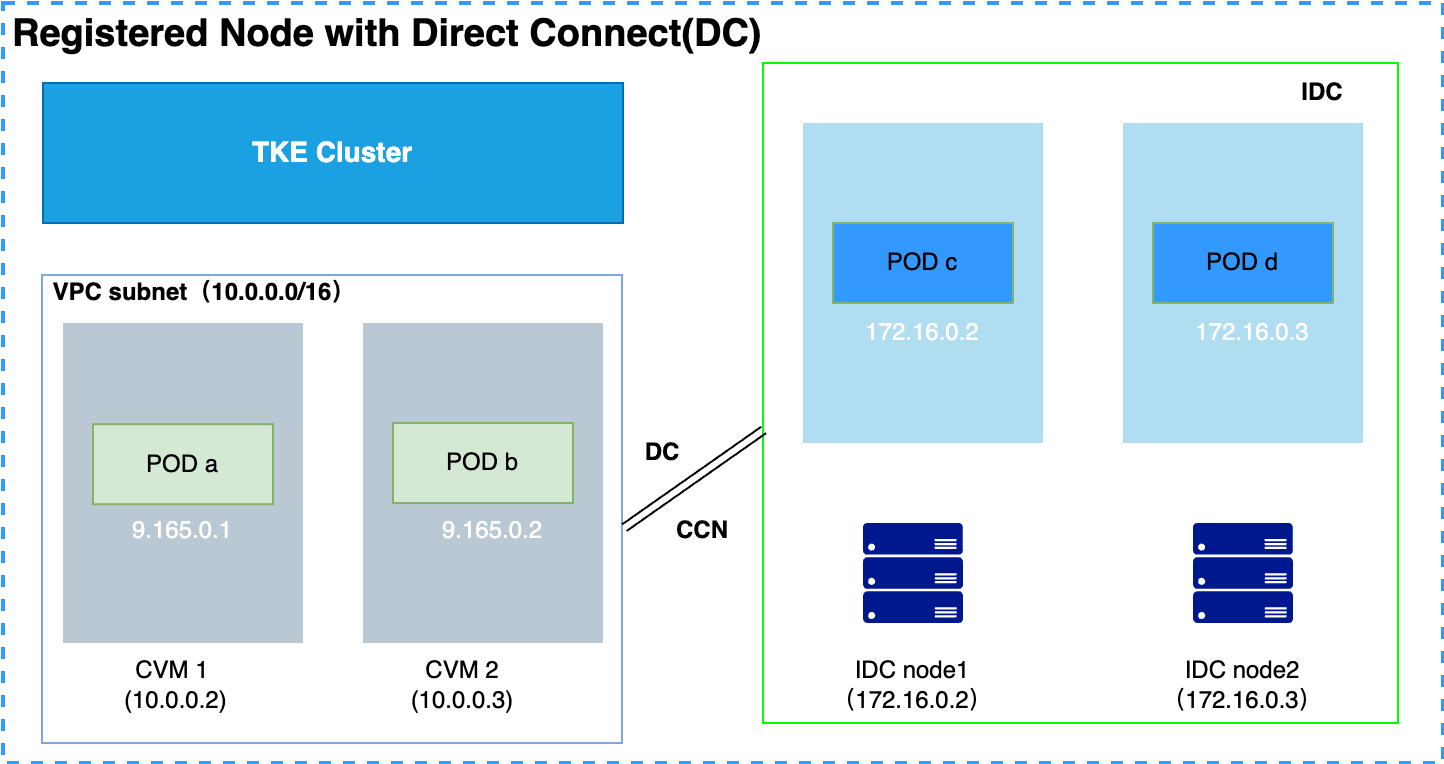

Registered Nodes with DC

Architecture

Users can connect their own IDC environment to Tencent Cloud Virtual Private Cloud (VPC) through DC and CCN, and then connect the IDC nodes to the TKE cluster through the private network, achieving unified management of IDC nodes and cloud-based CVM nodes. The architecture diagram is as follows:

Constraints

To ensure the stability of registered nodes, they can be connected only through DC or CCN. Virtual private network (VPN) connections are currently not supported.

Registered nodes must use TencentOS Server 3.1 or TencentOS Server 2.4 (TK4).

Graphics processing unit (GPU): Only NVIDIA GPUs are supported, including the Volta (such as V100), Turing (such as T4), and Ampere (such as A100 and A10) series.

The registered node feature is available only for TKE clusters of v1.18 or later, and the cluster must contain at least one CVM node.

For scenarios where CVM nodes and IDC nodes are deployed, the TKE team has launched a hybrid cloud container network scheme based on Cilium-Overlay.

Port Connectivity Configuration of Nodes

To ensure the connectivity between CVM nodes and IDC nodes in a hybrid cloud cluster, a series of ports need to be configured on the CVM nodes and IDC nodes.

CVM nodes: CVM nodes must use the security group settings that meet TKE requirements. If the TKE cluster uses the Cilium-Overlay network mode, additional security group rules need to be added.

Inbound rule

| Protocol | Port | Source | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |

Outbound rule

| Protocol | Port | Destination | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |

IDC nodes: Configure the node firewall to open the following ports.

Inbound rule

| Protocol | Port | Source | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |
| TCP | 10250 | VXLAN communication is allowed for cluster nodes. | Allow | API server communication is allowed. |

Outbound rule

| Protocol | Port | Destination | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |
| TCP | 80, 443, 9243, 10250, 60002 | VPC subnet CIDR with proxy | Allow | Tencent Cloud proxy communication is allowed. |
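
Once the rules above are in place, it can help to confirm from an IDC node that the outbound TCP ports are actually reachable. The following is a minimal sketch using only the Python standard library. The function name `probe_tcp_ports` and the example proxy address in the comment are assumptions made for illustration and are not part of TKE. The sketch covers TCP only: the VXLAN rule on UDP 8472 cannot be verified with a TCP connect.

```python
import socket

# TCP ports from the IDC outbound rule (Tencent Cloud proxy communication).
PROXY_TCP_PORTS = (80, 443, 9243, 10250, 60002)


def probe_tcp_ports(host, ports, timeout=3.0):
    """Return {port: bool}, True when a TCP connection to host:port succeeds."""
    results = {}
    for port in ports:
        try:
            # create_connection performs the full TCP handshake or raises OSError.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts, and unreachable hosts.
            results[port] = False
    return results


# Example call (hypothetical proxy address inside the VPC subnet):
# probe_tcp_ports("10.0.0.10", PROXY_TCP_PORTS)
```

A `False` entry only shows that the connection attempt failed from this node. The cause may be the local firewall, the security group, or the DC/CCN route, so each should be checked in turn.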

Network Mode

The pod network capabilities of IDC nodes depend on the network mode of the TKE cluster:

For clusters using the GlobalRouter or VPC-CNI exclusive ENI mode: Pods on IDC nodes can only use the hostNetwork network mode. In this mode, pods of IDC nodes can communicate with CVM nodes only through the host network.

For clusters using the Cilium-Overlay network mode: This is a container network solution specially designed by the TKE team for hybrid cloud scenarios. Both CVM pods and IDC pods are on the same overlay network plane and can communicate with each other.
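
The difference between the two cases can be illustrated with a small sketch that builds a pod manifest for an IDC node. The fields `hostNetwork`, `dnsPolicy`, and `nodeSelector` are standard Kubernetes pod spec fields. The label `example.com/node-type: idc`, the function name, and the mode strings used as keys are assumptions made for this example, so substitute whatever labels your registered nodes actually carry.

```python
import json

# Hypothetical label assumed to be set on registered (IDC) nodes by the operator.
IDC_NODE_LABEL = {"example.com/node-type": "idc"}

# Modes in which pods on IDC nodes can only use the host network.
HOST_NETWORK_ONLY_MODES = {"GlobalRouter", "VPC-CNI"}
OVERLAY_MODES = {"Cilium-Overlay"}


def idc_pod_manifest(name, image, network_mode):
    """Build a pod manifest pinned to IDC nodes for the given cluster network mode."""
    if network_mode not in HOST_NETWORK_ONLY_MODES | OVERLAY_MODES:
        raise ValueError(f"unknown network mode: {network_mode!r}")
    spec = {
        "nodeSelector": dict(IDC_NODE_LABEL),
        "containers": [{"name": name, "image": image}],
    }
    if network_mode in HOST_NETWORK_ONLY_MODES:
        # The pod shares the node's network namespace.
        spec["hostNetwork"] = True
        # Keeps cluster DNS resolution available to host-network pods.
        spec["dnsPolicy"] = "ClusterFirstWithHostNet"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": spec,
    }


def to_json(manifest):
    """Serialize the manifest to indented JSON."""
    return json.dumps(manifest, indent=2)
```

For a Cilium-Overlay cluster the function leaves `hostNetwork` unset, because CVM pods and IDC pods already share one overlay network plane.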

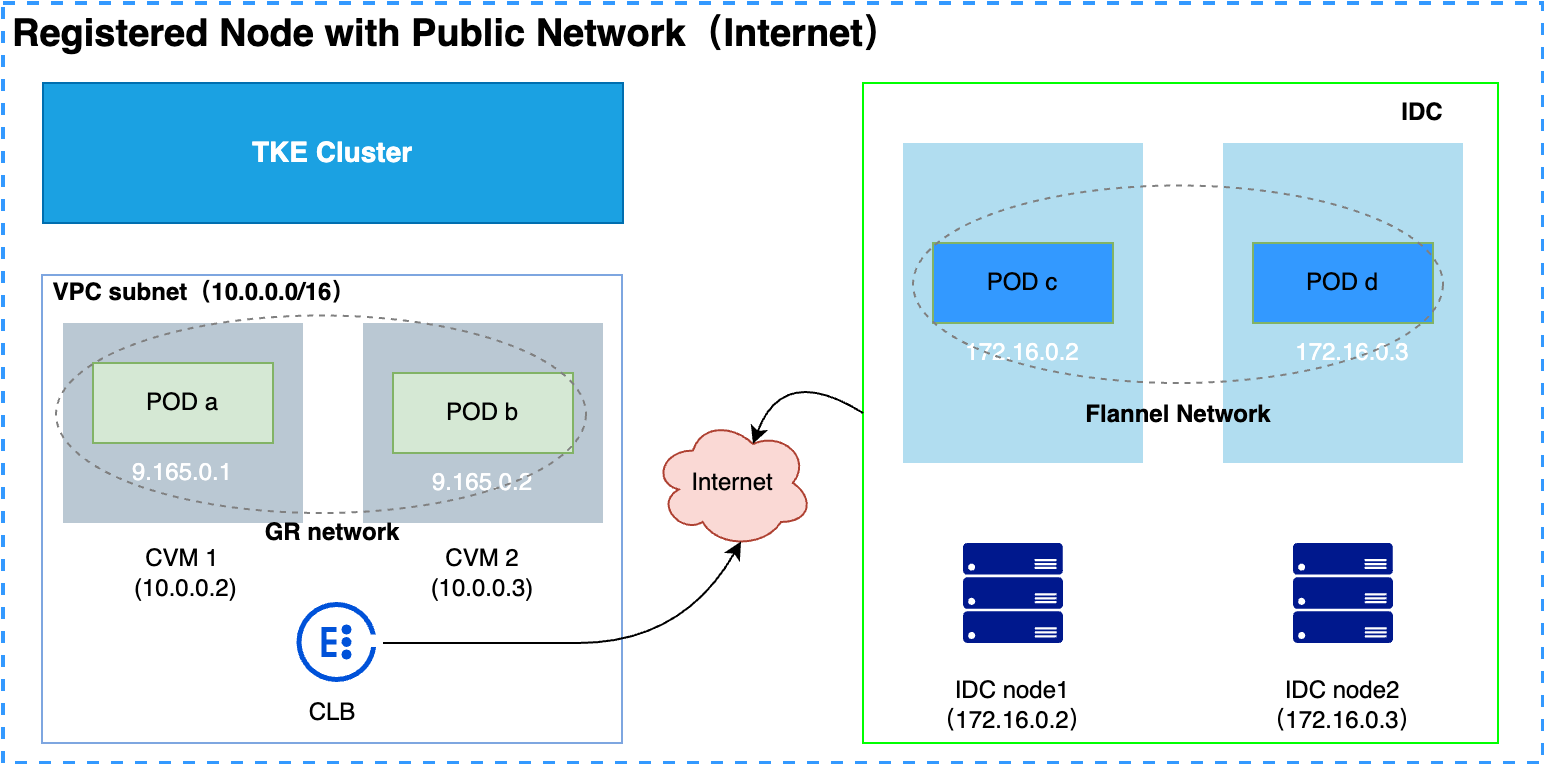

Registered Node with Public Network (Internet Version)

Architecture

If a user cannot establish DC between the IDC and Tencent Cloud but still wants to manage the IDC nodes through TKE to reduce the deployment and OPS cost of Kubernetes, the user can use registered nodes of the public network version to register the IDC nodes to TKE over the Internet for unified management. The architecture diagram is as follows:

Note:

Unlike the DC version, the public network version can only communicate with TKE through the Internet. By default, the CVM nodes and IDC nodes are two completely isolated partitions, and the pods in CVM nodes cannot communicate with pods in IDC nodes through networks. Therefore, it is recommended that users manage and schedule IDC nodes as a separate node pool to prevent communication between CVM pods and IDC pods.

Constraints

Before using registered nodes of the public network version, ensure that the environment meets the following constraints. Otherwise, product features may not work properly.

Operating system: Registered nodes of the public network version must use TencentOS Server 3.1 or TencentOS Server 2.4 (TK4).

TKE cluster:

The Kubernetes version must be 1.20 or later.

Set "Container network add-on" to Global Router.

There must be at least one CVM node.

Network: IDC nodes must be able to communicate with Tencent Cloud Cloud Load Balancer (CLB) and access TCP ports 443 and 9000 of the CLB.

Hardware (GPU): GPU nodes are currently not supported.
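
The constraints above can be expressed as a simple preflight check. The sketch below is illustrative only: the function and parameter names are assumptions, and the values are expected to be supplied by the caller rather than fetched from any TKE API. The network connectivity constraint is not covered.

```python
import re

SUPPORTED_OS = {"TencentOS Server 3.1", "TencentOS Server 2.4 (TK4)"}
MIN_K8S_VERSION = (1, 20)


def parse_k8s_version(version):
    """Extract (major, minor) from strings such as 'v1.20.6' or '1.22'."""
    match = re.match(r"v?(\d+)\.(\d+)", version)
    if not match:
        raise ValueError(f"unrecognized Kubernetes version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def check_public_network_constraints(k8s_version, network_addon,
                                     cvm_node_count, node_os, has_gpu):
    """Return a list of violated constraints; an empty list means all checks pass."""
    problems = []
    if parse_k8s_version(k8s_version) < MIN_K8S_VERSION:
        problems.append("Kubernetes version must be 1.20 or later")
    if network_addon != "Global Router":
        problems.append("Container network add-on must be Global Router")
    if cvm_node_count < 1:
        problems.append("Cluster must contain at least one CVM node")
    if node_os not in SUPPORTED_OS:
        problems.append("Node OS must be TencentOS Server 3.1 or 2.4 (TK4)")
    if has_gpu:
        problems.append("GPU nodes are currently not supported")
    return problems
```

Returning a list of violations instead of stopping at the first one lets an operator fix every issue in a single pass before registering the node.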

Port Connectivity Configuration of Nodes

To ensure connectivity between Tencent Cloud and the IDC over the Internet, a series of ports need to be configured on the CVM nodes and IDC nodes.

CVM nodes: CVM nodes must use the security group settings that meet TKE requirements. If the TKE cluster uses the Cilium-Overlay network mode, additional security group rules need to be added.

IDC nodes: Configure the node firewall to open the ports listed below, and add rules that allow access to the public network image repository.

Image repository: Ensure that ccr.ccs.tencentyun.com and superedge.tencentcloudcr.com can be accessed on IDC nodes.

Inbound rule

| Protocol | Port | Source | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |
| TCP | 10250 | VXLAN communication is allowed for cluster nodes. | Allow | API server communication is allowed. |

Outbound rule

| Protocol | Port | Destination | Policy | Remarks |
| --- | --- | --- | --- | --- |
| UDP | 8472 | Cluster CIDR, IDC CIDR | Allow | VXLAN communication is allowed for cluster nodes. |
| TCP | 443, 9000 | IP address of CLB | Allow | The CLB address can be accessed to provide the node registration and cloud-edge tunnel services. |

Network Mode

For the public network version of registered nodes, the CVM network and the IDC network are isolated from each other. Therefore, CVM nodes use the Global Router (GR) network for pod communication between CVM nodes, while IDC nodes use the Flannel network for pod communication between IDC nodes. By default, CVM pods are isolated from IDC pods.

Comparison of Capabilities Between Registered Nodes and TKE Nodes

| Class | Capability | TKE Node | Registered Node (DC) | Registered Node (Public Network) |
| --- | --- | --- | --- | --- |
| Node management | Node adding | ✔ | ✔ | ✔ |
| | Node removal | ✔ | ✔ | ✔ |
| | Setting of node tags and taints | ✔ | ✔ | ✔ |
| | Node draining and cordoning | ✔ | ✔ | ✔ |
| | Batch node management in the node pool | ✔ | ✔ | ✔ |
| | Kubernetes upgrade | ✔ | Partial support | Partial support |
| Storage volume | Local storage (emptyDir, hostPath, etc.) | ✔ | ✔ | ✔ |
| | Kubernetes API (ConfigMap, Secret, etc.) | ✔ | ✔ | ✔ |
| | Cloud Block Storage (CBS) | ✔ | - | - |
| | Cloud File Storage (CFS) | ✔ | ✔ | - |
| | Cloud Object Storage (COS) | ✔ | ✔ | - |
| Observability | Prometheus monitoring | ✔ | ✔ | - |
| | Cloud product monitoring | ✔ | - | - |
| | CLS | ✔ | ✔ | - |
| | Cluster audit | ✔ | ✔ | ✔ |
| | Event storage | ✔ | ✔ | ✔ |
| Service | ClusterIP service | ✔ | ✔ | ✔ |
| | NodePort service | ✔ | ✔ | ✔ |
| | LoadBalancer service | ✔ | ✔ | - |
| | CLB ingress | ✔ | ✔ | - |
| | Nginx ingress | ✔ | ✔ | - |
| Others | qGPU | ✔ | - | - |
