- 动态与公告

- 产品简介

- 购买指南

- 快速入门

- 操作指南

- CKafka 连接器

- 实践教程

- 故障处理

- API 文档

- History

- Introduction

- API Category

- Making API Requests

- DataHub APIs

- ACL APIs

- Topic APIs

- DescribeTopicProduceConnection

- BatchModifyGroupOffsets

- BatchModifyTopicAttributes

- CreateConsumer

- CreateDatahubTopic

- CreatePartition

- CreateTopic

- CreateTopicIpWhiteList

- DeleteTopic

- DeleteTopicIpWhiteList

- DescribeDatahubTopic

- DescribeTopic

- DescribeTopicAttributes

- DescribeTopicDetail

- DescribeTopicSubscribeGroup

- FetchMessageByOffset

- FetchMessageListByOffset

- ModifyDatahubTopic

- ModifyTopicAttributes

- DescribeTopicSyncReplica

- Instance APIs

- Route APIs

- Other APIs

- Data Types

- Error Codes

- SDK 文档

- 通用参考

- 常见问题

- 服务等级协议

- 联系我们

- 词汇表

- 动态与公告

- 产品简介

- 购买指南

- 快速入门

- 操作指南

- CKafka 连接器

- 实践教程

- 故障处理

- API 文档

- History

- Introduction

- API Category

- Making API Requests

- DataHub APIs

- ACL APIs

- Topic APIs

- DescribeTopicProduceConnection

- BatchModifyGroupOffsets

- BatchModifyTopicAttributes

- CreateConsumer

- CreateDatahubTopic

- CreatePartition

- CreateTopic

- CreateTopicIpWhiteList

- DeleteTopic

- DeleteTopicIpWhiteList

- DescribeDatahubTopic

- DescribeTopic

- DescribeTopicAttributes

- DescribeTopicDetail

- DescribeTopicSubscribeGroup

- FetchMessageByOffset

- FetchMessageListByOffset

- ModifyDatahubTopic

- ModifyTopicAttributes

- DescribeTopicSyncReplica

- Instance APIs

- Route APIs

- Other APIs

- Data Types

- Error Codes

- SDK 文档

- 通用参考

- 常见问题

- 服务等级协议

- 联系我们

- 词汇表

Spark Streaming 是 Spark Core 的一个扩展,用于高吞吐且容错地处理持续性的数据,目前支持的外部输入有 Kafka、Flume、HDFS/S3、Kinesis、Twitter 和 TCP socket。

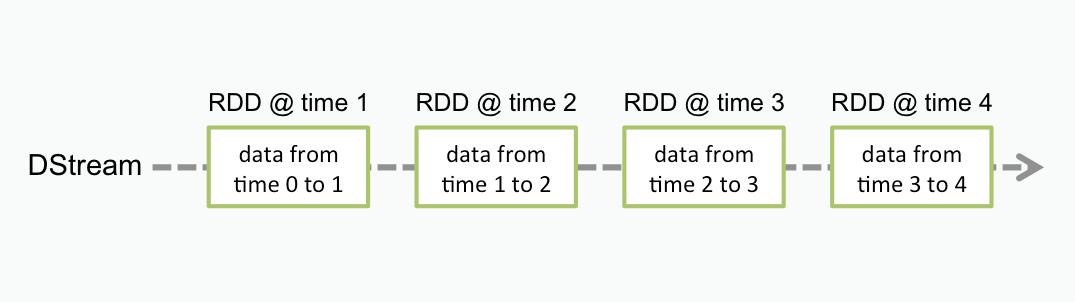

Spark Streaming 将连续数据抽象成 DStream(Discretized Stream),而 DStream 由一系列连续的 RDD(弹性分布式数据集)组成,每个 RDD 是一定时间间隔内产生的数据。使用函数对 DStream 进行处理其实即为对这些 RDD 进行处理。

使用 Spark Streaming 作为 Kafka 的数据输入时,可支持 Kafka 稳定版本与实验版本:

Kafka Version | spark-streaming-kafka-0.8 | spark-streaming-kafka-0.10 |

Broker Version | 0.8.2.1 or higher | 0.10.0 or higher |

Api Maturity | Deprecated | Stable |

Language Support | Scala、Java、Python | Scala、Java |

Receiver DStream | Yes | No |

Direct DStream | Yes | Yes |

SSL / TLS Support | No | Yes |

Offset Commit Api | No | Yes |

Dynamic Topic Subscription | No | Yes |

目前 CKafka 兼容 0.9及以上的版本,本次实践使用 0.10.2.1 版本的 Kafka 依赖。

操作步骤

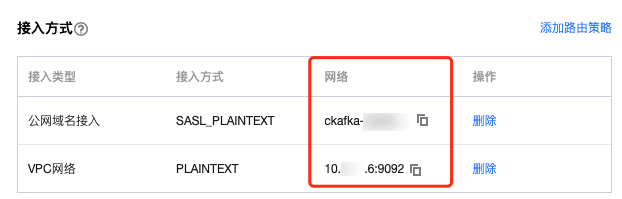

步骤1:获取 CKafka 实例接入地址

1. 登录 CKafka 控制台。

2. 在左侧导航栏选择实例列表,单击实例的“ID”,进入实例基本信息页面。

3. 在实例的基本信息页面的接入方式模块,可获取实例的接入地址,接入地址是生产消费需要用到的 bootstrap-server。

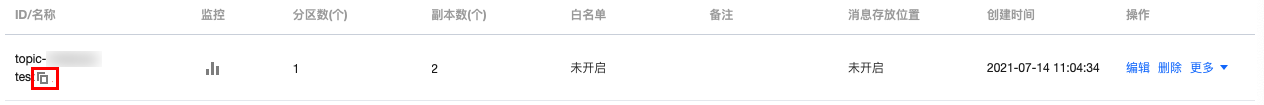

步骤2:创建 Topic

1. 在实例基本信息页面,选择顶部Topic管理页签。

2. 在 Topic 管理页面,单击新建,创建一个名为 test 的 Topic,接下来将以该 Topic 为例介绍如何生产消费。

步骤3:准备云服务器环境

Centos6.8 系统

package | version |

sbt | 0.13.16 |

hadoop | 2.7.3 |

spark | 2.1.0 |

protobuf | 2.5.0 |

ssh | CentOS 默认安装 |

Java | 1.8 |

步骤4:对接 CKafka

这里使用 0.10.2.1 版本的 Kafka 依赖。

1. 在

build.sbt 添加依赖:name := "Producer Example"version := "1.0"scalaVersion := "2.11.8"libraryDependencies += "org.apache.kafka" % "kafka-clients" % "0.10.2.1"

2. 配置

producer_example.scala:import java.util.Propertiesimport org.apache.kafka.clients.producer._object ProducerExample extends App {val props = new Properties()props.put("bootstrap.servers", "172.16.16.12:9092") //实例信息中的内网 IP 与端口props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer")props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer")val producer = new KafkaProducer[String, String](props)val TOPIC="test" //指定要生产的 Topicfor(i<- 1 to 50){val record = new ProducerRecord(TOPIC, "key", s"hello $i") //生产 key 是"key",value 是 hello i 的消息producer.send(record)}val record = new ProducerRecord(TOPIC, "key", "the end "+new java.util.Date)producer.send(record)producer.close() //最后要断开}

DirectStream

1. 在

build.sbt 添加依

赖:name := "Consumer Example"version := "1.0"scalaVersion := "2.11.8"libraryDependencies += "org.apache.spark" %% "spark-core" % "2.1.0"libraryDependencies += "org.apache.spark" %% "spark-streaming" % "2.1.0"libraryDependencies += "org.apache.spark" %% "spark-streaming-kafka-0-10" % "2.1.0"

2. 配置

DirectStream_example.scala:import org.apache.kafka.clients.consumer.ConsumerRecordimport org.apache.kafka.common.serialization.StringDeserializerimport org.apache.kafka.common.TopicPartitionimport org.apache.spark.streaming.kafka010._import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistentimport org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribeimport org.apache.spark.streaming.kafka010.KafkaUtilsimport org.apache.spark.streaming.kafka010.OffsetRangeimport org.apache.spark.streaming.{Seconds, StreamingContext}import org.apache.spark.SparkConfimport org.apache.spark.SparkContextimport collection.JavaConversions._import Array._object Kafka {def main(args: Array[String]) {val kafkaParams = Map[String, Object]("bootstrap.servers" -> "172.16.16.12:9092","key.deserializer" -> classOf[StringDeserializer],"value.deserializer" -> classOf[StringDeserializer],"group.id" -> "spark_stream_test1","auto.offset.reset" -> "earliest","enable.auto.commit" -> "false")val sparkConf = new SparkConf()sparkConf.setMaster("local")sparkConf.setAppName("Kafka")val ssc = new StreamingContext(sparkConf, Seconds(5))val topics = Array("spark_test")val offsets : Map[TopicPartition, Long] = Map()for (i <- 0 until 3){val tp = new TopicPartition("spark_test", i)offsets.updated(tp , 0L)}val stream = KafkaUtils.createDirectStream[String, String](ssc,PreferConsistent,Subscribe[String, String](topics, kafkaParams))println("directStream")stream.foreachRDD{ rdd=>//输出获得的消息rdd.foreach{iter =>val i = iter.valueprintln(s"${i}")}//获得offsetval offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRangesrdd.foreachPartition { iter =>val o: OffsetRange = offsetRanges(TaskContext.get.partitionId)println(s"${o.topic} ${o.partition} ${o.fromOffset} ${o.untilOffset}")}}// Start the computationssc.start()ssc.awaitTermination()}}

RDD

1. 配置

build.sbt(配置同上,单击查看)。2. 配置

RDD_example:import org.apache.kafka.clients.consumer.ConsumerRecordimport org.apache.kafka.common.serialization.StringDeserializerimport org.apache.spark.streaming.kafka010._import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistentimport org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribeimport org.apache.spark.streaming.kafka010.KafkaUtilsimport org.apache.spark.streaming.kafka010.OffsetRangeimport org.apache.spark.streaming.{Seconds, StreamingContext}import org.apache.spark.SparkConfimport org.apache.spark.SparkContextimport collection.JavaConversions._import Array._object Kafka {def main(args: Array[String]) {val kafkaParams = Map[String, Object]("bootstrap.servers" -> "172.16.16.12:9092","key.deserializer" -> classOf[StringDeserializer],"value.deserializer" -> classOf[StringDeserializer],"group.id" -> "spark_stream","auto.offset.reset" -> "earliest","enable.auto.commit" -> (false: java.lang.Boolean))val sc = new SparkContext("local", "Kafka", new SparkConf())val java_kafkaParams : java.util.Map[String, Object] = kafkaParams//按顺序向 parition 拉取相应 offset 范围的消息,如果拉取不到则阻塞直到超过等待时间或者新生产消息达到拉取的数量val offsetRanges = Array[OffsetRange](OffsetRange("spark_test", 0, 0, 5),OffsetRange("spark_test", 1, 0, 5),OffsetRange("spark_test", 2, 0, 5))val range = KafkaUtils.createRDD[String, String](sc,java_kafkaParams,offsetRanges,PreferConsistent)range.foreach(rdd=>println(rdd.value))sc.stop()}}

配置环境

安装 sbt

1. 在 sbt 官网 上下载 sbt 包。

2. 解压后在 sbt 的目录下创建一个 sbt_run.sh 脚本并增加可执行权限,脚本内容如下:

#!/bin/bashSBT_OPTS="-Xms512M -Xmx1536M -Xss1M -XX:+CMSClassUnloadingEnabled -XX:MaxPermSize=256M"java $SBT_OPTS -jar `dirname $0`/bin/sbt-launch.jar "$@"

chmod u+x ./sbt_run.sh

3. 执行以下命令。

./sbt-run.sh sbt-version

若能看到 sbt 版本说明可以正常运行。

安装 protobuf

1. 下载 protobuf 相应版本。

2. 解压后进入目录。

./configuremake && make install

需要预先安装 gcc-g++,执行中可能需要 root 权限。

3. 重新登录,在命令行中输入下述内容。

protoc --version

4. 若能看到 protobuf 版本说明可以正常运行。

安装 Hadoop

1. 访问 Hadoop 官网 下载所需要的版本。

2. 增加 Hadoop 用户。

useradd -m hadoop -s /bin/bash

3. 增加管理员权限。

visudo

4. 在

root ALL=(ALL) ALL下增加一行。hadoop ALL=(ALL) ALL保存退出。5. 使用 Hadoop 进行操作。

su hadoop

6. SSH 无密码登录。

cd ~/.ssh/ # 若没有该目录,请先执行一次ssh localhostssh-keygen -t rsa # 会有提示,都按回车就可以cat id_rsa.pub >> authorized_keys # 加入授权chmod 600 ./authorized_keys # 修改文件权限

7. 安装 Java。

sudo yum install java-1.8.0-openjdk java-1.8.0-openjdk-devel

8. 配置 ${JAVA_HOME}。

vim /etc/profile

在文末加上下述内容:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.121-0.b13.el6_8.x86_64/jreexport PATH=$PATH:$JAVA_HOME

根据安装情况修改对应路径。

9. 解压 Hadoop,进入目录。

./bin/hadoop version

若能显示版本信息说明能正常运行。

10. 配置单机伪分布式(可根据需要搭建不同形式的集群)。

vim /etc/profile

在文末加上下述内容:

export HADOOP_HOME=/usr/local/hadoopexport PATH=$HADOOP_HOME/bin:$PATH

根据安装情况修改对应路径。

11. 修改

/etc/hadoop/core-site.xml。<configuration><property><name>hadoop.tmp.dir</name><value>file:/usr/local/hadoop/tmp</value><description>Abase for other temporary directories.</description></property><property><name>fs.defaultFS</name><value>hdfs://localhost:9000</value></property></configuration>

12. 修改

/etc/hadoop/hdfs-site.xml。<configuration><property><name>dfs.replication</name><value>1</value></property><property><name>dfs.namenode.name.dir</name><value>file:/usr/local/hadoop/tmp/dfs/name</value></property><property><name>dfs.datanode.data.dir</name><value>file:/usr/local/hadoop/tmp/dfs/data</value></property></configuration>

13. 修改

/etc/hadoop/hadoop-env.sh 中的 JAVA_HOME 为 Java 的路径。export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.121-0.b13.el6_8.x86_64/jre

14. 执行 NameNode 格式化。

./bin/hdfs namenode -format

显示

Exitting with status 0则表示成功。15. 启动 Hadoop。

./sbin/start-dfs.sh

成功启动会存在

NameNode 进程,DataNode 进程,SecondaryNameNode 进程。安装 Spark

说明:

本示例同样使用

hadoop 用户进行操作。1. 解压进入目录。

2. 修改配置文件。

cp ./conf/spark-env.sh.template ./conf/spark-env.shvim ./conf/spark-env.sh

在第一行添加下述内容:

export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

根据 hadoop 安装情况修改路径。

3. 运行示例。

bin/run-example SparkPi

若成功安装可以看到程序输出 π 的近似值。

是

是

否

否

本页内容是否解决了您的问题?