- 动态与公告

- 产品简介

- 购买指南

- 快速入门

- 操作指南

- CKafka 连接器

- 实践教程

- 故障处理

- API 文档

- History

- Introduction

- API Category

- Making API Requests

- DataHub APIs

- ACL APIs

- Topic APIs

- DescribeTopicProduceConnection

- BatchModifyGroupOffsets

- BatchModifyTopicAttributes

- CreateConsumer

- CreateDatahubTopic

- CreatePartition

- CreateTopic

- CreateTopicIpWhiteList

- DeleteTopic

- DeleteTopicIpWhiteList

- DescribeDatahubTopic

- DescribeTopic

- DescribeTopicAttributes

- DescribeTopicDetail

- DescribeTopicSubscribeGroup

- FetchMessageByOffset

- FetchMessageListByOffset

- ModifyDatahubTopic

- ModifyTopicAttributes

- DescribeTopicSyncReplica

- Instance APIs

- Route APIs

- Other APIs

- Data Types

- Error Codes

- SDK 文档

- 通用参考

- 常见问题

- 服务等级协议

- 联系我们

- 词汇表

- 动态与公告

- 产品简介

- 购买指南

- 快速入门

- 操作指南

- CKafka 连接器

- 实践教程

- 故障处理

- API 文档

- History

- Introduction

- API Category

- Making API Requests

- DataHub APIs

- ACL APIs

- Topic APIs

- DescribeTopicProduceConnection

- BatchModifyGroupOffsets

- BatchModifyTopicAttributes

- CreateConsumer

- CreateDatahubTopic

- CreatePartition

- CreateTopic

- CreateTopicIpWhiteList

- DeleteTopic

- DeleteTopicIpWhiteList

- DescribeDatahubTopic

- DescribeTopic

- DescribeTopicAttributes

- DescribeTopicDetail

- DescribeTopicSubscribeGroup

- FetchMessageByOffset

- FetchMessageListByOffset

- ModifyDatahubTopic

- ModifyTopicAttributes

- DescribeTopicSyncReplica

- Instance APIs

- Route APIs

- Other APIs

- Data Types

- Error Codes

- SDK 文档

- 通用参考

- 常见问题

- 服务等级协议

- 联系我们

- 词汇表

操作场景

本文介绍使用 Node.js 客户端连接 CKafka 弹性 Topic 并收发消息的操作步骤。

前提条件

安装 GCC

操作步骤

步骤1:准备环境

安装 C++依赖库

1. 执行以下命令切换到 yum 源配置目录

/etc/yum.repos.d/。cd /etc/yum.repos.d/

2. 创建 yum 源配置文件 confluent.repo。

[Confluent.dist]name=Confluent repository (dist)baseurl=https://packages.confluent.io/rpm/5.1/7gpgcheck=1gpgkey=https://packages.confluent.io/rpm/5.1/archive.keyenabled=1[Confluent]name=Confluent repositorybaseurl=https://packages.confluent.io/rpm/5.1gpgcheck=1gpgkey=https://packages.confluent.io/rpm/5.1/archive.keyenabled=1

3. 执行以下命令安装 C++ 依赖库。

yum install librdkafka-devel

安装 Node.js 依赖库

1. 执行以下命令为预处理器指定 OpenSSL 头文件路径。

export CPPFLAGS=-I/usr/local/opt/openssl/include

2. 执行以下命令为连接器指定 OpenSSL 库路径。

export LDFLAGS=-L/usr/local/opt/openssl/lib

3. 执行以下命令安装 Node.js 依赖库。

npm install i --unsafe-perm node-rdkafka

步骤2:创建 Topic 和订阅关系

1. 在控制台的弹性 Topic 列表页面创建一个 Topic。

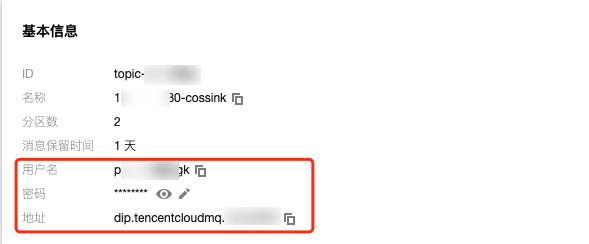

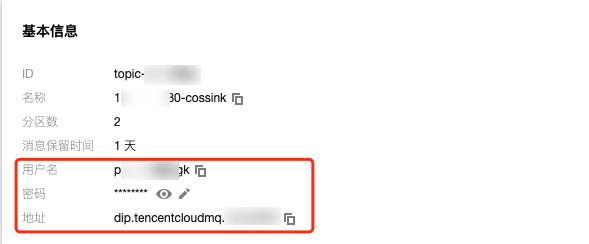

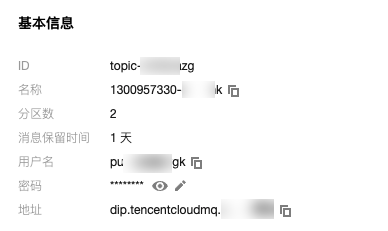

2. 单击 Topic 的 “ID” 进入基本信息页面,获取用户名、密码和地址信息。

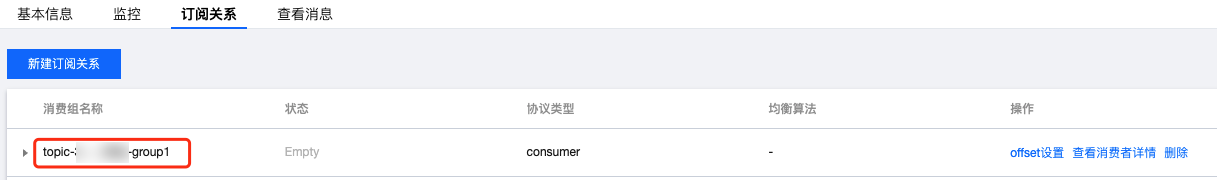

3. 在订阅关系页签,新建一个订阅关系(消费组)。

步骤2:添加配置文件

module.exports = {'sasl_plain_username': 'your_user_name','sasl_plain_password': 'your_user_password','bootstrap_servers': ["xxx.xx.xx.xx:port"],'topic_name': 'xxx','group_id': 'xxx'}

参数 | 描述 |

bootstrapServers | 接入地址,在控制台的弹性 Topic 基本信息页面获取。  |

sasl_plain_username | 用户名,在控制台的弹性 Topic 基本信息页面获取。 |

sasl_plain_password | 用户密码,在控制台的弹性 Topic 基本信息页面获取。 |

topic_name | Topic 名称,在控制台的弹性 Topic 基本信息页面获取。 |

group.id | 消费组名称,在控制台的弹性 Topic 的订阅关系列表获取。  |

步骤3:生产消息

1. 编写生产消息程序 producer.js

const Kafka = require('node-rdkafka');const config = require('./setting');console.log("features:" + Kafka.features);console.log(Kafka.librdkafkaVersion);var producer = new Kafka.Producer({'api.version.request': 'true','bootstrap.servers': config['bootstrap_servers'],'dr_cb': true,'dr_msg_cb': true,'security.protocol' : 'SASL_PLAINTEXT','sasl.mechanisms' : 'PLAIN','sasl.username' : config['sasl_plain_username'],'sasl.password' : config['sasl_plain_password']});var connected = falseproducer.setPollInterval(100);producer.connect();producer.on('ready', function() {connected = trueconsole.log("connect ok")});function produce() {try {producer.produce(config['topic_name'],new Buffer('Hello CKafka SASL'),null,Date.now());} catch (err) {console.error('Error occurred when sending message(s)');console.error(err);}}producer.on("disconnected", function() {connected = false;producer.connect();})producer.on('event.log', function(event) {console.log("event.log", event);});producer.on("error", function(error) {console.log("error:" + error);});producer.on('delivery-report', function(err, report) {console.log("delivery-report: producer ok");});// Any errors we encounter, including connection errorsproducer.on('event.error', function(err) {console.error('event.error:' + err);})setInterval(produce,1000,"Interval");

2. 执行以下命令发送消息。

node producer.js

3. 查看运行结果。

步骤4:消费消息

1. 创建消费消息程序 consumer.js。

consumer.on('event.log', function(event) {console.log("event.log", event);});consumer.on('error', function(error) {console.log("error:" + error);});consumer.on('event', function(event) {console.log("event:" + event);});const Kafka = require('node-rdkafka');const config = require('./setting');console.log(Kafka.features);console.log(Kafka.librdkafkaVersion);console.log(config)var consumer = new Kafka.KafkaConsumer({'api.version.request': 'true','bootstrap.servers': config['bootstrap_servers'],'security.protocol' : 'SASL_PLAINTEXT','sasl.mechanisms' : 'PLAIN','message.max.bytes': 32000,'fetch.message.max.bytes': 32000,'max.partition.fetch.bytes': 32000,'sasl.username' : config['sasl_plain_username'],'sasl.password' : config['sasl_plain_password'],'group.id' : config['group_id']});consumer.connect();consumer.on('ready', function() {console.log("connect ok");consumer.subscribe([config['topic_name']]);consumer.consume();})consumer.on('data', function(data) {console.log(data);});consumer.on('event.log', function(event) {console.log("event.log", event);});consumer.on('error', function(error) {console.log("error:" + error);});consumer.on('event', function(event) {console.log("event:" + event);});

2. 执行以下命令消费消息。

node consumer.js

3. 查看运行结果。

是

是

否

否

本页内容是否解决了您的问题?