|

Cluster health | ES cluster health status. 0: green (the cluster is normal); 1: yellow (alarm; some replica shards are unavailable); 2: red (exception; some primary shards are unavailable). | Green indicates that all primary and replica shards are available and the cluster is fully healthy. Yellow indicates that all primary shards are available, but some replica shards are not. In this case, search results are still complete, but the high availability of the cluster is reduced and the risk of data loss is higher. When the cluster health status changes to yellow, locate and troubleshoot the problem promptly to prevent data loss. Red indicates that at least one primary shard and all its replicas are unavailable. When the cluster health status changes to red, some data is unavailable and may already be lost, searches return only partial data, and write requests routed to an unavailable shard return an exception. In this case, locate and troubleshoot the abnormal shard as soon as possible. |
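The numeric encoding in the row above can be sketched as a small lookup. This is only an illustration: it assumes the status string comes from the `status` field of an ES `_cluster/health` response, which the source does not state, and `health_code` is a hypothetical helper name.

```python
# Numeric codes as listed in the "Cluster health" row.
HEALTH_CODES = {"green": 0, "yellow": 1, "red": 2}


def health_code(cluster_health: dict) -> int:
    """Map a cluster health response to the metric value.

    Assumption: `cluster_health` is the parsed JSON body of a
    `_cluster/health` call, whose `status` field is one of
    "green", "yellow", or "red".
    """
    return HEALTH_CODES[cluster_health["status"]]


if __name__ == "__main__":
    sample = {"cluster_name": "demo", "status": "yellow"}  # illustrative data
    print(health_code(sample))
```

A value of 1 or 2 from such a helper would correspond to the yellow and red cases described above.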

Avg disk usage | The average disk utilization of all nodes in the cluster in one statistical period (1 minute). | If disk utilization is too high, data cannot be written properly. Solutions: promptly delete indices that are no longer needed, or expand the cluster capacity by increasing the disk capacity of individual nodes or adding nodes. |

Max disk utilization | The maximum disk utilization value of all nodes in the cluster in one statistical period (1 minute). | - |

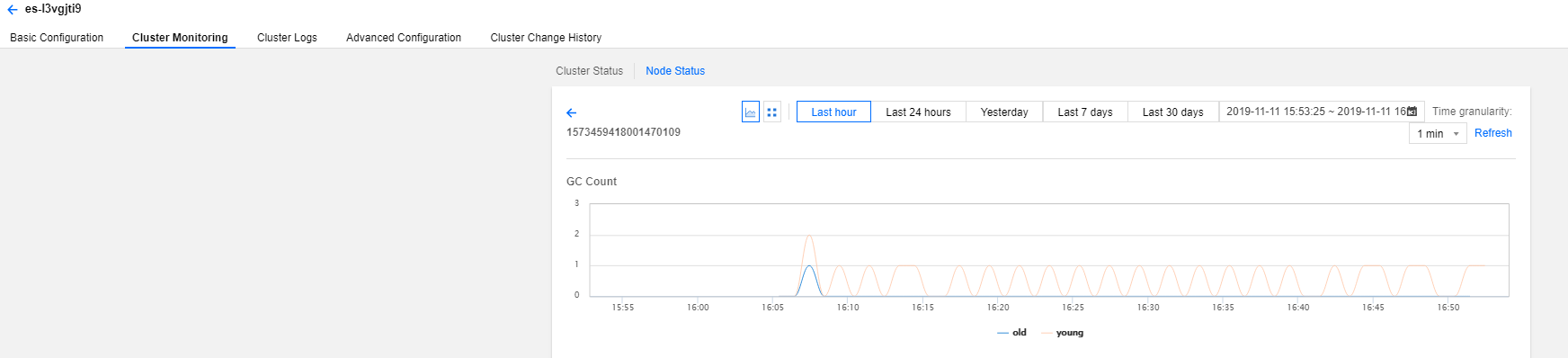

Avg JVM memory utilization | The average JVM memory utilization of all nodes in the cluster in one statistical period (1 minute). | If this value is too high, cluster nodes will perform GC frequently or even run out of memory (OOM). This generally happens because the tasks being processed by ES exceed the capacity of the nodes' JVMs. Check the tasks that the cluster is executing, or adjust the cluster configuration. |

Max JVM memory utilization | The maximum JVM memory utilization value of all nodes in the cluster in one statistical period (1 minute). | - |

Avg CPU utilization | The average CPU utilization of all nodes in the cluster in one statistical period (1 minute). | When the read/write tasks processed by the cluster nodes exceed the capacity of the nodes' CPUs, this metric becomes too high. In this case, the processing power of the cluster nodes decreases, and the nodes may even crash. To solve this problem, first observe whether the value is persistently or only temporarily high. If it spikes temporarily, determine whether temporary complex tasks are in progress. If it is persistently high, analyze whether your business's read/write operations on the cluster can be optimized, for example by lowering the read/write frequency and reducing the amount of data, so as to reduce the node load. If the node configuration cannot meet the throughput requirements of your business, we recommend that you scale the cluster nodes vertically to increase the capacity of individual nodes. |

Max CPU utilization | The maximum CPU utilization value of all nodes in the cluster in one statistical period (1 minute). | - |

Avg cluster load per minute | The average of the 1-minute load averages (load_1m) of all nodes in the cluster. Source of the metric: ES node status API (_nodes/stats/os/cpu/load_average/1m). | If load_1m is too high, we recommend that you lower the cluster load or upgrade the cluster node specification. |

Max cluster load per minute | The maximum of the 1-minute load averages (load_1m) of all nodes in the cluster. | - |

Avg write latency | Write latency (index_latency) is the time taken by a single index request (ms/request). The average write latency of the cluster is the average of the single index request times of all nodes in one statistical period (1 minute). Calculation rule for the single index request time of a node: two cumulative counters are recorded once every statistical period (1 minute): the total number of index operations on the node (_nodes/stats/indices/indexing/index_total) and the total time spent on index operations (_nodes/stats/indices/indexing/index_time_in_millis). The difference between two adjacent records (i.e., the increment within one statistical period) is taken for each counter, and the time increment is divided by the operation increment (index time / number of index operations) to get the average single index time in that statistical period (1 minute). | Write latency is the average time it takes to write a single document. The average write latency of the cluster is the average of the write latencies of all nodes in one statistical period. If the write latency is too high, we recommend that you upgrade the node specification or increase the number of nodes. |

Max write latency | Write latency (index_latency) refers to the time taken by a single index request (ms/request). The maximum write latency of the cluster is the maximum value of time taken by a single index request of all nodes in one statistical period (1 minute). Calculation rule for single index request time of a node: see the average write latency section. | - |

Avg query latency | Query latency (search_latency) is the time taken by a single query request (ms/request). The average query latency of the cluster is the average of the single query request times of all nodes in one statistical period (1 minute). Calculation rule for the single query request time of a node: two cumulative counters are recorded once every statistical period (1 minute): the total number of queries on the node (_nodes/stats/indices/search/query_total) and the total time spent on queries (_nodes/stats/indices/search/query_time_in_millis). The difference between two adjacent records (i.e., the increment within one statistical period) is taken for each counter, and the time increment is divided by the query increment (query time / number of queries) to get the average single query time in that statistical period (1 minute). | Query latency is the average time it takes to perform a single query. The average query latency of the cluster is the average of the query latencies of all nodes in one statistical period. If the query latency is too high, we recommend that you upgrade the node specification or increase the number of nodes. |

Max query latency | Query latency (search_latency) refers to the time taken by a single query request (ms/request). The maximum query latency of the cluster is the maximum value of time taken by a single query request of all nodes in one statistical period (1 minute). Calculation rule for single query request time of a node: see the average query latency section. | - |
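The latency calculation rule used by the write and query latency metrics above can be sketched as follows. This is a minimal illustration, not the monitoring system's actual implementation: the function names are hypothetical, and returning `None` when a node handled no requests or its counters went backwards (for example, after a restart) is an assumption that the source does not specify.

```python
from typing import List, Optional, Tuple


def avg_latency_ms(prev_total: int, prev_time_ms: int,
                   curr_total: int, curr_time_ms: int) -> Optional[float]:
    """Average time per request (ms/request) between two adjacent samples.

    For write latency the inputs are index_total / index_time_in_millis;
    for query latency they are query_total / query_time_in_millis.
    """
    delta_requests = curr_total - prev_total
    delta_time_ms = curr_time_ms - prev_time_ms
    if delta_requests <= 0 or delta_time_ms < 0:
        # Assumption: no requests in the period, or counters were reset.
        return None
    return delta_time_ms / delta_requests


def cluster_latency(per_node: List[Optional[float]]
                    ) -> Tuple[Optional[float], Optional[float]]:
    """Return (average, maximum) over the nodes that have a value."""
    values = [v for v in per_node if v is not None]
    if not values:
        return None, None
    return sum(values) / len(values), max(values)


if __name__ == "__main__":
    # Illustrative counters: 600 requests took 3000 ms in one period.
    node_a = avg_latency_ms(1000, 5000, 1600, 8000)
    node_b = avg_latency_ms(200, 900, 300, 2400)
    print(cluster_latency([node_a, node_b]))
```

The first element of the returned pair corresponds to the "Avg" metric and the second to the "Max" metric.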

Avg number of writes per second | The average number of index requests received per second by all nodes in the cluster. Calculation rule for the number of index requests per second of a node: the cumulative number of index operations on the node (_nodes/stats/indices/indexing/index_total) is recorded once every statistical period (1 minute), and the difference between two adjacent records (i.e., the increment within one statistical period) is divided by the length of the period (number of index operations / 60 seconds) to get the average number of index requests per second in that statistical period. | - |

Avg number of queries per second | The average number of query requests received per second by all nodes in the cluster. Calculation rule for the number of query requests per second of a node: the cumulative number of queries on the node (_nodes/stats/indices/search/query_total) is recorded once every statistical period (1 minute), and the difference between two adjacent records (i.e., the increment within one statistical period) is divided by the length of the period (number of queries / 60 seconds) to get the average number of query requests per second in that statistical period. | - |
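The per-second calculation rule in the two rows above can be sketched as a single function. The name `requests_per_second` is hypothetical, and treating a decreasing counter as "no data" is an assumption rather than documented behavior.

```python
from typing import Optional


def requests_per_second(prev_total: int, curr_total: int,
                        period_seconds: int = 60) -> Optional[float]:
    """Average requests per second on one node over one statistical period.

    `prev_total` and `curr_total` are two adjacent samples of a cumulative
    counter such as index_total or query_total.
    """
    delta = curr_total - prev_total
    if delta < 0:
        # Assumption: the counter was reset, so the period is skipped.
        return None
    return delta / period_seconds


if __name__ == "__main__":
    # Illustrative counters: 3000 new requests in a 60-second period.
    print(requests_per_second(12000, 15000))
```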

Write rejection rate | The ratio of the number of write requests rejected by the cluster to the total number of write requests in one statistical period. Calculation rule: two cumulative counters are collected once every statistical period: the number of rejected write requests (v5.6.4: _nodes/stats/thread_pool/bulk/rejected; v6.4.3 and above: _nodes/stats/thread_pool/write/rejected) and the total number of write requests (v5.6.4: _nodes/stats/thread_pool/bulk/completed; v6.4.3 and above: _nodes/stats/thread_pool/write/completed). The difference between two adjacent records (i.e., the increment within one statistical period) is taken for each counter, and the ratio is calculated (number of rejected write requests / total number of write requests). | When the write QPS is too high or the CPU, memory, or disk utilization is too high, the cluster's write rejection rate may increase. Generally, this is because the current cluster configuration cannot meet the write requirements of your business. If the node configuration is too low, you can solve this problem by upgrading the node specification or reducing the number of write operations. If the disk utilization is too high, you can solve this problem by expanding the cluster's disk capacity or deleting data that is no longer needed. |

Query rejection rate | The ratio of the number of query requests rejected by the cluster to the total number of query requests in one statistical period. Calculation rule: two cumulative counters are collected once every statistical period: the number of rejected query requests (_nodes/stats/thread_pool/search/rejected) and the total number of query requests (_nodes/stats/thread_pool/search/completed). The difference between two adjacent records (i.e., the increment within one statistical period) is taken for each counter, and the ratio is calculated (number of rejected query requests / total number of query requests). | When the query QPS is too high or the CPU or memory utilization is too high, the cluster's query rejection rate may increase. Generally, this is because the current cluster configuration cannot meet the read requirements of your business. If this value is too high, we recommend that you upgrade the cluster node specification to improve the processing capability of the cluster nodes. |
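The rejection rate rule shared by the write and query rows above can be sketched as follows. This is an illustration under stated assumptions: `rejection_rate` is a hypothetical name, the "total" is taken from the thread pool's `completed` counter exactly as the rows describe, and returning `None` when no requests completed in the period is an assumption.

```python
from typing import Optional


def rejection_rate(prev_rejected: int, prev_completed: int,
                   curr_rejected: int, curr_completed: int) -> Optional[float]:
    """Rejected requests divided by total requests in one statistical period.

    Inputs are two adjacent samples of the thread pool's cumulative
    `rejected` and `completed` counters (bulk/write pool for writes,
    search pool for queries).
    """
    delta_rejected = curr_rejected - prev_rejected
    delta_total = curr_completed - prev_completed
    if delta_rejected < 0 or delta_total <= 0:
        # Assumption: counters were reset or nothing completed this period.
        return None
    return delta_rejected / delta_total


if __name__ == "__main__":
    # Illustrative counters: 5 rejections against 100 completed requests.
    print(rejection_rate(10, 1000, 15, 1100))
```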

Total documents | Total number of documents written to the cluster. Source of the metric: ES cluster document quantity API (_cluster/stats/indices/docs/count). | - |

Auto snapshot backup status | The backup result after auto snapshot backup is enabled for the cluster. 0: auto backup is not enabled; 1: auto backup is normal; -1: auto backup failed. | Auto snapshot backup will periodically back up the cluster data to COS, so that the data can be recovered when needed, thus more comprehensively ensuring data security. We recommend you enable it. For more information, please see Automatic Snapshot Backup. |