Migration from MySQL to TencentDB for MySQL

Last updated: 2024-12-25 14:55:07

Overview

This document describes how to use the data migration feature of DTS to migrate data from MySQL to TencentDB for MySQL.

The following deployment modes of the source database are supported:

Self-built MySQL.

TencentDB for MySQL.

MySQL in other clouds: Alibaba Cloud ApsaraDB RDS, Alibaba Cloud ApsaraDB for PolarDB, Amazon RDS, and Amazon Aurora.

Business Impact

1. DTS performs a read operation of the source database’s entire data during full data migration, which increases the source database’s load. If the database specifications are low, it is recommended to schedule the migration during off-peak hours or reduce the DTS speed before you start the task.

The impact varies with the specifications of the source database. For example, with a source database of 8 cores and 16 GB, DTS tasks adopt 8-thread concurrency (adjustable) by default. In a network without bottlenecks, the impact on the source database’s performance is as follows:

DTS full export phase: Use approximately 18%-45% of the source database’s CPU, increase query pressure by about 40-60 MB/s, and occupy roughly 8 active session connections.

DTS incremental export phase: Poses minimal pressure on the source database, with only one connection monitoring the binlog of the source database in real time.

2. Migration is implemented without locks by default, during which no global lock (the FTWRL lock) is added to the source database, and only tables without a primary key are locked.

3. When you create a data consistency check task, DTS will use the account that executes the migration task to write the system database

__tencentdb__ in the source database to record the data comparison information during the migration task.To ensure that subsequent data problems can be located, the

__tencentdb__ system database in the source database will not be deleted after the migration task ends.The

__tencentdb__ system database uses a single-threaded connection wait mechanism and occupies a very small space, about 0.01%–0.1% of the storage space of the source database; for example, if the source database is 50 GB, __tencentdb__ will be about 5–50 MB. Therefore, it has almost no impact on the performance of the source database and will not preempt resources.Preparation

1. Ensure that the access channel between DTS and the database is established based on the access type you intend to use. For details, see Network Preparation Work.

2. Grant the required permissions to the task execution account in the source database, as detailed below:

Migration of the entire instance:

CREATE USER 'migration_account'@'%' IDENTIFIED BY 'migration password';GRANT RELOAD, LOCK TABLES, REPLICATION CLIENT, REPLICATION SLAVE, SHOW DATABASES, SHOW VIEW, PROCESS ON *.* TO 'migration_account'@'%';//If the Source Database is Alibaba Cloud Database, SHOW DATABASES authorization is not required, but at least one Non-system Database needs to be created. Otherwise, the Pre-validation Task will fail. Authorization is required for other non-Alibaba Cloud Database scenarios. For Alibaba Cloud Database authorization, please refer to https://help.aliyun.com/document_detail/96101.html//If you choose to migrate triggers and events, TRIGGER and EVENT permissions are requiredGRANT ALL PRIVILEGES ON `__tencentdb__`.* TO 'migration_account'@'%';GRANT SELECT ON *.* TO 'migration_account';

Migration of specified objects:

CREATE USER 'migration_account'@'%' IDENTIFIED BY 'migration password';GRANT RELOAD, LOCK TABLES, REPLICATION CLIENT, REPLICATION SLAVE, SHOW DATABASES, SHOW VIEW, PROCESS ON *.* TO 'migration_account'@'%';//If the Source Database is Alibaba Cloud Database, SHOW DATABASES authorization is not required, but at least one Non-system Database needs to be created. Otherwise, the Pre-validation Task will fail. Authorization is required for other non-Alibaba Cloud Database scenarios. For Alibaba Cloud Database authorization, please refer to https://help.aliyun.com/document_detail/96101.html//If you choose to migrate triggers and events, you need to grant both TRIGGER and EVENT permissionsGRANT ALL PRIVILEGES ON `__tencentdb__`.* TO 'migration_account'@'%';GRANT SELECT ON `mysql`.* TO 'migration_account'@'%';GRANT SELECT ON libraries to be migrated.* TO 'migration_account';

3. Grant the task execution account the following required permissions in the target database:

ALTER, ALTER ROUTINE, CREATE, CREATE ROUTINE, CREATE TEMPORARY TABLES, CREATE USER, CREATE VIEW, DELETE, DROP, EVENT, EXECUTE, INDEX, INSERT, LOCK TABLES, PROCESS, REFERENCES, RELOAD, SELECT, SHOW DATABASES, SHOW VIEW, TRIGGER, UPDATE.

Usage Instructions

Migration Objects

1. Basic tables, views, functions, triggers, stored procedures, and events can be migrated. System database tables, such as

information_schema , sys , performance_schema , __cdb_recycle_bin__ , __recycle_bin__ , __tencentdb__ , and mysql can not be migrated.2. Related data objects should be migrated together to avoid migration failure. Common relationships include views referencing tables, views referencing other views, and primary-foreign key relationships.

3. It is recommended to migrate tables with primary keys or non-null unique keys to prevent data duplication.

4. When migrating views, stored procedures, and functions, DTS checks whether user1, corresponding to the

DEFINER ([DEFINER = user1]) in the source database, matches the migration account user2. If they do not match, after migration, DTS modifies the SQL SECURITY attribute of user1 in the target database from DEFINER to INVOKER ([INVOKER = user1]), and sets the DEFINER in the target database to the migration account user2 ([DEFINER = migration account user2]). If the view definitions in the source database are too complex, the migration task may fail.5. When the source database is Alibaba Cloud RDS or PolarDB, since RDS and PolarDB add additional primary key columns to tables without primary keys or non-null unique keys in the Binlog (which are not visible in the table structure), DTS may fail to recognize them. Therefore, it is recommended to avoid migrating tables without primary keys.

6. Only databases using the InnoDB, MyISAM, or TokuDB engines can be migrated. Tables using other database engines will be skipped by default. If the source database contains compressed TokuDB engine data, the target database should support compression mode for migration; otherwise, the task will fail.

7. When the target database is a Tencent Cloud MySQL instance using the RocksDB engine, the source InnoDB/TokuDB engines can be converted to the target RocksDB engine. However, there are specific constraints for converting the InnoDB to RocksDB engine:

7.1 If the primary key or part of the primary key in a table to be migrated from the source database is of the types TINYTEXT, TEXT, MEDIUMTEXT, or LONGTEXT, these types do not support value equality on the target side. Therefore, SQL statements related to value equality (such as DELETE FROM table_name WHERE primary_key_column = 'some text') will not work after synchronization, potentially leading to data inconsistency between the source and target databases.

7.2 During data consistency verification, if the primary key or part of the primary key in a table to be migrated from the source database is of the types TINYTEXT, TEXT, MEDIUMTEXT, and LONGTEXT, and the table contains more than 50,000 rows, the table will be skipped, and no consistency verification will be performed.

7.3 Due to differences in the underlying storage of DOUBLE data types between RocksDB and InnoDB engines, if the source DOUBLE value approaches its upper limit (such as -1.7976931348623157E+308), false negatives may occur when you create consistency verification tasks. While the actual data may be consistent, the verification results might show discrepancies.

7.4 Tables involving partitions are not supported for migration.

Data Types

1. During incremental migration, if the source database generates Binlog statements in the

STATEMENT format, migration will fail.2. If there are gaps in the GTID of the source database’s Binlog, it may impact migration task performance and lead to task failure.

3. Scenarios where DML and DDL statements are included in the same transaction are not supported. In such cases, the task will return an error.

4. Geometry-related data types are not supported. Tasks involving these data types will fail.

5.

ALTER VIEW statements are not supported. Such statements will be skipped and not migrated.6. JSON data type specifics for migration linkages between MySQL and TDSQL-C MySQL

6.1 For data requiring precision, it is recommended to use types such as DECIMAL for separate recording rather than JSON. This is because MySQL’s handling of floats in JSON can result in a loss of precision for numbers with six or more decimal places.

6.2 DTS handling of floats in JSON types

When MySQL 5.7 or earlier is the target database, due to issues inherent in MySQL 5.7, floats x.0 in JSON on the source may be converted to x on the target during synchronization. For example, source data {"a":12.0} would be synchronized to the target as {"a":12}.

6.2.1 When MySQL 5.7 or earlier is the source database and MySQL 8.0 or later is the target database, due to issues inherent in MySQL 5.7, the float x.0 in JSON on the source might appear as x when read via SELECT. However, the binlog still records the value as a floating-point type, and DTS processes it accordingly, resulting in x.0 on the target database. For example, source data {"a":12.0} may appear as {"a":12} in SELECT results but will be synchronized as {"a":12.0} to the target.

6.2.2 Regardless of the version, if the float in the JSON type from the source database has more than one decimal place, such as {"a":12.00} or {"a":12.000}, it will be synchronized to the target database as {"a":12.0}.

Additional Instructions

1. If the source database is a non-GTID instance, DTS does not support HA switch at the source side. If the source MySQL switches, it may cause the DTS incremental migration to be interrupted.

2. It is recommended not to perform dual writes on the target database during the task, as this may cause task failures or inconsistencies in the synchronized data.

Full synchronization phase: When DTS writes the initial full data to the target database and detects primary key conflicts, the task will report an error .

Incremental synchronization phase: When DTS writes newly added data from the full synchronization phase into the target database and detects primary key conflicts, it will overwrite the target database with data from the source. When DTS writes newly added data from the incremental synchronization phase to the target database and detects primary key conflicts, the task will report an error .

Operation Restrictions

Avoid performing the following operations during migration to prevent migration task failures:

1. In scenarios involving structural migration and full migration, DDL operations are not supported during the Full Export step of the migration task.

2. Do not modify or delete user information (including usernames, passwords, and permissions) or port numbers in the source or target databases.

3. Avoid executing operations to clear Binlog on the source database.

Supported SQL operations

Operation Type | Supported SQL Operation |

DML | INSERT, UPDATE, DELETE, REPLACE |

DDL | TABLE: CREATE TABLE, ALTER TABLE, DROP TABLE, TRUNCATE TABLE, RENAME TABLE VIEW: CREATE VIEW, DROP VIEW INDEX: CREATE INDEX, DROP INDEX DATABASE: CREATE DATABASE, ALTER DATABASE, DROP DATABASE CREATE TABLE table name AS SELECT is not supported. |

Directions

1. Log in to the DTS console, select Data Migration on the left sidebar, and click Create Migration Task to enter the Create Migration Task page.

2. On the Create Migration Task page, select the types, regions, and specifications of the source and target instances and click Buy Now.

Configuration Item | Description |

Creation Mode | Select Create task. |

Billing Mode | Only the pay-as-you-go billing mode is supported. After the purchase is completed, fees will be charged only in the incremental migration stage but not task configuration and full migration stages. However, due to Tencent Cloud's unified requirements for pay-as-you-go billing, the fees for one hour's usage will be frozen after the purchase. For detailed billing rules, see Billing Overview. |

Source Instance Type | Select the source database type, which cannot be changed after purchase. Here, select MySQL. |

Source Instance Region | This refers to the source region of the DTS service. If the source database is TencentDB, select its region here. If the source database is self-built or in another cloud, select the region closest to it, so that DTS can choose the optimal migration path to reduce the migration time. |

Target Instance Type | Select the target database type, which cannot be changed after purchase. Here, select MySQL. |

Target Instance Region | Select the target database region. |

Specification | Select the specification of the migration link based on your business conditions. For the performance and billing details of different specifications, see Billing Overview. |

Quantity | You can purchase up to 10 migration tasks at a time. |

3. After the purchase is completed, the page automatically redirects to the data migration task list. Select the task you just purchased for configuration. If you have purchased tasks across multiple regions or for cross-region DTS, the task list displays them based on the target instance’s region. You can switch regions at the top of the page to locate the purchased tasks.

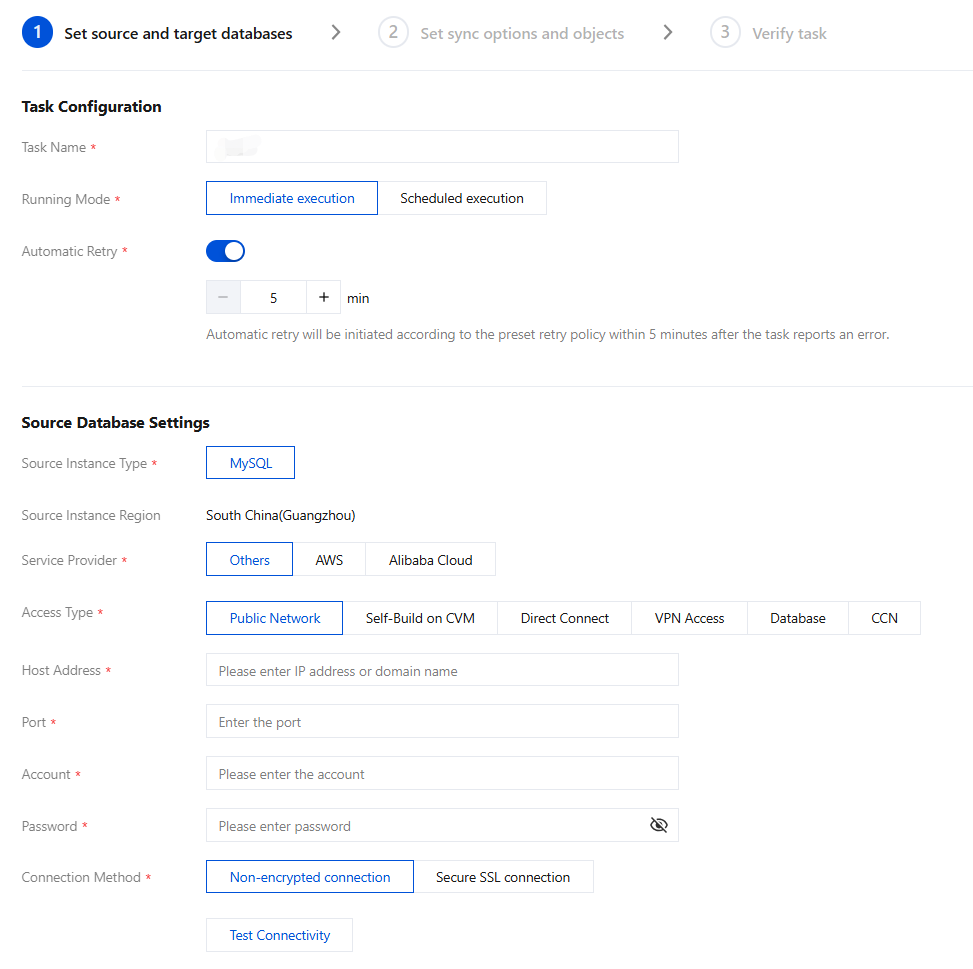

4. On the Set source and target databases page, configure the task, source database, and target database settings. After the source and target databases pass the connectivity test, click Save.

Notes:

If the connectivity test fails, troubleshoot as prompted or as instructed in Database Connection Check and try again.

Task Configuration

Parameter | Description |

Task Name | DTS automatically generates a task name. It is recommended to change it to a meaningful name for easier task identification. |

Running Mode | Immediate execution: The task is started immediately after passing the pre-verifications. Scheduled execution: Set a specific start time for the task. The task does not start immediately after passing the pre-verifications but waits until the scheduled time. |

Automatic Retry | Once it is enabled, if a synchronization task is temporarily interrupted due to network issues or similar causes, DTS will automatically retry and resume the task within the specified time frame without requiring manual intervention. The supported range is 5 to 720 minutes. |

Source Database Settings

Parameter | Description |

Source Instance Type | The type of source instance selected at the time of purchase, which cannot be modified. |

Source Instance Region | The region of the source instance selected at the time of purchase, which cannot be modified. |

Service Provider | For self-built databases (including those on CVMs), Tencent Cloud database instances, and lightweight databases, select Others. For third-party cloud provider databases, select the corresponding service provider. |

Access Type | Choose based on the deployment setup of the source database. Different deployment scenarios require specific network configurations. For details, see Network Preparation Work Overview. For source databases hosted on self-built IDC environments or other cloud providers, the access types include Public network/DC/VPN access/CCN. Public Network: The source database can be accessed via a public IP address. Direct Connect: The source database can connect to Tencent Cloud VPC using DC. VPN Access: The source database can connect to Tencent Cloud VPC using VPN Connections. CCN: The source database can connect to Tencent Cloud VPC using CCN. VPC: Both the source data and target database are deployed on Tencent Cloud and within a VPC. If you need to use the VPC access type, submit a ticket for application. If the source database is self-built on a CVM, select Self-built on CVM as the access type. If the source database is a Tencent Cloud database instance, select Cloud Database as the access type. |

Public Network | When Public Network is selected as the Access Type, configure the following parameters: Host Address: The IP address or domain name of the source database. Port: The port used by the source database. |

Self-Build on CVM | When Self-Build on CVM is selected as the Access Type, configure the following parameters: CVM Instance: The instance ID of the CVM. Port: The port used by the source database. |

Direct Connect | When Direct Connect is selected as the Access Type, configure the following parameters: VPC-Based Direct Connect Gateway: Only VPC Direct Connect Gateway is supported for DC. Confirm the network type associated with the gateway. VPC: Select the VPC and subnet. Host Address: The IP address of the source database. Port: The port used by the source database. |

VPN Access | When VPN Access is selected as the Access Type, configure the following parameters: VPN gateway: Select the VPN gateway based on its ID. VPC: Select the VPC and subnet associated with the VPN gateway. Host Address: The IP address of the source database. Port: The port used by the source database. |

Database | When Database is selected as the Access Type, configure the following parameters: Cross-/Intra-Account Intra-account: The source and target database instances belong to the same Tencent Cloud root account. Cross-account: The source and target database instances belong to different Tencent Cloud root accounts. For cross-account operations, see Cloud Database Cross-Account Instance Synchronization. Database Instance: The instance ID of the source database. |

CCN | When CCN is selected as the Access Type, configure the following parameters: CCN access supports both same-account and cross-account configurations. Due to the complexity of network configuration, see Migrate Self-built Database to Tencent Cloud Database via CCN for detailed guidance. Host Network Environment: Select based on the actual scenario. For example, select Tencent Cloud if the source database is a Tencent Cloud database instance, Self-built IDC if it is a self-built IDC database, or the corresponding network for databases from other cloud providers. Host Address: The host IP address of the source database. Port: The port used by the source database. CCN Instance Account Type My account: The CCN resources and target database belong to the same Tencent Cloud root account. Other account: The CCN resources and target database belong to different Tencent Cloud root accounts. VPC-Based CCN Instance: Name of the CCN instance. CCN-associated VPC and subnet: The CCN-associated VPC refers to the VPC connected to the synchronization linkage within the CCN. Select a VPC from all the VPCs associated with the CCN, excluding the VPC of the source database. Region of the VPC: The region of the VPC used for connection should match the region of the source database selected during task purchase. If there is a mismatch, DTS will automatically update the source database region to align with the VPC region. |

Account/Password | Account/Password: The account and password of the source database. |

Connection Method | Currently, if users want to experience the SSL secure connection feature, submit a ticket for application. SSL secure connection refers to encrypting the transmission linkage between DTS and the database using SSL. Enabling SSL secure connection may increase the connection response time of the database. Generally, Tencent Cloud’s private network linkage is relatively secure, and enabling SSL secure connection is not necessary. However, for scenarios using public network, DC, or other transmission methods where higher data security is required, enabling SSL secure connection is recommended. Ensure that SSL encryption is enabled in the database before selecting SSL secure connection. |

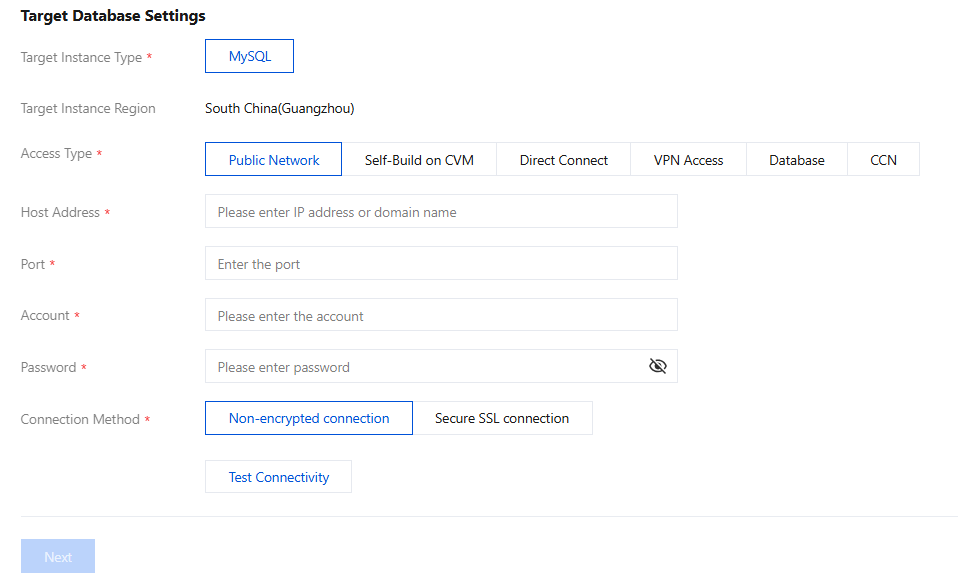

Target Database Settings

The parameter configuration for the target database is similar to that of the source database. Select the access type based on the actual situation; further explanation is omitted here.

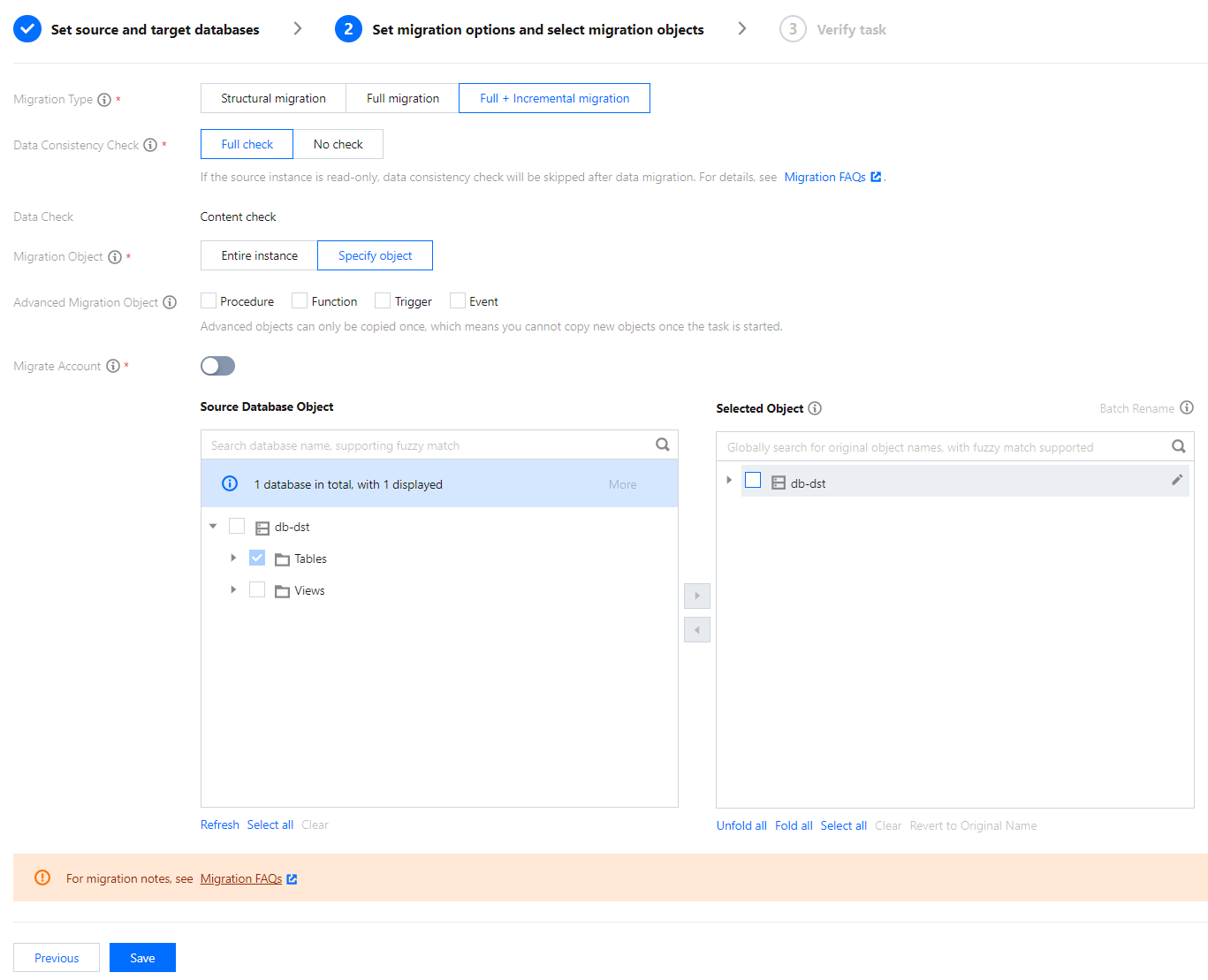

5. On the Set migration options and select migration objects page, configure the migration type and objects and click Save.

Notes:

If you want to rename a table (for example, rename table A table B) during migration, you must select the entire database (or entire instance) where table A resides rather than only table A as the migration object; otherwise, the system will report an error.

Configuration Item | Description |

Migration Type | Select an option based on your scenario. Structural migration: Structured data such as databases and tables in the database will be migrated. Full migration: The database/table structure and data of the entire database will be migrated. The migrated data will only be existing content of the source database when the task is initiated but not include the incremental data written to the source database after the task is initiated. Full + Incremental migration: The database/table structure and data of the entire database will be migrated. The migrated data will include the existing content of the source database when the task is initiated as well as the incremental data written to the source database after the task is initiated. If there are data writes to the source database during migration, and you want to smoothly migrate the data in a non-stop manner, select this option. |

Data Consistency Check | If Full + Incremental migration is selected, you can perform data consistency check to carefully compare the data in the source and target databases after migration. If Full check is selected, when the migration task enters the "incremental sync" step, the time lag between them is 0 seconds, and the data gap between the target and the source databases is 0 MB, DTS will automatically trigger a data consistency check task. If Full check is not selected, you can manually trigger a check task when the task enters the "incremental sync" step. For more information, see Creating Data Consistency Check Task. |

Migration Object | Entire instance: Migrate the entire database instance excluding the system databases such as information_schema, mysql, performance_schema, and sys.Specified objects: Migrate specified objects. |

Advanced Migration Object | Procedures, functions, triggers, and events can be migrated. The migration of advanced objects is a one-time operation: only advanced objects already in the source database before the task start can be migrated, while those added to the source database after the task start will not be synced to the target database. Procedures and functions will be migrated during source database export. If there are no incremental migration tasks, triggers and events will be migrated when the task stops; otherwise, they will be migrated after you click Done, in which case the transition will take a slightly longer time. |

Selected Object | Database/Table mapping (renaming) is supported. Hover over a database or table name, click the displayed Edit icon, and enter a new name in the pop-up window. We recommend you not rename tables when migrating advanced objects; otherwise, the migration may fail. |

Sync Online DDL Temp Table | If you perform an online DDL operation on tables in the source database with the gh-ost or pt-osc tool, DTS can migrate the temp tables generated by online DDL changes to the target database. If you select gh-ost, DTS will migrate the temp tables ( _table name_ghc, _table name_gho, and _table name_del) generated by the gh-ost tool to the target database.If you select pt-osc, DTS will migrate the temp tables ( _table name_new and _table name_old) generated by the pt-osc tool to the target database. |

Advanced Options

Configuration Item | Description |

Migrate accounts | Check this feature if you need to migrate account information from the source database to the target database. For more details, see Account Migration. In migration linkages with AWS as the source, users who migrate accounts should manually install the auth_socket plugin in the target database. |

Limit transmission rate | This configuration is generally not required. It should only be adjusted when the database’s configuration is insufficient to handle the default DTS concurrent thread count and RPS. For more details, see Rate Limiting. |

6. On the task verification page, verify the task. After the verification is passed, click Start Task.

If the verification fails, fix the problem as instructed in Check Item Overview and initiate the verification again. You can skip certain check items by blocking them after the verification fails. Then, you need to initiate the verification again to continue the task.

Failed: It indicates that a check item fails and the task is blocked. You need to fix the problem and run the verification task again.

Alarm: It indicates that a check item doesn't completely meet the requirements, and the task can be continued, but the business will be affected. You need to assess whether to ignore the alarm or fix the problem and continue the task based on the alarm message.

If you select Migrate Account, the verification task will check the account information of the source database and migrate accounts meeting the requirements. DTS won't migrate ineligible accounts or will migrate them with fewer permissions. For more information, see Account Migration.

7. Return to the data migration task list, and you can see that the task has entered the Preparing status. After 1–2 minutes, the data migration task will be started.

Select Structural migration or Full migration: Once completed, the task will be stopped automatically.

Select Full + Incremental migration: After full migration is completed, the migration task will automatically enter the incremental data sync stage, which will not stop automatically. You need to click Complete to manually stop the incremental data migration.

Manually complete incremental data migration and business switchover at appropriate time.

Check whether the migration task is in the incremental migration stage without any lag. If so, stop writing data to the source database for a few minutes.

Manually complete incremental migration when the data gap between the target and the source databases is 0 KB and the time lag between them is 0 seconds.

8. (Optional) If you want to view, delete, or perform other operations on a task, click the task and select the target operation in the Operation column. For more information, see Viewing Task.

9. After the migration task status becomes Task successful, you can formally cut over the business. For more information, see Cutover Description.

Was this page helpful?

You can also Contact Sales or Submit a Ticket for help.

Yes

No

Feedback