Introduction

TKE Backup Center provides integrated solutions for the backup, restoration and migration of applications. This document describes how to create a backup repository.

Prerequisites

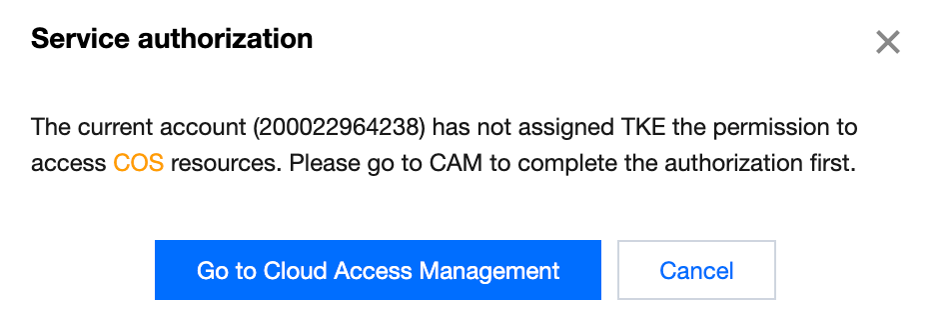

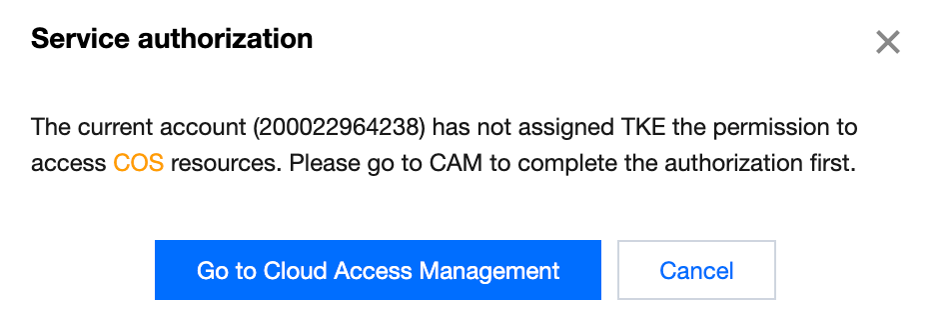

Log in to the COS console. Create a COS bucket, which is used as the underlying storage of the backup repository. A TKE role accesses your COS bucket with the minimum required permission. The bucket name must start with tke-backup. For operation details, see Creating A Bucket.

Grant read-write permission on COS objects to the TKE role: assign the policy QcloudAccessForTKERoleInCOSObject to the role TKE_QCSRole.
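The bucket naming rule above is easy to check before you open the console. The following is a minimal sketch, not part of any TKE or COS tooling: the helper name `is_backup_bucket_name` and the sample bucket names are hypothetical, and only the `tke-backup` prefix comes from this document.

```python
# Prefix required for backup repository buckets, as stated in this document.
REQUIRED_PREFIX = "tke-backup"


def is_backup_bucket_name(bucket_name: str) -> bool:
    """Return True if the bucket name starts with the required prefix."""
    return bucket_name.startswith(REQUIRED_PREFIX)


# Sample names are placeholders used only for illustration.
for candidate in ("tke-backup-prod-1250000000", "app-logs-1250000000"):
    status = "ok" if is_backup_bucket_name(candidate) else "rejected"
    print(f"{candidate}: {status}")
```

A check like this only covers the prefix; other COS naming constraints still apply when the bucket is created.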

Directions

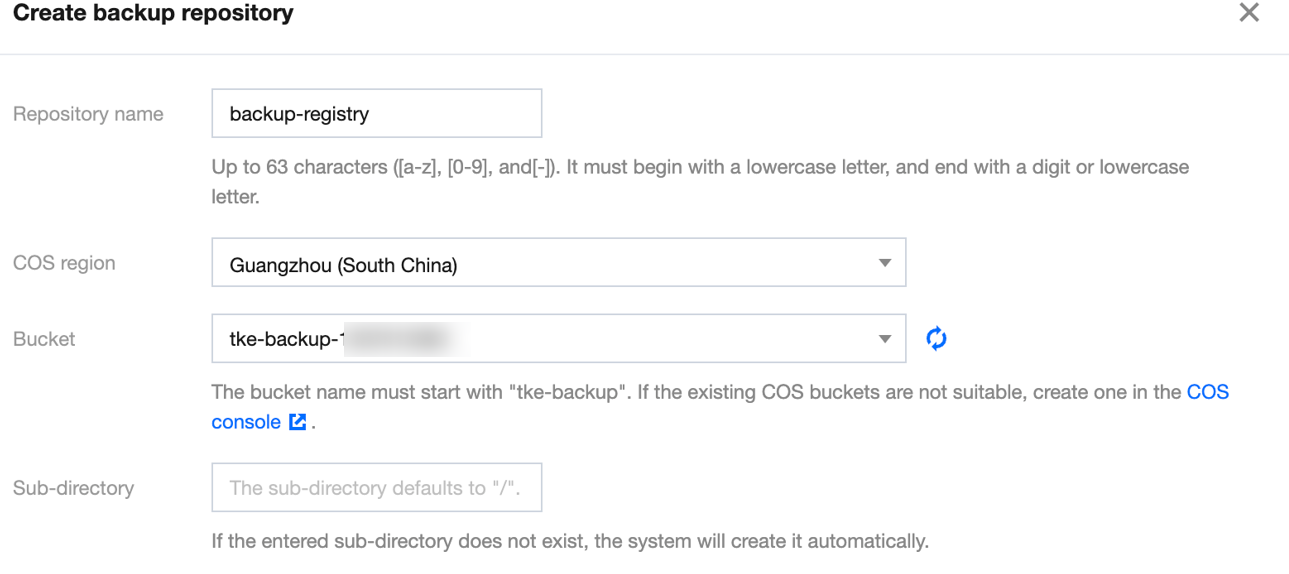

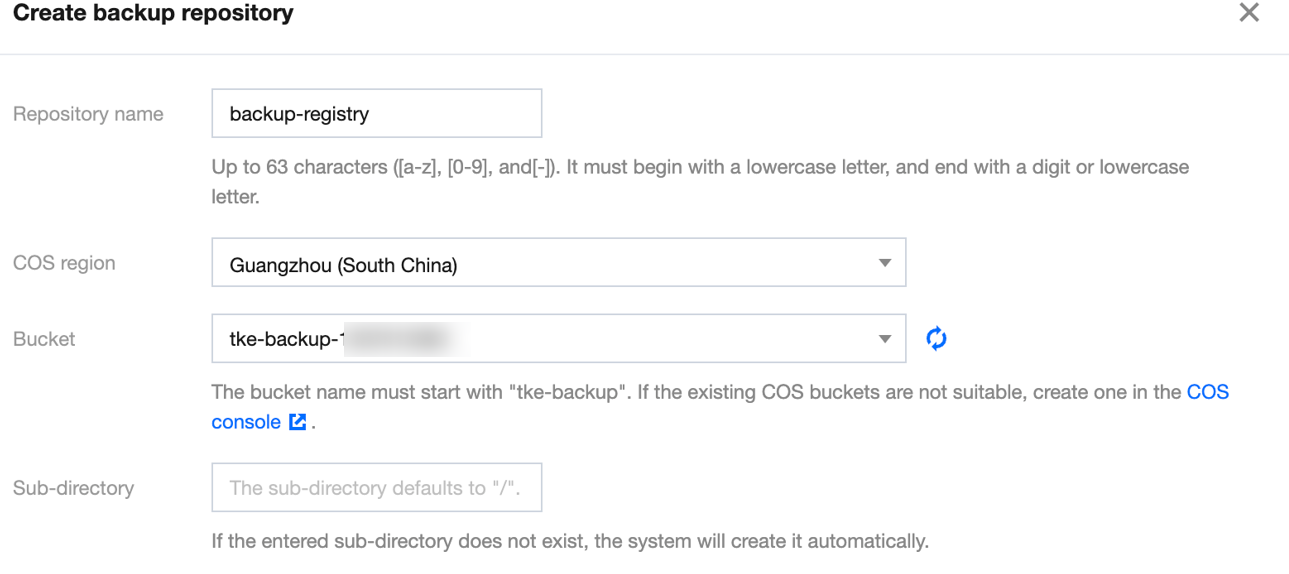

1. Log in to the TKE console and select Backup Center > Backup Repository in the left sidebar.

2. Click Create on the page that appears.

3. Enter the basic information of the repository.

   - Repository name: The name of the backup repository.

   - COS region: The region where the COS bucket is located.

   - Bucket: Select a bucket whose name starts with "tke-backup". If no existing bucket is suitable, create one in the COS console.

4. Click OK.

Note:

A backup repository can be used by multiple TKE clusters.

When a repository is deleted, the backup resources associated with it cannot be restored normally.

When a repository is deleted, the underlying storage resources are not affected. You can go to the COS console for further operations.
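If you script repository creation instead of using the console, the same three fields have to be collected and validated first. The sketch below models them in Python and renders them as request parameters. It rests on assumptions: the class `BackupRepository` is hypothetical, the parameter names `Name`, `StorageRegion`, and `Bucket` are assumed for the TKE `CreateBackupStorageLocation` action and should be confirmed against the API reference, and the region and bucket values are placeholders. No request is sent.

```python
import json
from dataclasses import dataclass

# Prefix required for backup repository buckets, as stated in this document.
BUCKET_PREFIX = "tke-backup"


@dataclass
class BackupRepository:
    """Hypothetical container for the three fields entered in the console."""

    name: str
    cos_region: str
    bucket: str

    def validate(self) -> None:
        """Raise ValueError if a field is empty or the bucket prefix is wrong."""
        if not self.name:
            raise ValueError("repository name must not be empty")
        if not self.cos_region:
            raise ValueError("COS region must not be empty")
        if not self.bucket.startswith(BUCKET_PREFIX):
            raise ValueError(f"bucket name must start with {BUCKET_PREFIX!r}")

    def to_request_params(self) -> dict:
        """Map the fields to ASSUMED API parameter names after validating."""
        self.validate()
        return {
            "Name": self.name,
            "StorageRegion": self.cos_region,
            "Bucket": self.bucket,
        }


# Placeholder values for illustration only.
repo = BackupRepository("demo-repo", "ap-guangzhou", "tke-backup-demo-1250000000")
print(json.dumps(repo.to_request_params(), indent=2))
```

Validating before building the parameters means a wrongly named bucket is rejected locally rather than by the service.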
