Hive JDBC Access

Last updated: 2024-09-04 11:16:44

Supported Engine Types

Standard Spark Engine

Environment Preparation

Dependency: JDK 1.8

JDBC driver download: hive-jdbc-3.1.2-standalone.jar

Connecting to the Standard Spark Engine

Creating a Service Access Link

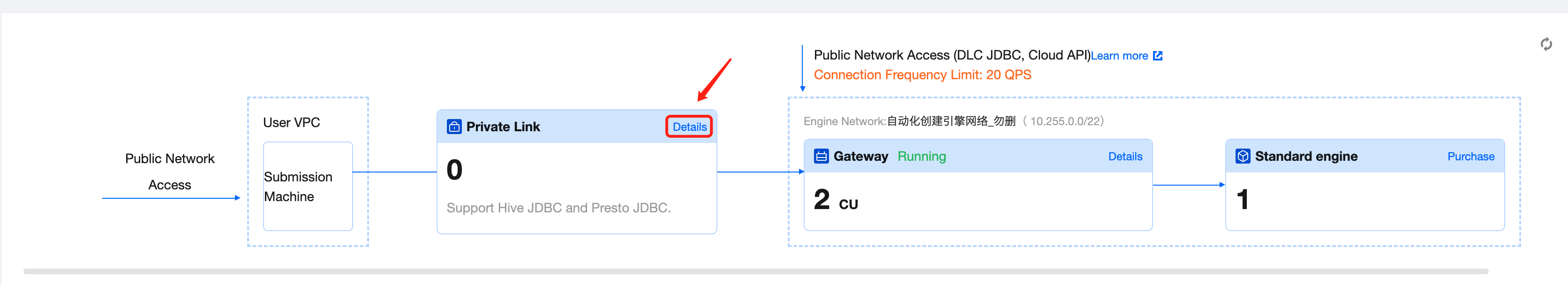

Go to the Standard Engine page and click Details for the gateway to open the gateway details page.

Click Create Private Link, select the VPC and subnet where the submission machine is located, and click Create. This generates two access links: one for the Hive2 protocol and one for the Presto protocol. Use the Hive2 link to connect to the Standard Spark Engine.

Note:

Creating a private connection will establish a network link between the engine network and the selected VPC. The submission machine can be any server within the selected VPC that is accessible and can be used for task submission. If there is no submission machine available in the selected VPC, you can create a new server to serve as the submission machine.
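Before running any JDBC code, it can help to confirm from the submission machine that the private link endpoint accepts TCP connections on port 10009, the port used in the Hive2 URL. The following is a minimal sketch; the class name `EndpointCheck` and the 3-second timeout are illustrative assumptions, and a successful check only shows network reachability, not that authentication or the engine itself will work.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/**
 * Hypothetical pre-flight check, run on the submission machine, that tries to
 * open a TCP connection to the private link endpoint. It does not authenticate
 * and does not speak the Hive2 protocol.
 */
public class EndpointCheck {

    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Timeout, refused connection, or a host name that cannot be resolved.
            return false;
        }
    }

    public static void main(String[] args) {
        if (args.length != 1) {
            System.err.println("usage: java EndpointCheck <endpoint-host>");
            return;
        }
        // 10009 is the port in the Hive2 JDBC URL; the timeout is an arbitrary choice.
        boolean ok = isReachable(args[0], 10009, 3000);
        System.out.println(args[0] + ":10009 reachable = " + ok);
    }
}
```

If the check fails, verify that the submission machine is in the VPC and subnet selected when the private link was created.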

The Hive2 JDBC URL has the following format:

jdbc:hive2://{endpoint}:10009/?spark.engine={DataEngineName};spark.resourcegroup={ResourceGroupName};secretkey={SecretKey};secretid={SecretId};region={Region};kyuubi.engine.type=SPARK_SQL;kyuubi.engine.share.level=ENGINE
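To avoid hand-editing the template, the URL can be assembled from its parts. This is a sketch built around a hypothetical `StandardSparkUrl.build` helper; the parameter names, port 10009, and the fixed values `SPARK_SQL` and `ENGINE` are copied from the URL format, while leaving out `spark.resourcegroup` when no resource group is given is an assumption based on that parameter being optional. All sample values in `main` are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical helper that fills in the Hive2 JDBC URL for the Standard Spark Engine. */
public class StandardSparkUrl {

    static String build(String endpoint, String engine, String resourceGroup,
                        String secretId, String secretKey, String region) {
        // LinkedHashMap keeps the parameters in the same order as the documented template.
        Map<String, String> vars = new LinkedHashMap<>();
        vars.put("spark.engine", engine);
        if (resourceGroup != null && !resourceGroup.isEmpty()) {
            // Optional parameter: omitted entirely when no resource group is given.
            vars.put("spark.resourcegroup", resourceGroup);
        }
        vars.put("secretkey", secretKey);
        vars.put("secretid", secretId);
        vars.put("region", region);
        vars.put("kyuubi.engine.type", "SPARK_SQL");
        vars.put("kyuubi.engine.share.level", "ENGINE");

        // Session variables follow "/?" and are separated by ";".
        StringJoiner joiner = new StringJoiner(";");
        for (Map.Entry<String, String> e : vars.entrySet()) {
            joiner.add(e.getKey() + "=" + e.getValue());
        }
        return "jdbc:hive2://" + endpoint + ":10009/?" + joiner;
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only.
        String url = build("10.0.0.8", "my-engine", "my-rg",
                "YourSecretId", "YourSecretKey", "ap-guangzhou");
        System.out.println(url);
    }
}
```

The returned string can be passed directly to `DriverManager.getConnection(url, properties)`.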

Loading the JDBC Driver

Class.forName("org.apache.hive.jdbc.HiveDriver");

Creating a Connection Using DriverManager

String url = "jdbc:hive2://{endpoint}:10009/?spark.engine={DataEngineName};spark.resourcegroup={ResourceGroupName};secretkey={SecretKey};secretid={SecretId};region={Region};kyuubi.engine.type=SPARK_SQL;kyuubi.engine.share.level=ENGINE";
Properties properties = new Properties();
// The user property carries your APPID and must be a string.
properties.setProperty("user", "{AppId}");
Connection cnct = DriverManager.getConnection(url, properties);

JDBC Connection String Parameter Descriptions

| Parameter | Required | Description |
|---|---|---|
| spark.engine | Yes | The name of the Standard Spark Engine. |
| spark.resourcegroup | No | The name of the Standard Spark Engine resource group. If it is not specified, temporary resources are created. |
| secretkey | Yes | The SecretKey from Tencent Cloud API Key Management. |
| secretid | Yes | The SecretId from Tencent Cloud API Key Management. |
| region | Yes | The region. DLC currently supports: ap-nanjing, ap-beijing, ap-beijing-fsi, ap-guangzhou, ap-shanghai, ap-chengdu, ap-chongqing, na-siliconvalley, ap-singapore, ap-hongkong, na-ashburn, eu-frankfurt, ap-shanghai-fsi. |
| kyuubi.engine.type | Yes | Must be set to SPARK_SQL. |
| kyuubi.engine.share.level | Yes | Must be set to ENGINE. |
| user | Yes | The user's APPID. It is passed as a connection property (Properties), not in the URL. |
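A quick offline check of a URL against the parameters above can catch typos before a connection attempt. The sketch below is a hypothetical `UrlParamCheck` class, not part of the Hive JDBC driver: it parses the `key=value` pairs after `?`, reports missing required parameters, checks the region against the list above (which may lag behind newly added regions), and expects the fixed values `SPARK_SQL` and `ENGINE` used in the URL format. The `user` property is passed separately and is not checked here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical offline validator for Standard Spark Engine JDBC URLs. */
public class UrlParamCheck {

    static final List<String> REQUIRED = Arrays.asList(
            "spark.engine", "secretkey", "secretid", "region",
            "kyuubi.engine.type", "kyuubi.engine.share.level");

    // Copied from the parameter table; update it if DLC adds regions.
    static final Set<String> REGIONS = new HashSet<>(Arrays.asList(
            "ap-nanjing", "ap-beijing", "ap-beijing-fsi", "ap-guangzhou", "ap-shanghai",
            "ap-chengdu", "ap-chongqing", "na-siliconvalley", "ap-singapore",
            "ap-hongkong", "na-ashburn", "eu-frankfurt", "ap-shanghai-fsi"));

    /** Returns human-readable problems; an empty list means every check passed. */
    static List<String> check(String url) {
        // Collect the key=value pairs that follow "?" and are separated by ";".
        Map<String, String> vars = new HashMap<>();
        int q = url.indexOf('?');
        if (q >= 0) {
            for (String pair : url.substring(q + 1).split(";")) {
                int eq = pair.indexOf('=');
                if (eq > 0) {
                    vars.put(pair.substring(0, eq), pair.substring(eq + 1));
                }
            }
        }
        List<String> problems = new ArrayList<>();
        for (String key : REQUIRED) {
            String value = vars.get(key);
            if (value == null || value.isEmpty()) {
                problems.add("missing required parameter: " + key);
            }
        }
        String region = vars.get("region");
        if (region != null && !region.isEmpty() && !REGIONS.contains(region)) {
            problems.add("region not in the documented list: " + region);
        }
        expectValue(vars, "kyuubi.engine.type", "SPARK_SQL", problems);
        expectValue(vars, "kyuubi.engine.share.level", "ENGINE", problems);
        return problems;
    }

    // Flags a parameter that is present but not set to its fixed value.
    private static void expectValue(Map<String, String> vars, String key,
                                    String expected, List<String> problems) {
        String value = vars.get(key);
        if (value != null && !value.isEmpty() && !expected.equals(value)) {
            problems.add(key + " must be " + expected + " but is " + value);
        }
    }
}
```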

Complete Example of Data Query

import org.apache.hive.jdbc.HiveStatement;
import java.sql.*;
import java.util.Properties;
public class TestStandardSpark {
    public static void main(String[] args) throws SQLException {
        // Register the Hive JDBC driver (hive-jdbc-3.1.2-standalone.jar must be on the classpath).
        try {
            Class.forName("org.apache.hive.jdbc.HiveDriver");
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
            return;
        }
        String url = "jdbc:hive2://{endpoint}:10009/?spark.engine={DataEngineName};spark.resourcegroup={ResourceGroupName};secretkey={SecretKey};secretid={SecretId};region={Region};kyuubi.engine.type=SPARK_SQL;kyuubi.engine.share.level=ENGINE";
        Properties properties = new Properties();
        properties.setProperty("user", "{AppId}");
        String sql = "SELECT * FROM dlc_test LIMIT 100";
        // try-with-resources closes the result set, statement, and connection even if the query fails.
        try (Connection connection = DriverManager.getConnection(url, properties);
             HiveStatement statement = (HiveStatement) connection.createStatement();
             ResultSet rs = statement.executeQuery(sql)) {
            while (rs.next()) {
                // Assumes column 1 of dlc_test is an integer and column 2 is a string.
                System.out.println(rs.getInt(1) + ":" + rs.getString(2));
            }
        }
    }
}
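The example reads columns 1 and 2 with fixed types, which only works if `dlc_test` has that schema. A more general option, sketched here as a hypothetical `RowFormatter` helper, uses `ResultSetMetaData` to print every column of the current row as a string, joined with `:`. Reading every value with `getString` is a simplifying assumption; code that needs typed values should use the matching getter per column.

```java
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.StringJoiner;

/** Hypothetical helper that formats the current row of any ResultSet. */
public class RowFormatter {

    static String formatRow(ResultSet rs) throws SQLException {
        ResultSetMetaData meta = rs.getMetaData();
        int columnCount = meta.getColumnCount();
        StringJoiner row = new StringJoiner(":");
        // JDBC column indexes start at 1; SQL NULL values are printed as "null".
        for (int i = 1; i <= columnCount; i++) {
            row.add(String.valueOf(rs.getString(i)));
        }
        return row.toString();
    }
}
```

To use it, replace the `println` inside the `while (rs.next())` loop with `System.out.println(RowFormatter.formatRow(rs));`.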

After compiling, package the program into a JAR file, upload it to the submission machine, and run it there with hive-jdbc-3.1.2-standalone.jar on the classpath.
