TencentOS Server Features

Last updated: 2024-11-22 17:16:57

In cloud-native scenarios, Namespace and Cgroup provide basic support for resource isolation, but the overall isolation capability of the container is still incomplete. Particularly, some resource statistics in the /proc and /sys file systems are not containerized, causing some commonly used commands (such as free and top) that run inside the container not to accurately display the usage of container resources.

To address this issue, the TencentOS kernel introduces a cgroupfs solution, aiming to improve the display of container resources. cgroupfs offers a virtual file system, including the /proc and /sys files needed for services from the container perspective, with the directory structure consistent with the global procfs and sysfs to ensure compatibility with user tools. When these files are actually read, cgroupfs dynamically generates the corresponding view of container information through the context of a reader process.

Mounting cgroupfs File System

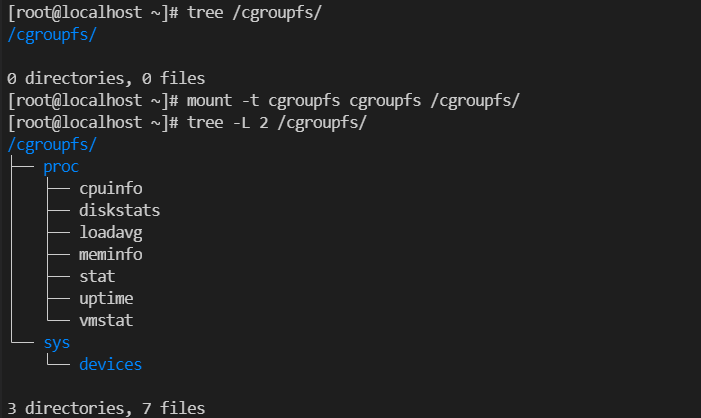

1. Run the following commands to mount a cgroupfs file system.

mount -t cgroupfs cgroupfs /cgroupfs/

The screenshot is shown as follows:

2. Mount the files from

cgroupfs to a specified container by using the option of -v. The command for enabling the docker is as follows:docker run -itd --cpus 2 --cpuset-cpus 0,1,2,4 -v/cgroupfs/sys/devices/system/cpu/:/sys/devices/system/cpu -v/cgroupfs/proc/cpuinfo:/proc/cpuinfo -v/cgroupfs/proc/stat:/proc/stat -v/cgroupfs/proc/meminfo:/proc/meminfo <image-id> /bin/bash

3. Then the information of cpuinfo, stat, and meminfo from the container perspective only can be viewed in the container.

Isolating pagecache

When the process file page is excessively used, a large amount of memory will be occupied, reducing the available memory for other services. This will make the memory allocation of a machine frequently fall into an insufficient path, which will easily trigger an "out of memory (OOM)" error. In the cloud-native scenarios, we extend the page cache of the kernel to achieve a page cache limit for a container, thereby limiting the page cache of a certain container.

pagecache Limit of the Complete Machine:

sysctl -w vm.memory_qos=1sysctl -w vm.pagecache_limit_global=1

echo x > /proc/sys/vm/pagecache_limit_ratio#(0 < x < 100) enables the page cache limit. When it is not 0, for example, 30, it means the page cache is limited to 30% of the total system memory.

The process of dirty page reclaim is relatively time-consuming.

pagecache_limit_ignore_dirty is used to determine whether to ignore dirty pages when the memory occupation of page cache is calculated. Its location is as follows:/proc/sys/vm/pagecache_limit_ignore_dirty.The default value is 1, which means to ignore dirty pages.

Set Reclaim Method for page cache:

/proc/sys/vm/pagecache_limit_async

1 means asynchronous reclaim of page cache. TencentOS will create a kernel thread of [kpclimitd], which is responsible for the reclaim of the page cache.

0 means synchronous reclaim, in which no dedicated reclaim thread will be created, and the reclaim will directly occur in the context of the process that triggers the page cache limit. The default value is 0.

pagecache Limit of Container:

In addition to supporting the pagecache limit at the global system level, TencentOS also supports the pagecache limit at the container level. The usage is as follows:

1. Enable the memory QOS:

sysctl -w vm.memory_qos=1

2. Disable the global pagecache limit:

sysctl -w vm.pagecache_limit_global=0

3. Enter the memcg where the container is located, and set the memory limit:

echo value > memory.limit_in_bytes

4. Set the maximum usage of pagecache as a percentage such as 10% of the current memory limit:

echo 10 > memory.pagecache.max_ratio

5. Set the reclaim ratio for pagecache after excess:

echo 5 > memory.pagecache.reclaim_ratio

Unified Read-Write Throttling

The original IO throttling scheme of the Linux kernel separates the read and write speeds, requiring the administrator to divide the IO bandwidth into separate read and write limits based on the service model and implement them separately. This leads to bandwidth waste. For example, if the configuration is 50 MB/s for reading and 50 MB/s for writing, but the actual IO bandwidth is 20 MB/s for reading and 50 MB/s for writing, then the bandwidth of 30 MB/s for reading will be wasted. To address this issue, TencentOS introduced a unified read-write throttling scheme. This scheme provides a unified read-write throttling configuration interface for customers in the user mode and dynamically allocates the read-write ratio based on the service traffic in the kernel mode. The usage is as follows:

Enter cgroup of the corresponding blkio service, and use the following configuration: echo MAJ:MIN VAL > FILE.

Wherein MAJ:MIN represents the device number, and FILE and VAL are as shown in the table below:

FILE | VAL |

blkio.throttle.readwrite_bps_device | Total Throttle of Read-Write Bps |

blkio.throttle.readwrite_iops_device | Total Throttle of Read-Write iops |

blkio.throttle.readwrite_dynamic_ratio | Dynamic Prediction of Read-Write Ratio: 0: Disabled. A fixed ratio of (Read: Write - 3:1) is used. 1-5: The dynamic prediction scheme is enabled. |

Throttling Support of buffer io

In the native kernel of Linux, cgroup v1 has certain defects in throttling the buffer IO. Buffer IO write-back is usually an asynchronous process. When the kernel proceeds with asynchronous dirty page flushing, it is unable to determine which blkio cgroup the IO should be submitted to, thereby unable to apply the corresponding blkio throttling policy.

Based on this, TencentOS has further improved the buffer IO throttling feature under cgroup v1, making it consistent with the buffer IO throttling feature based on cgroup v2. For cgroup v1, we provide a user mode interface, allowing the binding of the mem_cgroup that the page cache belongs to with the corresponding blkio cgroup, thereby enabling the kernel to throttle the buffer IO based on the binding information.

1. To enable the throttling feature of buffer IO, two features of kernel.io_qos and kernel.io_cgv1_buff_wb need to be enabled.

sysctl -w kernel.io_qos=1 # Enable IO QoS feature sysctl -w kernel.io_cgv1_buff_wb=1 # Enable Buffer IO feature (enabled by default)

2. To implement buffer IO throttling for containers, it is necessary to explicitly bind the corresponding memcg of the container with cgroup of blkcg. The operation is as follows:

echo /sys/fs/cgroup/blkio/A > /sys/fs/cgroup/memory/A/memory.bind_blkio

After binding, the throttling mechanism on the buffer IO resources provided by blkio cgroup for the container can be viewed through the following interfaces:

blkio.throttle.read_bps_device

blkio.throttle.write_bps_device

blkio.throttle.write_iops_device

blkio.throttle.read_iops_device

blkio.throttle.readwrite_bps_device

blkio.throttle.readwrite_iops_device

Asynchronous fork

When a large memory service executes the fork system call to create a subprocess, the duration of the fork calling process will be relatively long, causing the service to be in the kernel mode for an extended period and unable to process the service requests. Therefore, it is particularly necessary to optimize the fork time of the kernel for this scenario.

In Linux, during the default process of the processing fork in the kernel, the parent process needs to copy numerous process metadata to the subprocess, with the most time-consuming part being the copying of page tables, which usually occupies more than 97% of the fork calling time. The design philosophy of asynchronous fork is to transfer the work of copying page tables from the parent process to the subprocess, thereby shortening the time for the parent process to call the fork system call and fall into the kernel mode, making the application return to the user mode as soon as possible and handle the service requests, thereby resolving the performance jitter issue caused by fork.

This enabling and disabling of the feature is controlled by

cgroup, with the basic usage as follows:echo 1 > <cgroup directory>/memory.async_fork # Enable the asynchronous fork feature for the current cgroup directory echo 0 > <cgroup directory>/memory.async_fork # Disable the asynchronous fork feature for the current cgroup directory

The default value of this interface is 0, that is, disabled by default.

Was this page helpful?

You can also Contact Sales or Submit a Ticket for help.

Yes

No

Feedback