Declarative Operation Practice

Last updated: 2024-06-27 11:09:15

Operations Supported by Kubectl

| CRD Type | Operation | Command |
|---|---|---|
| MachineSet | Creating a native node pool | `kubectl create -f machineset-demo.yaml` |
| | Viewing the list of native node pools | `kubectl get machineset` |
| | Viewing the details of a native node pool | `kubectl describe ms machineset-name` |
| | Deleting a native node pool | `kubectl delete ms machineset-name` |
| | Scaling a native node pool | `kubectl scale --replicas=3 machineset/machineset-name` |
| Machine | Viewing native nodes | `kubectl get machine` |
| | Viewing the details of a native node | `kubectl describe ma machine-name` |
| | Deleting a native node | `kubectl delete ma machine-name` |
| HealthCheckPolicy | Creating a fault self-healing rule | `kubectl create -f demo-HealthCheckPolicy.yaml` |
| | Viewing the list of fault self-healing rules | `kubectl get HealthCheckPolicy` |
| | Viewing the details of a fault self-healing rule | `kubectl describe HealthCheckPolicy HealthCheckPolicy-name` |
| | Deleting a fault self-healing rule | `kubectl delete HealthCheckPolicy HealthCheckPolicy-name` |

Using CRD via YAML

MachineSet

For the parameter settings of a native node pool, refer to the Description of Parameters for Creating Native Nodes.

    apiVersion: node.tke.cloud.tencent.com/v1beta1
    kind: MachineSet
    spec:
      autoRepair: false # Fault self-healing switch
      displayName: test
      healthCheckPolicyName: # Self-healing rule name
      instanceTypes: # Model specification
      - S5.MEDIUM2
      replicas: 1 # Node quantity
      scaling: # Auto-scaling policy
        createPolicy: ZonePriority
        maxReplicas: 1
      subnetIDs: # Node pool subnet
      - subnet-nnwwb64w
      template:
        metadata:
          annotations:
            node.tke.cloud.tencent.com/machine-cloud-tags: '[{"tagKey":"xxx","tagValue":"xxx"}]' # Tencent Cloud tag
        spec:
          displayName: tke-np-mpam3v4b-worker # Custom display name
          metadata:
            annotations:
              annotation-key1: annotation-value1 # Custom annotations
            labels:
              label-test-key: label-test-value # Custom labels
          providerSpec:
            type: Native
            value:
              dataDisks: # Data disk parameters
              - deleteWithInstance: true
                diskID: ""
                diskSize: 50
                diskType: CloudPremium
                fileSystem: ext4
                mountTarget: /var/lib/containerd
              instanceChargeType: PostpaidByHour # Node billing mode
              keyIDs: # Node login SSH parameters
              - skey-xxx
              lifecycle: # Custom script
                postInit: echo "after node init"
                preInit: echo "before node init"
              management: # Settings of Management parameters, including kubelet, kernel, nameserver, and hostname
              securityGroupIDs: # Security group configuration
              - sg-xxxxx
              systemDisk: # System disk configuration
                diskSize: 50
                diskType: CloudPremium
          runtimeRootDir: /var/lib/containerd
          taints: # Taints, not required
          - effect: NoExecute
            key: taint-key2
            value: value2
      type: Native
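Before creating a node pool from a manifest like the one above, it can help to preview what kubectl would submit. The following is a minimal sketch, not part of the TKE documentation: the function name `preview_manifest` and the `KUBECTL` override variable are illustrative assumptions. It relies on the standard `--dry-run=client` option of `kubectl create`, which prints the object without persisting it. A client-side dry run does not exercise server-side checks, so a manifest that previews cleanly may still be rejected when it is actually created.

```shell
# Sketch: preview a manifest without creating anything in the cluster.
# KUBECTL is a hypothetical override, e.g. KUBECTL="echo kubectl" to print the command only.
KUBECTL="${KUBECTL:-kubectl}"

# preview_manifest FILE
#   FILE  path to a manifest such as machineset-demo.yaml
preview_manifest() {
  # Fail early if the manifest file is missing.
  if [ ! -f "$1" ]; then
    echo "manifest not found: $1" >&2
    return 1
  fi
  # --dry-run=client renders the object locally and does not persist it.
  $KUBECTL create --dry-run=client -o yaml -f "$1"
}
```

For example, `preview_manifest machineset-demo.yaml` prints the rendered object, after which `kubectl create -f machineset-demo.yaml` performs the real creation.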

Kubectl Operation Demo

MachineSet

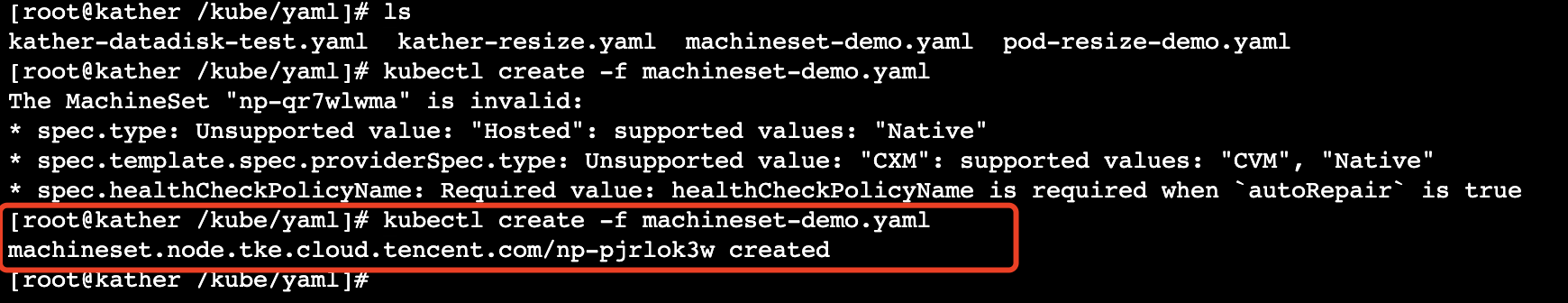

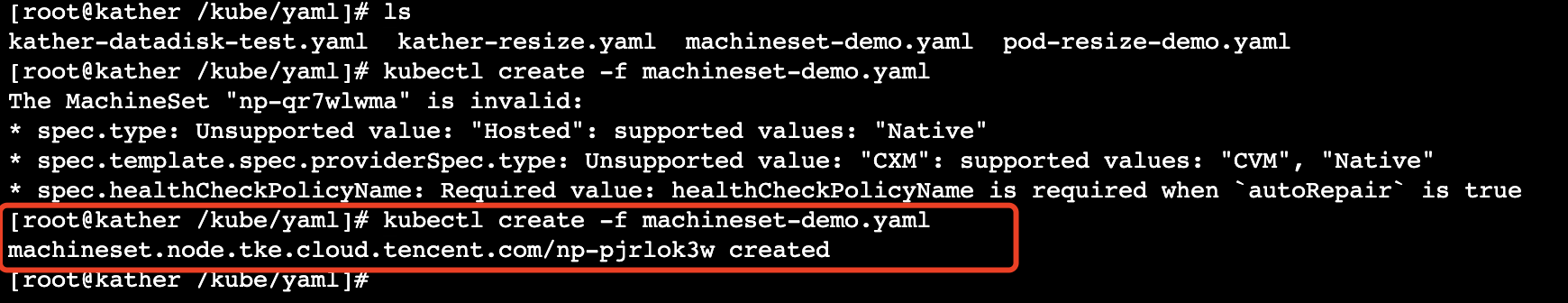

1. Run the `kubectl create -f machineset-demo.yaml` command to create a MachineSet based on the preceding YAML file.

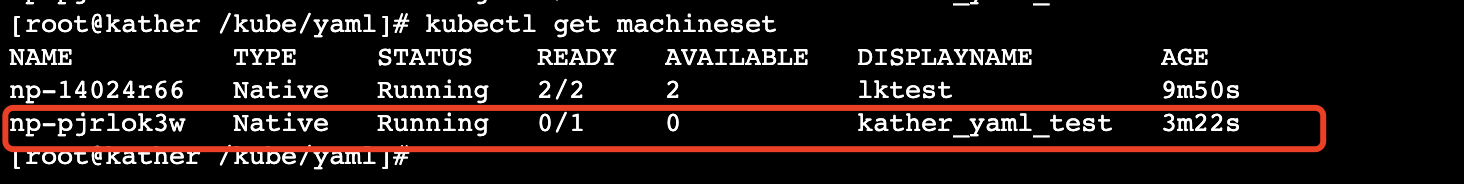

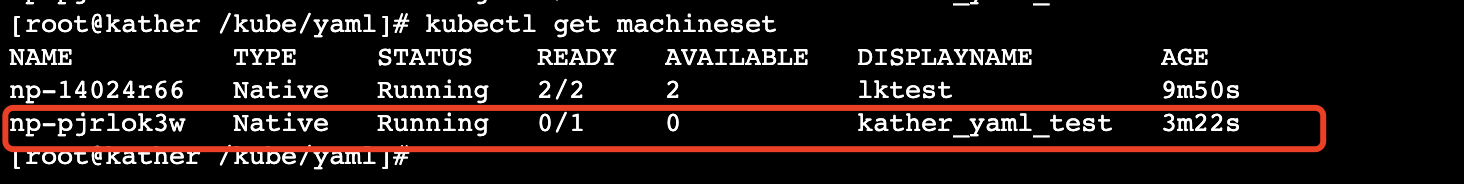

2. Run the

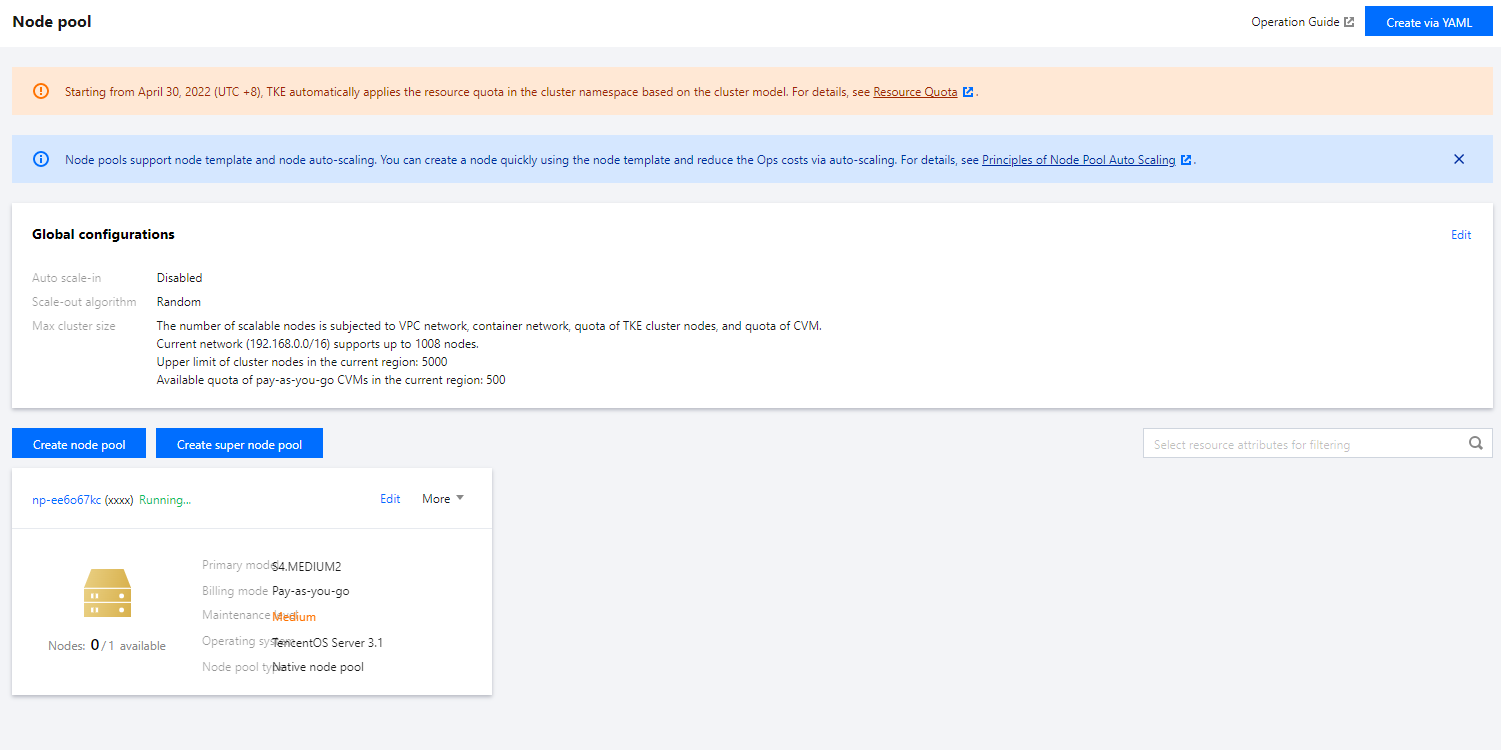

kubectl get machineset command to view the status of the MachineSet np-pjrlok3w. At this time, the corresponding node pool already exists in the console, and its node is being created.

3. Run the

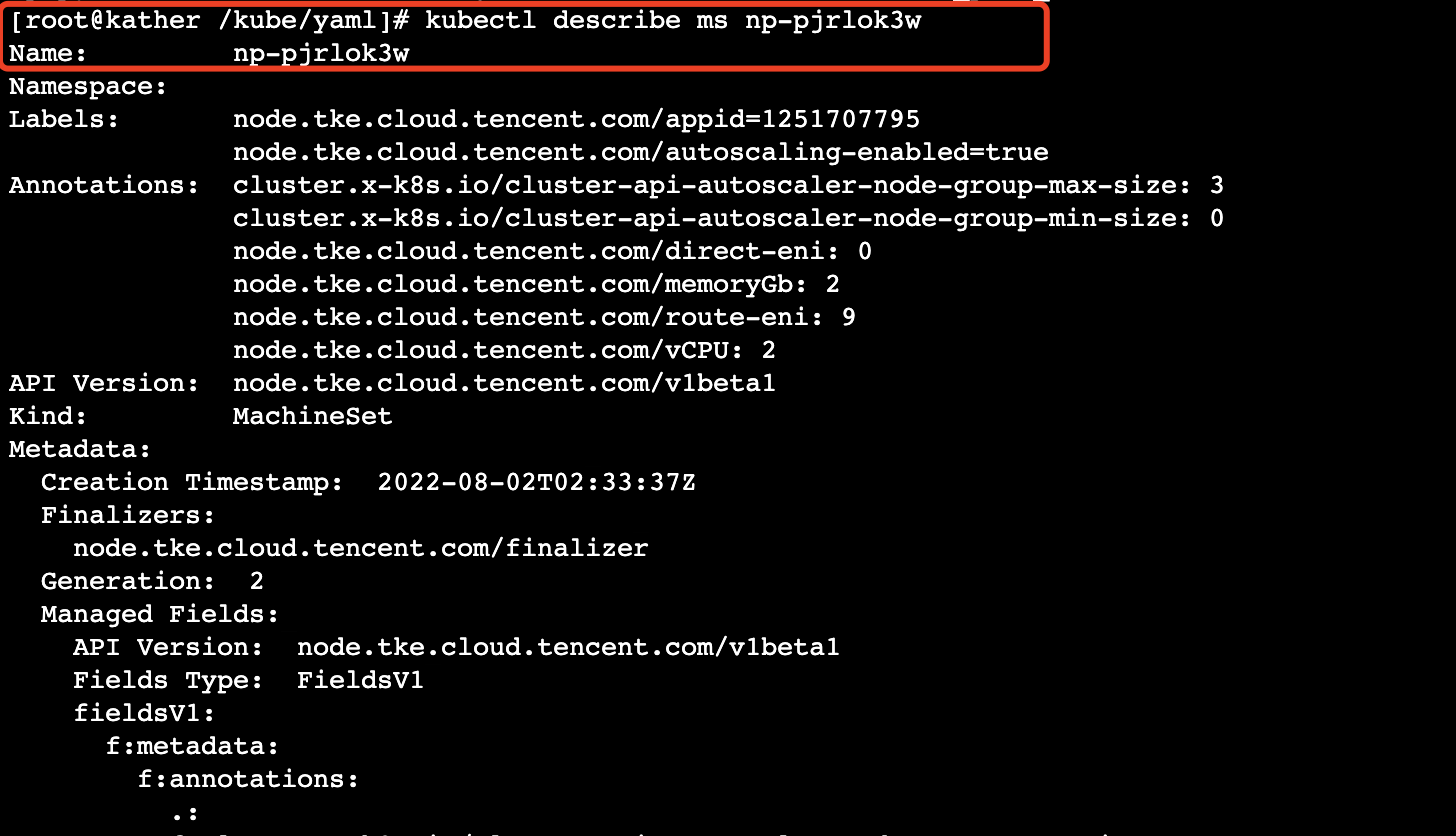

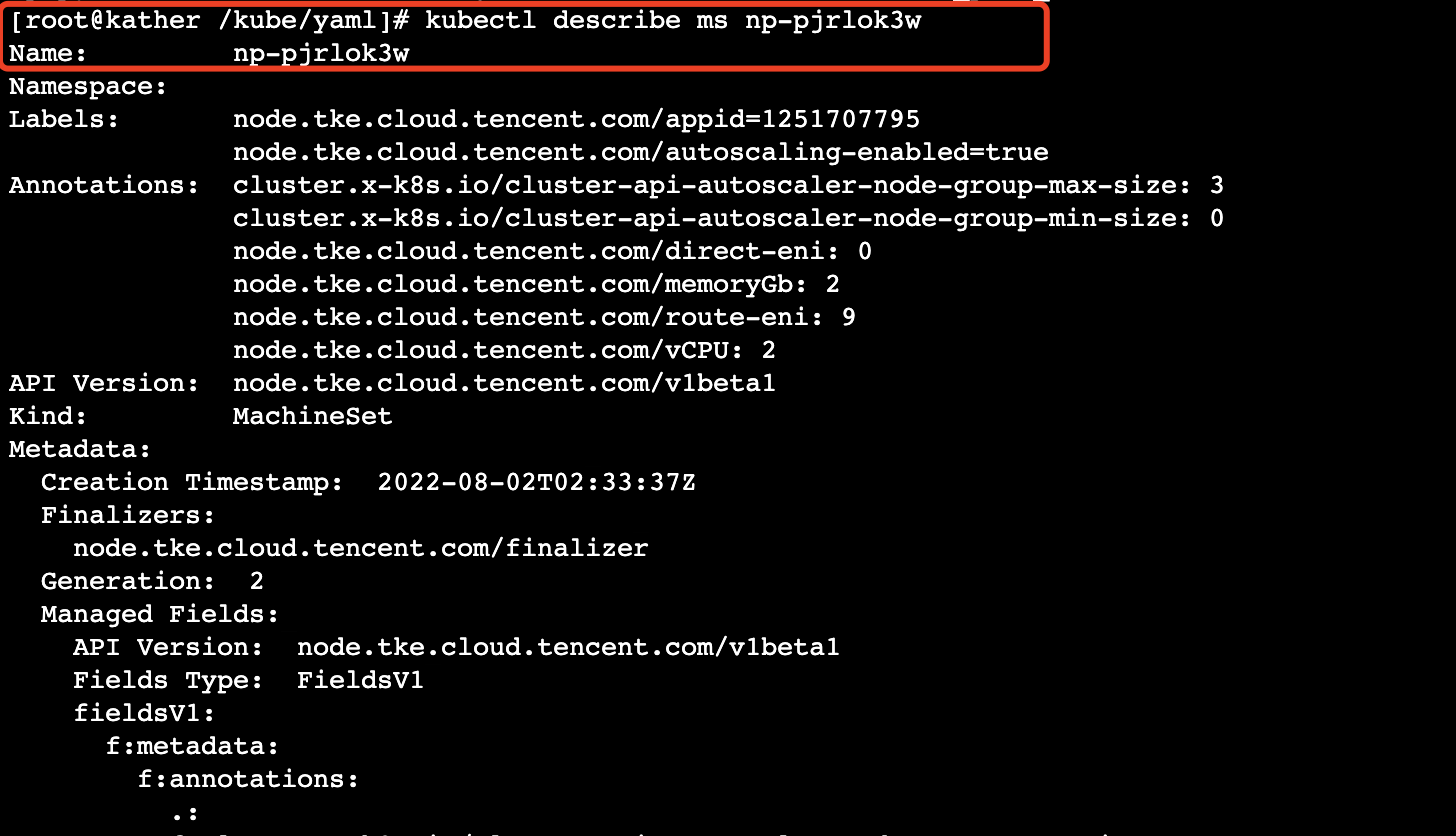

kubectl describe machineset np-pjrlok3w command to view the description of the MachineSet np-pjrlok3w.

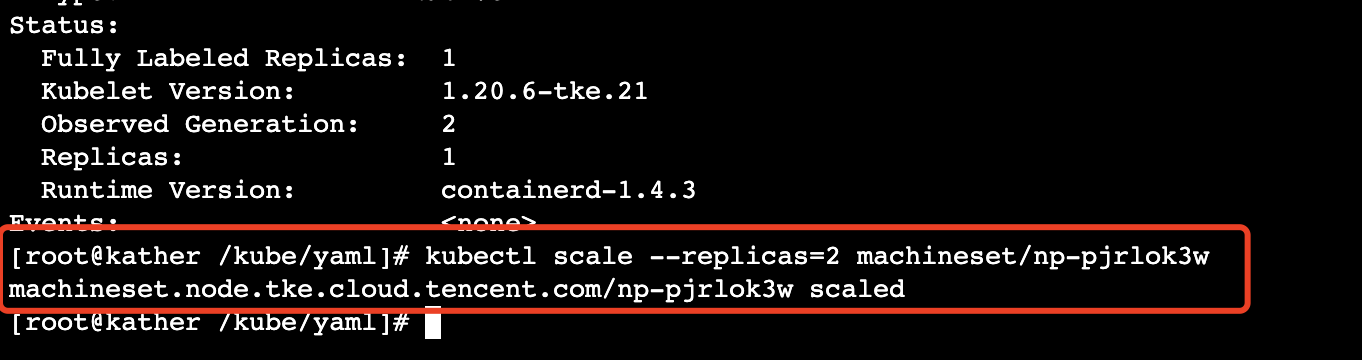

4. Run the

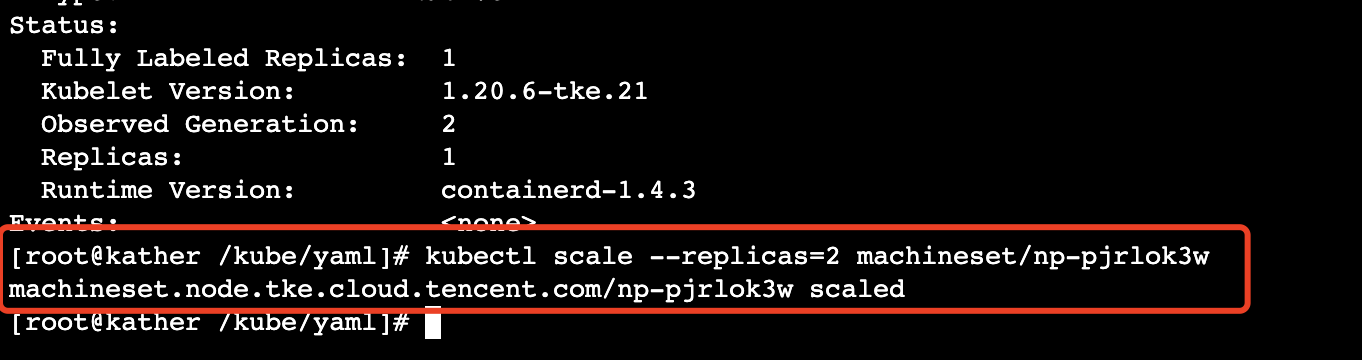

kubectl scale --replicas=2 machineset/np-pjrlok3w command to execute scaling of the node pool.

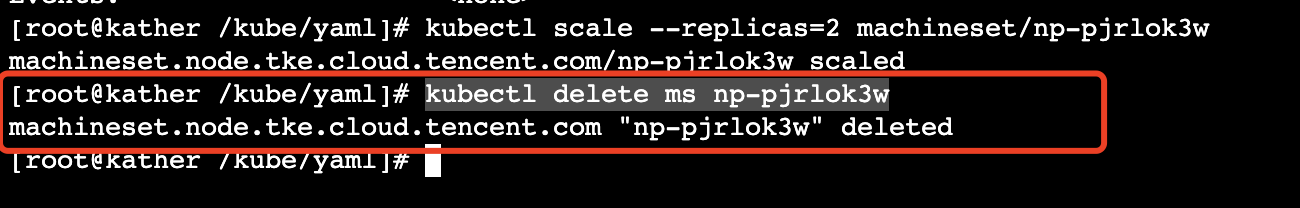

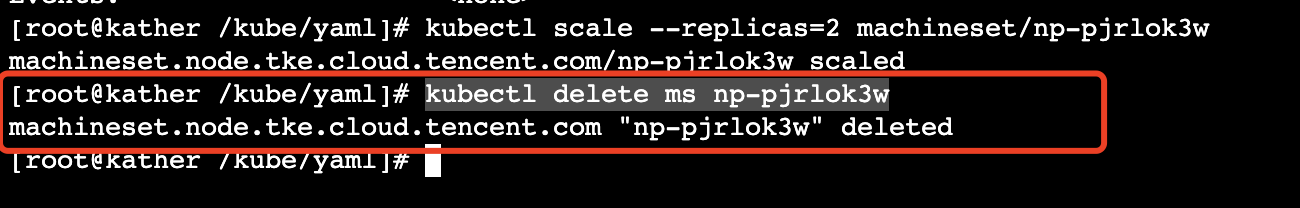

5. Run the `kubectl delete ms np-pjrlok3w` command to delete the node pool.
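The scaling in step 4 changes the `replicas` field shown in the MachineSet YAML. As a hedged alternative sketch, the same field can be set with `kubectl patch` and a merge patch, which is convenient in scripts that already build JSON. The function name `set_pool_replicas` and the `KUBECTL` override variable are illustrative assumptions, and the sketch assumes that `spec.replicas` is the field `kubectl scale` updates for this CRD.

```shell
# Sketch: set spec.replicas of a MachineSet with a merge patch.
# KUBECTL is a hypothetical override, e.g. KUBECTL="echo kubectl" to print the command only.
KUBECTL="${KUBECTL:-kubectl}"

# set_pool_replicas POOL COUNT
#   POOL   MachineSet name, e.g. np-pjrlok3w
#   COUNT  desired number of nodes (non-negative integer)
set_pool_replicas() {
  if [ "$#" -ne 2 ]; then
    echo "usage: set_pool_replicas POOL COUNT" >&2
    return 2
  fi
  # Reject anything that is not a plain non-negative integer.
  case "$2" in
    ''|*[!0-9]*) echo "COUNT must be a non-negative integer" >&2; return 2 ;;
  esac
  $KUBECTL patch machineset "$1" --type merge -p "{\"spec\":{\"replicas\":$2}}"
}
```

For example, `set_pool_replicas np-pjrlok3w 2` is intended to have the same effect as the `kubectl scale` command in step 4.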

Machine

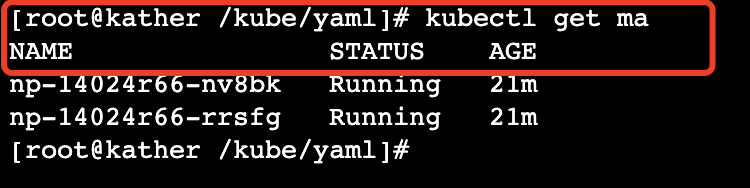

1. Run the

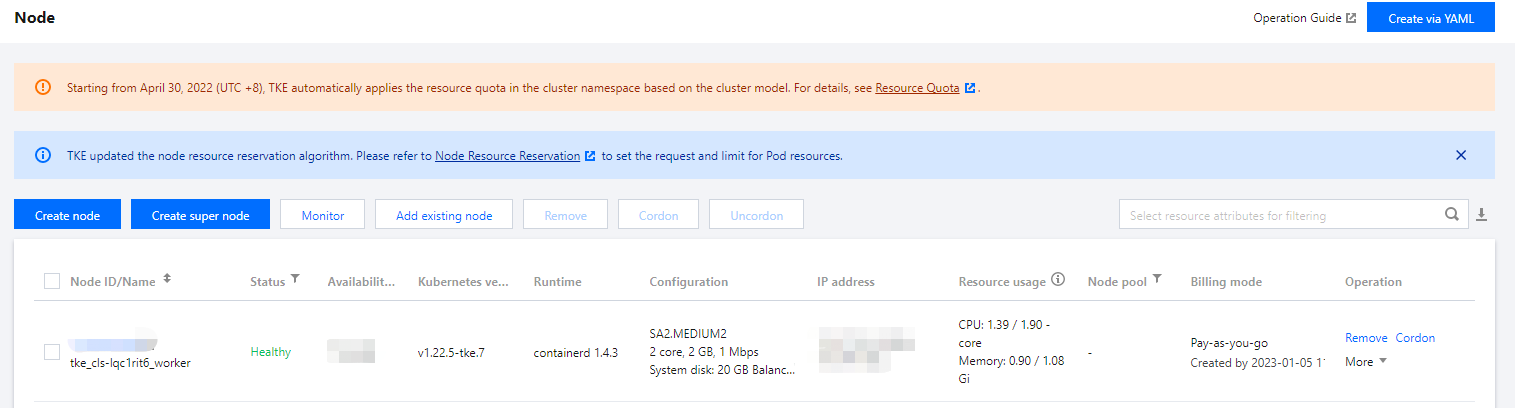

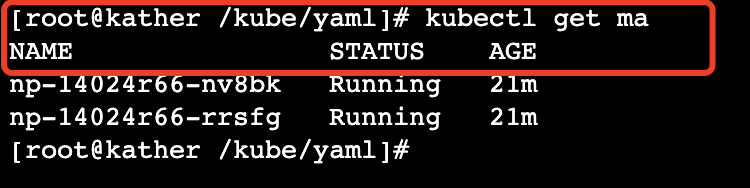

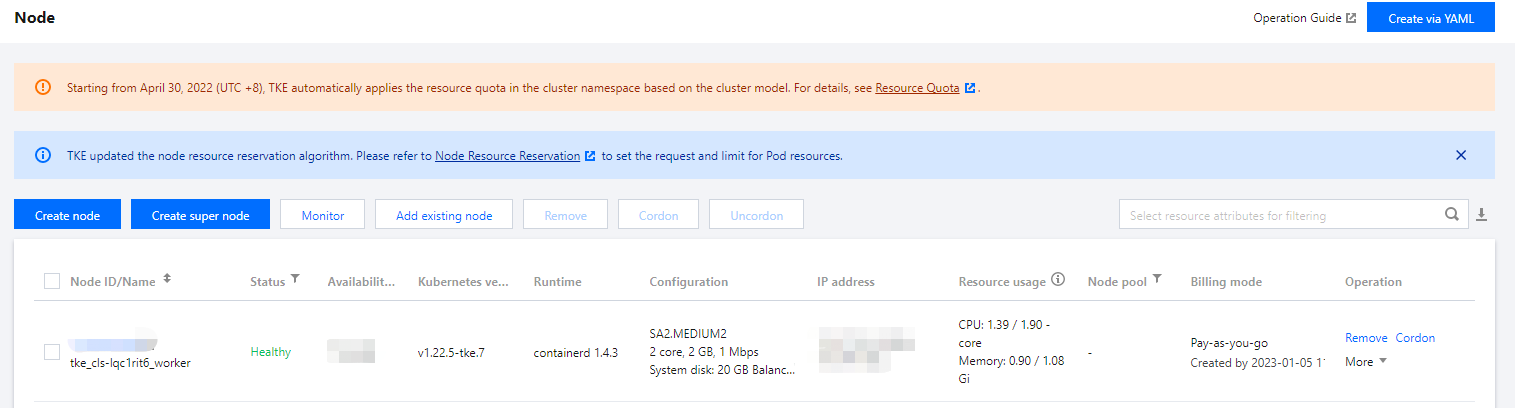

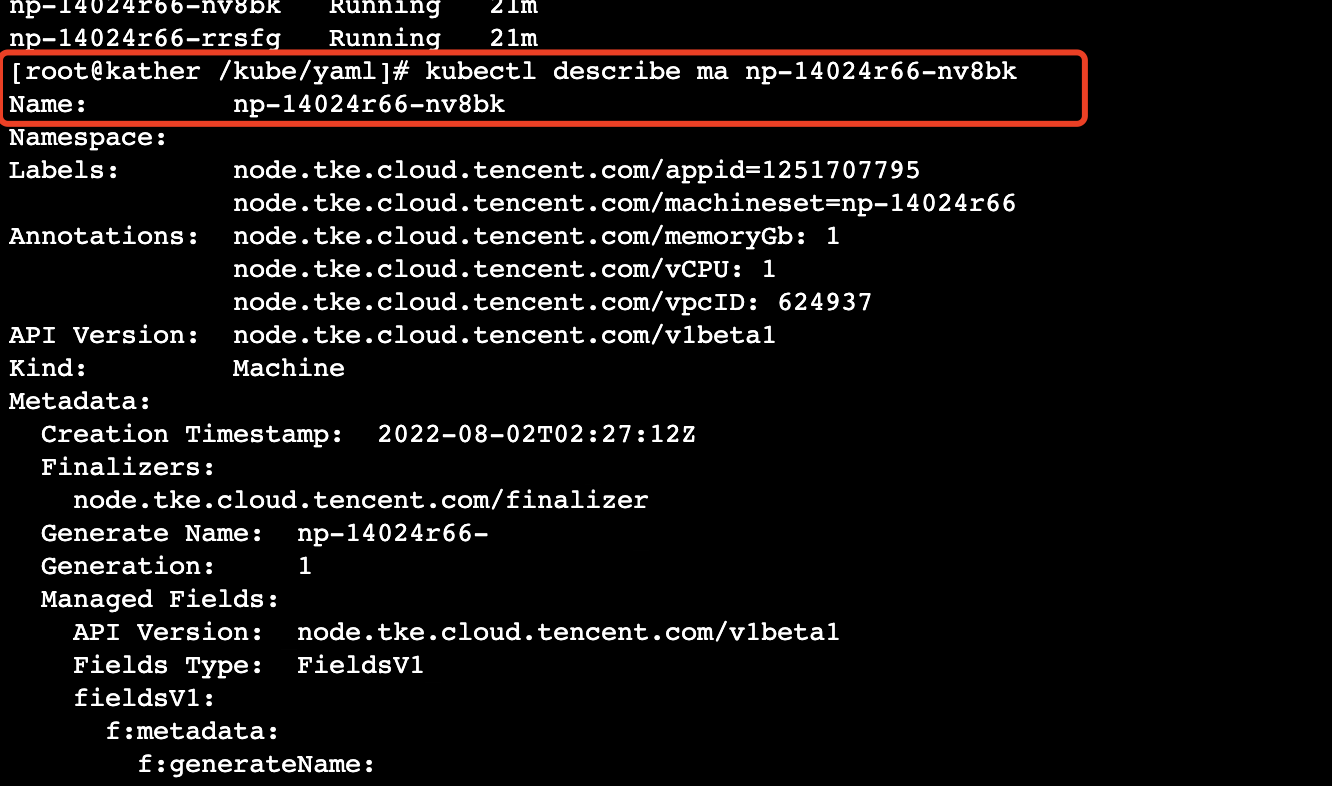

kubectl get machine command to view the machine list. At this time, the corresponding node already exists in the console.

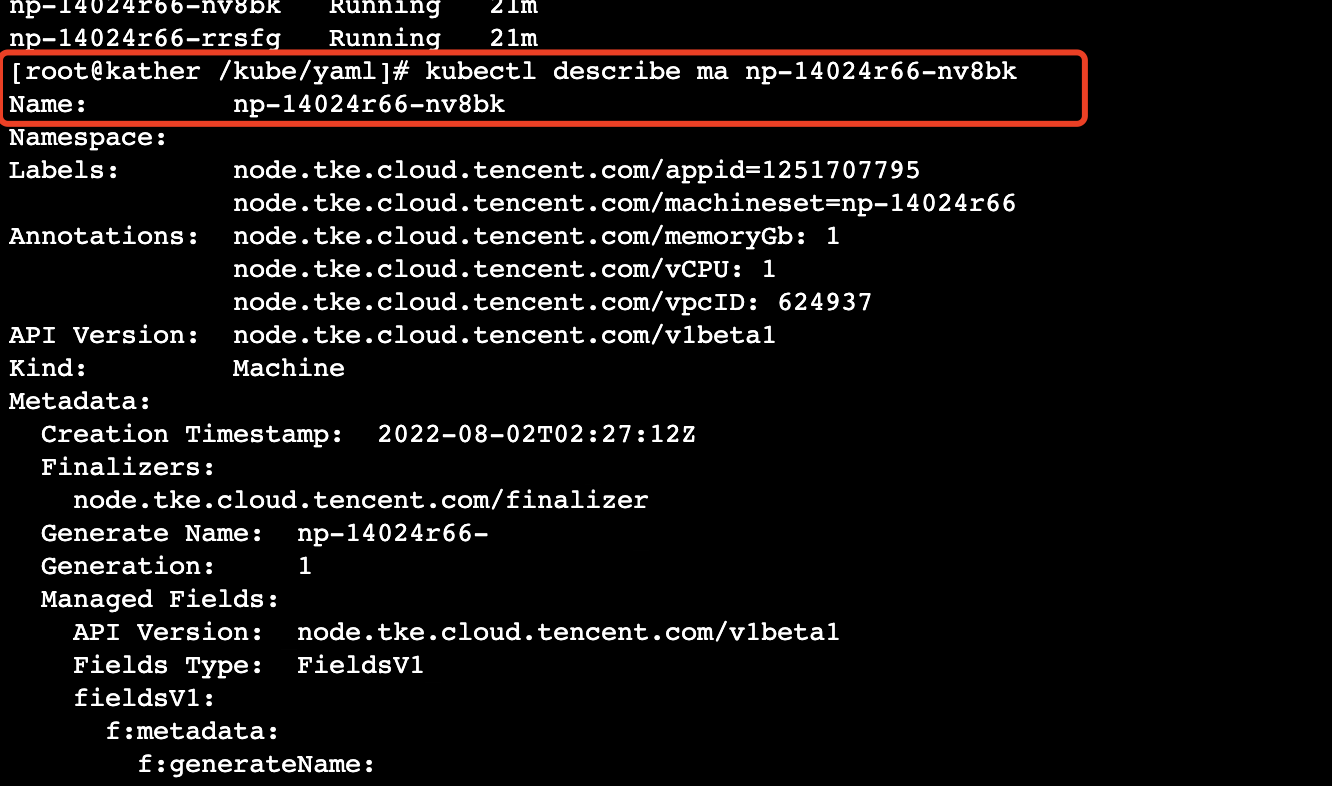

2. Run the `kubectl describe ma np-14024r66-nv8bk` command to view the description of the machine np-14024r66-nv8bk.

3. Run the `kubectl delete ma np-14024r66-nv8bk` command to delete the node.

Note:

If you delete a node directly without first adjusting the expected number of nodes in the node pool, the node pool detects that the actual number of nodes no longer matches the declared number, creates a new node, and adds it to the node pool. To delete a node, the following method is recommended:

1. Run the `kubectl scale --replicas=1 machineset/np-xxxxx` command to adjust the expected number of nodes.

2. Run the `kubectl delete machine np-xxxxxx-dtjhd` command to delete the corresponding node.
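The two recommended steps can be wrapped in a small helper so that they always run in the right order. This is a minimal sketch under stated assumptions: the function name `remove_native_node` and the `KUBECTL` override variable are illustrative and are not part of TKE or kubectl. The caller supplies the target node count, because the sketch does not read the current value from the cluster.

```shell
# Sketch: remove one native node without the node pool recreating it.
# KUBECTL is a hypothetical override, e.g. KUBECTL="echo kubectl" to print the commands only.
KUBECTL="${KUBECTL:-kubectl}"

# remove_native_node POOL NODE TARGET_REPLICAS
#   POOL             MachineSet name, e.g. np-xxxxx
#   NODE             Machine name, e.g. np-xxxxxx-dtjhd
#   TARGET_REPLICAS  expected number of nodes after the removal
remove_native_node() {
  if [ "$#" -ne 3 ]; then
    echo "usage: remove_native_node POOL NODE TARGET_REPLICAS" >&2
    return 2
  fi
  # Reject anything that is not a plain non-negative integer.
  case "$3" in
    ''|*[!0-9]*) echo "TARGET_REPLICAS must be a non-negative integer" >&2; return 2 ;;
  esac
  # Step 1: lower the expected number of nodes; stop if this fails.
  $KUBECTL scale --replicas="$3" "machineset/$1" || return 1
  # Step 2: delete the specific node.
  $KUBECTL delete machine "$2"
}
```

For example, `remove_native_node np-xxxxx np-xxxxxx-dtjhd 1` runs the same two commands as the steps above. Setting `KUBECTL="echo kubectl"` first prints the commands instead of executing them.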